![[Update] Amazon Nova 2 Lite has been announced #AWSreInvent](https://images.ctfassets.net/ct0aopd36mqt/33a7q65plkoztFWVfWxPWl/a718447bea0d93a2d461000926d65428/reinvent2025_devio_update_w1200h630.png?w=3840&fm=webp)

[Update] Amazon Nova 2 Lite has been announced #AWSreInvent

This page has been machine translated.

Hello, I'm Kakei (@takaakikakei).

Amazon Nova 2 Lite has been announced on the AWS News Blog, so I tried it right away. In this article, I'll give an overview of Nova 2 Lite and show how to use it in the playground.

Official Documentation

Model Overview

- Model ID: `amazon.nova-2-lite-v1:0`

- Input format: Text, images, video

- Maximum tokens: 1M (1 million) tokens

- Languages: 200+ (Japanese supported)

- Regions: Available in multiple regions, including cross-region inference within Japan

Trying the Playground

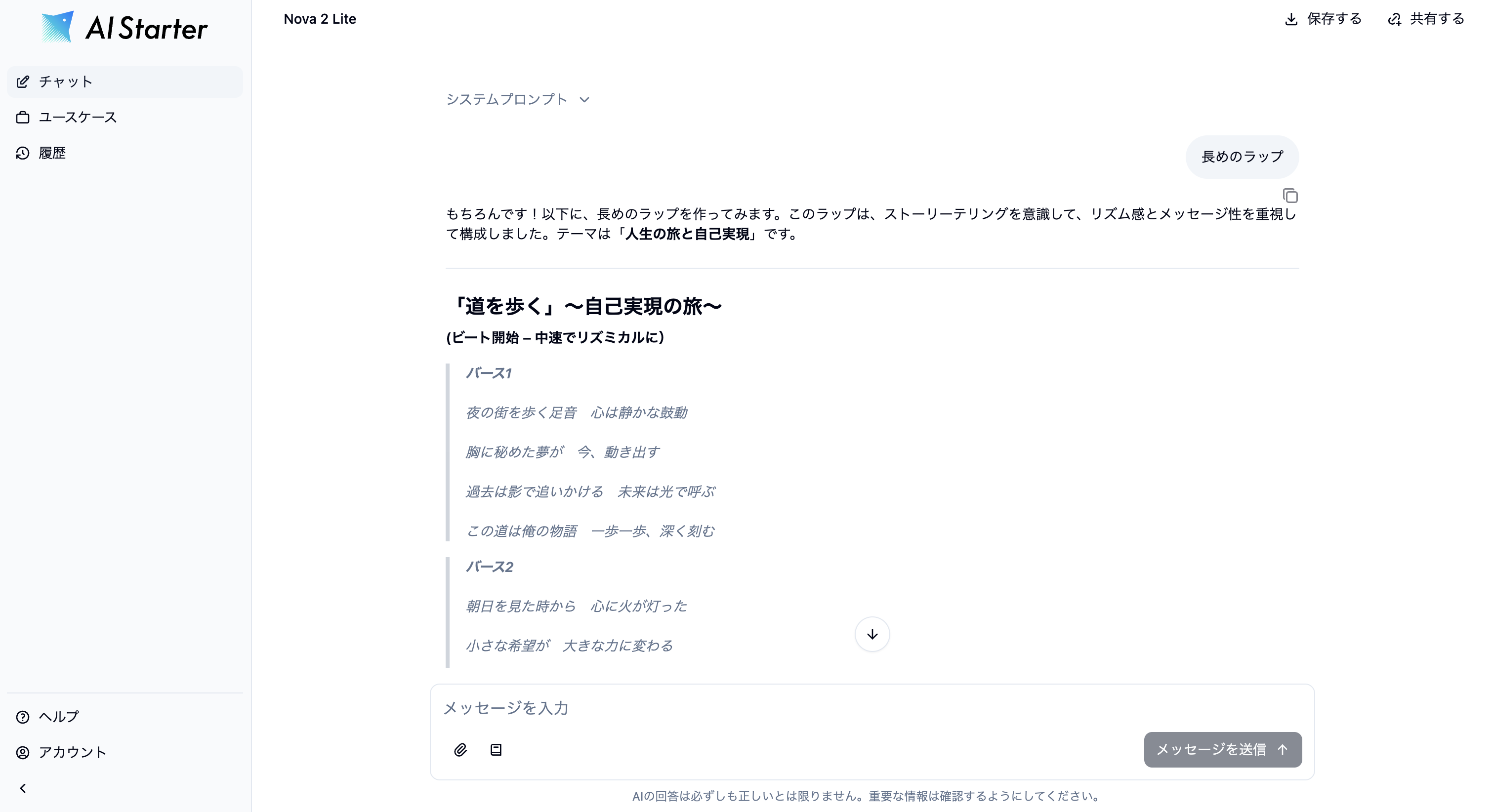

I tried Nova 2 Lite in the playground right away. After signing in to the AWS Management Console, I opened the Amazon Bedrock console in the Tokyo region and selected "Chat / Text playground". On the model selection screen, I found that Nova 2 Lite supports cross-region inference both within Japan and globally. This time, I chose the Japan option.
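When calling the model from code, the console's routing choices correspond to model or inference profile IDs. The sketch below assumes that Nova 2 Lite follows the naming convention Bedrock uses for other cross-region inference profiles, prefixing the base model ID with a geography (`jp.`, `us.`) or `global.`. These prefixed IDs are assumptions, so confirm the exact values in the Bedrock console before relying on them.

```python
BASE_MODEL_ID = "amazon.nova-2-lite-v1:0"

# Assumed prefixes, following the convention Bedrock uses for other
# cross-region inference profiles. Check the console for the real IDs.
PROFILE_PREFIXES = {
    "jp": "jp.",          # cross-region inference within Japan (assumed)
    "us": "us.",          # US cross-region inference (assumed)
    "global": "global.",  # global cross-region inference (assumed)
}


def inference_profile_id(scope: str) -> str:
    """Return the (assumed) inference profile ID for a routing scope."""
    try:
        return PROFILE_PREFIXES[scope] + BASE_MODEL_ID
    except KeyError:
        raise ValueError(
            f"unknown scope {scope!r}; expected one of {sorted(PROFILE_PREFIXES)}"
        ) from None


print(inference_profile_id("jp"))  # jp.amazon.nova-2-lite-v1:0
```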

I entered a prompt and tried text generation with Nova 2 Lite. True to its "Lite" name, the responses came back very quickly.

Next, let's look at the options in the sidebar. Under Built-in tools, the following tools are available. Nova Grounding, released in a recent update, enables web search for Nova series models. Code Interpreter appears here for the first time. It is probably similar to equivalent features from other providers, offering a code execution environment for analysis, calculations, and similar tasks.

- Nova Grounding

  - Update: After trying it in the playground, Nova Grounding currently appears to be available only through US cross-region inference from the US East (N. Virginia) region.

- Code Interpreter

The model's reasoning options are also notable. In addition to turning reasoning on or off, you can set the maximum reasoning effort to one of three levels: Low, Medium, and High.
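Through the Converse API, settings like these would be passed as model-specific fields. The sketch below assumes a `reasoningConfig` object with `type` and `maxReasoningEffort` keys inside `additionalModelRequestFields`, and that reasoning stays off when the field is omitted. Both the field layout and the default are assumptions, so check the Nova 2 documentation for the exact names.

```python
from typing import Optional

# The three effort levels offered in the playground.
EFFORT_LEVELS = ("low", "medium", "high")


def reasoning_fields(effort: Optional[str]) -> dict:
    """Build additionalModelRequestFields for reasoning (field names assumed).

    effort=None sends no reasoning fields (assumed to mean reasoning OFF);
    otherwise effort must be one of EFFORT_LEVELS, case-insensitive.
    """
    if effort is None:
        return {}
    level = effort.lower()
    if level not in EFFORT_LEVELS:
        raise ValueError(f"effort must be one of {EFFORT_LEVELS}, got {effort!r}")
    return {"reasoningConfig": {"type": "enabled", "maxReasoningEffort": level}}
```

For example, `reasoning_fields("Medium")` returns `{"reasoningConfig": {"type": "enabled", "maxReasoningEffort": "medium"}}`, which would be passed as `additionalModelRequestFields=` in a `converse` call.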

The usual options are also available, such as system prompts, Temperature, and prompt caching.
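In the Converse API, these options map onto request parameters: the system prompt goes in `system`, Temperature goes in `inferenceConfig`, and a `cachePoint` block marks the end of a cacheable prefix. The minimal sketch below only builds the keyword arguments. The helper name `build_converse_kwargs` is hypothetical, and whether Nova 2 Lite honors a cache point placed after the system prompt is an assumption worth confirming.

```python
def build_converse_kwargs(model_id: str, system_prompt: str, user_text: str,
                          temperature: float = 0.7, max_tokens: int = 1024,
                          cache_system_prompt: bool = True) -> dict:
    """Assemble keyword arguments for bedrock-runtime `converse` (hypothetical helper)."""
    system = [{"text": system_prompt}]
    if cache_system_prompt:
        # Content before this marker is eligible for prompt caching (assumed supported).
        system.append({"cachePoint": {"type": "default"}})
    return {
        "modelId": model_id,
        "system": system,
        "messages": [{"role": "user", "content": [{"text": user_text}]}],
        "inferenceConfig": {"temperature": temperature, "maxTokens": max_tokens},
    }
```

The returned dict can be unpacked into a call such as `client.converse(**kwargs)`, which keeps the option handling separate from the network call.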

Trying the API

Like earlier Nova models, Nova 2 Lite can also be used via the API. Our product AI-Starter uses the Converse API, and we were able to use Nova 2 Lite simply by switching the model ID. Code Interpreter may require a separate implementation, but the basic migration appears straightforward.
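Here is a minimal sketch of what "just switching the model" looks like with boto3's `converse`. It assumes AWS credentials are configured for the Tokyo region (`ap-northeast-1`) and that the Japan inference profile ID is `jp.amazon.nova-2-lite-v1:0`, which is an assumption, so use the ID shown in your console. The text is assembled from the response's content blocks, and any non-text blocks, such as reasoning output, are skipped.

```python
def extract_text(response: dict) -> str:
    """Join all text blocks from a Converse API response, ignoring other block types."""
    blocks = response["output"]["message"]["content"]
    return "".join(block["text"] for block in blocks if "text" in block)


def ask(prompt: str, model_id: str = "jp.amazon.nova-2-lite-v1:0",
        region: str = "ap-northeast-1") -> str:
    """Send one user turn via the Converse API; only model_id differs between Nova models."""
    import boto3  # imported lazily so extract_text works without the AWS SDK installed

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
    )
    return extract_text(response)
```

Calling `print(ask("Introduce Amazon Nova 2 Lite in one sentence."))` sends a real request. Moving an existing integration to another model only requires passing a different `model_id`.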

Conclusion

Thank you for reading to the end. It's great news that Nova 2 Lite is available for cross-region inference within Japan, and the built-in Code Interpreter is also interesting. I'd like to use Nova 2 Lite actively and find more use cases for it going forward.

I hope this article has been helpful to you. See you next time!