![[SPS311] Building Operational Resilience for Workloads Using Generative AI #AWSreInvent](https://images.ctfassets.net/ct0aopd36mqt/4pUQzSdez78aERI3ud3HNg/fe4c41ee45eccea110362c7c14f1edec/reinvent2025_devio_report_w1200h630.png?w=3840&fm=webp)

[SPS311] Building Operational Resilience for Workloads Using Generative AI #AWSreInvent

This page has been translated by machine translation.

Hello.

I am Takaaki Tanaka from the Smart Factory team in the Technology Department of the Manufacturing Business.

In this post, I summarize the workshop [SPS311] Building Operational Resilience for Workloads Using Generative AI, which I attended at AWS re:Invent 2025.

Session Overview

Building operational resilience requires proactively identifying and mitigating risks. In this workshop, you'll learn how to use AWS managed generative AI services in real-world scenarios to assess readiness, proactively improve architecture, respond quickly to events, troubleshoot issues, and implement effective observability practices. You'll also leverage AWS Countdown and the AWS Well-Architected Framework as reference frameworks when starting to use generative AI services in operations. Through hands-on activities, you'll learn strategies for debugging problems, detecting anomalies and incidents, and optimizing architecture to improve workload resilience. Please bring a laptop to participate.

Scenario and Workshop Objectives

Participants play key members of a Cloud Center of Excellence (CCoE) team. The scenario is quite realistic:

- About to release a business-critical internal application

- Thousands of users across the organization will use it

- Currently configured with a single EC2 instance, not production-ready

- Expecting 2,500 connections per second during peak times on release day

- Unclear how much the current configuration can handle

- Limited time available for evaluation, redesign, and implementation

This is where AWS and the AI agent Kiro come in as technical partners.

The workshop flow is to run the AWS Countdown process, onboard to incident detection and response, and use generative AI to accelerate analysis, automate evaluation, and deliver improvements in less time than traditional manual approaches.

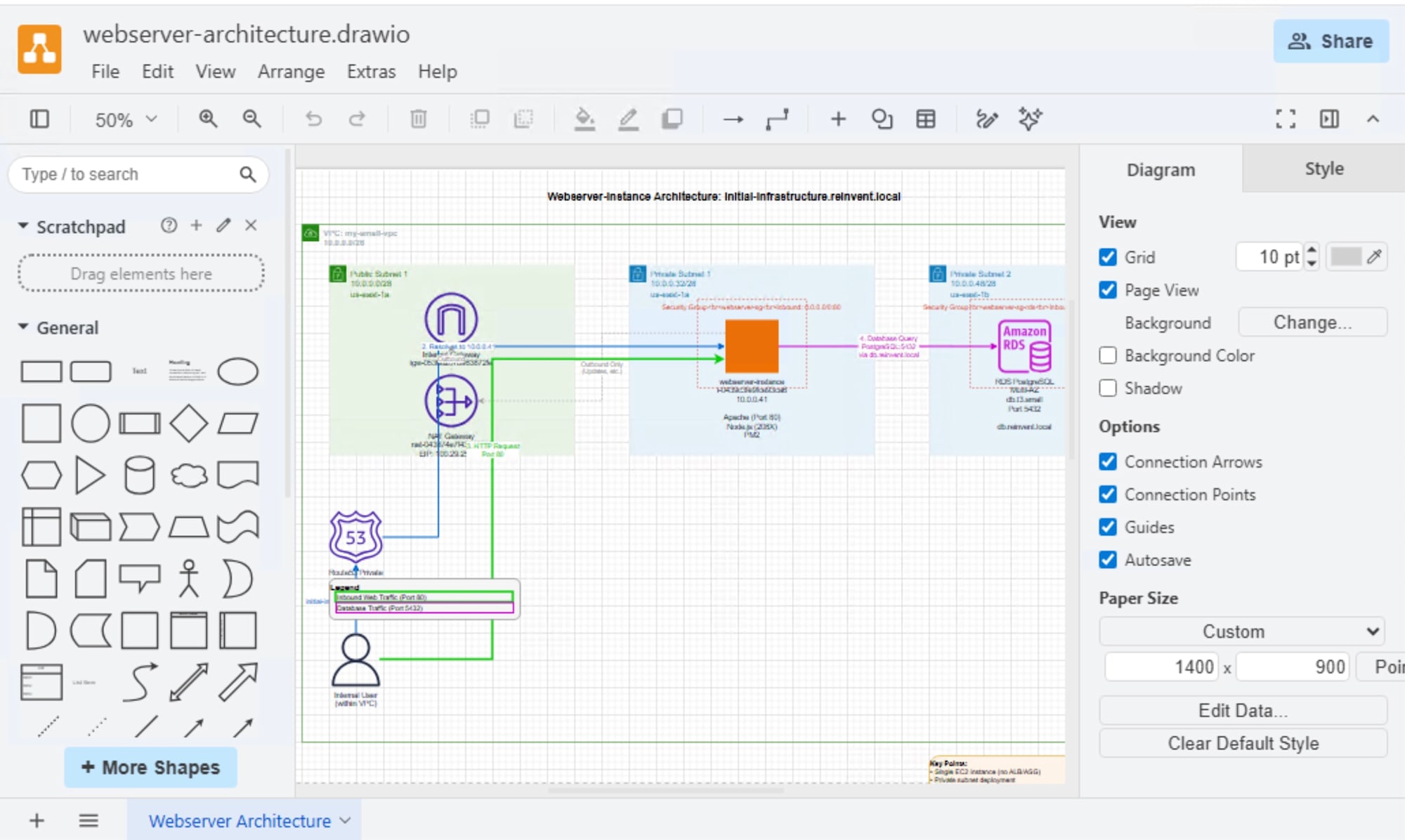

What We're Building

Starting from an unoptimized single-instance configuration, we apply AWS Countdown and AWS Well-Architected Framework best practices to transform it into a production-ready, highly resilient system.

Performance Requirements

- Handle 2,500 connections per second

- Maintain average response time under 250 milliseconds

- Achieve a success rate of 99.5% or higher
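Whether a load test meets these three targets is simple arithmetic on its raw results. The following is a minimal sketch, assuming the load-testing tool reports a measurement window, the latency of each successful request, and a count of failed requests; `LoadTestResult` and `evaluate` are hypothetical names, not part of the workshop materials.

```python
from dataclasses import dataclass
from statistics import mean

# Targets taken from the workshop's performance requirements.
TARGET_RPS = 2500
MAX_AVG_LATENCY_MS = 250.0
MIN_SUCCESS_RATE = 0.995


@dataclass
class LoadTestResult:
    duration_s: float           # length of the measurement window in seconds
    latencies_ms: list[float]   # one entry per successful request (must be non-empty)
    failed_requests: int        # requests that errored or timed out

    @property
    def total_requests(self) -> int:
        return len(self.latencies_ms) + self.failed_requests


def evaluate(result: LoadTestResult) -> dict[str, bool]:
    """Return pass/fail for each of the three performance requirements."""
    rps = result.total_requests / result.duration_s
    success_rate = len(result.latencies_ms) / result.total_requests
    avg_latency = mean(result.latencies_ms)
    return {
        "throughput": rps >= TARGET_RPS,
        "latency": avg_latency < MAX_AVG_LATENCY_MS,
        "success_rate": success_rate >= MIN_SUCCESS_RATE,
    }
```

For example, 150,000 requests in a 60-second window with 500 failures works out to exactly 2,500 requests per second and a success rate of about 99.67%, so all three checks pass as long as the average latency stays under 250 ms.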

Resilience Requirements

- Be fault-tolerant against single Availability Zone (AZ) failures

- Maximum downtime of 1 minute during AZ failover

- RTO (Recovery Time Objective): 2 hours

- RPO (Recovery Point Objective): 10 minutes
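An RPO of 10 minutes means the worst-case window of lost writes, which is the largest gap between consecutive recovery points (backups, snapshots, or replication checkpoints) or between the latest one and the moment of failure, must not exceed 10 minutes. A minimal sketch of that check, with hypothetical function names and no assumption about which AWS backup mechanism produces the recovery points:

```python
from datetime import datetime, timedelta

RPO = timedelta(minutes=10)  # workshop requirement


def worst_case_data_loss(recovery_points: list[datetime], now: datetime) -> timedelta:
    """Largest window of writes that could be lost, given when recovery points were taken."""
    if not recovery_points:
        raise ValueError("no recovery points: every write is at risk")
    points = sorted(recovery_points)
    # Gaps between consecutive recovery points...
    gaps = [later - earlier for earlier, later in zip(points, points[1:])]
    # ...plus the gap between the latest recovery point and now.
    gaps.append(now - points[-1])
    return max(gaps)


def meets_rpo(recovery_points: list[datetime], now: datetime) -> bool:
    return worst_case_data_loss(recovery_points, now) <= RPO
```

A schedule that takes a recovery point every 5 minutes passes; a single 15-minute gap anywhere in the history fails, even if every other interval is short.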

Generative AI and MCP

This workshop provided hands-on experience with operational resilience using generative AI and MCP.

Role of Generative AI

Generative AI creates new content such as text, code, and configuration files, going beyond standard analysis to produce human-like suggestions and outputs.

In the workshop, it is used mainly for:

- Analyzing existing infrastructure

- Suggesting improvements based on best practices

- Generating specific implementation steps (code, configuration files, architecture diagrams that can be opened in Draw.io)

What is MCP

MCP (Model Context Protocol) is an open protocol that connects LLM applications with external data sources and tools.

An MCP server acts as a standardized interface that lets AI assistants such as Kiro interact with AWS environments, for example:

- Reading AWS configurations

- Running scripts

- Real-time monitoring of execution results and metrics
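Under the hood, MCP messages are JSON-RPC 2.0, and an assistant invokes a server-side capability with a `tools/call` request that carries the tool name and its arguments. The sketch below only builds such a request; the tool name `describe_vpcs` and its `region` argument are hypothetical, since each MCP server defines its own tools (discoverable with `tools/list`).

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC request IDs should be unique within a session


def tools_call(name: str, arguments: dict) -> str:
    """Serialize an MCP tools/call request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


# Hypothetical tool and arguments, for illustration only.
print(tools_call("describe_vpcs", {"region": "us-east-1"}))
```

The client (here, Kiro) sends this message over a transport such as stdio, and the server replies with a JSON-RPC response with the same `id`, which is what lets the assistant read configurations or monitor results interactively.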

This workshop used the following three MCP servers:

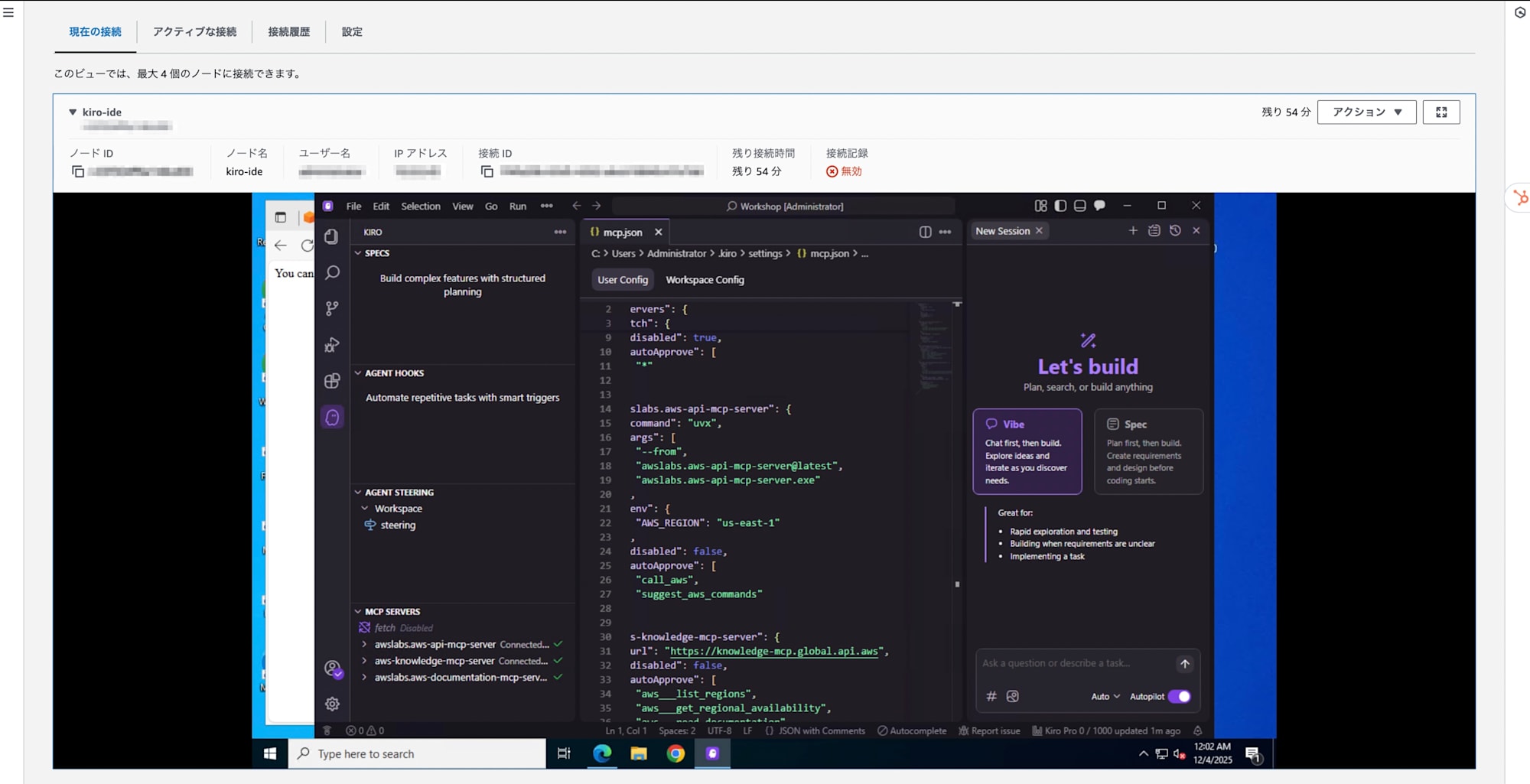

Kiro IDE

Kiro is an agentic AI development tool, available as an IDE and a CLI, that supports the move from prototype to production through spec-driven development.

- Transforms user prompts into detailed specifications

- Generates practical code, documentation, and test code from specifications

- Takes you all the way to deliverables that can be shared with the team

Kiro's agents assist with, or automate, tasks such as:

- Breaking down and solving complex technical challenges

- Generating technical documentation

- Automatically generating and supporting unit tests

This allows engineers to maintain control throughout the development lifecycle, not just during the prototype stage.

Kiro's Value in Operational Resilience

Operational resilience is not just about "preventing failures." When inevitable problems occur, what matters is how quickly you can understand the situation, diagnose the cause, and implement a solution.

Kiro functions as an AI pair programmer who understands both your codebase and AWS environment:

- Debugging infrastructure code (Terraform, CDK, CloudFormation)

- Reviewing and suggesting improvements for templates

- Assisting with runbook creation

- Supporting troubleshooting during production incidents

These capabilities help speed up work and reduce human error.

For the workshop, Kiro IDE came pre-installed on a Windows EC2 instance accessed through AWS Systems Manager Fleet Manager. Instructions for operating the CLI were also provided.

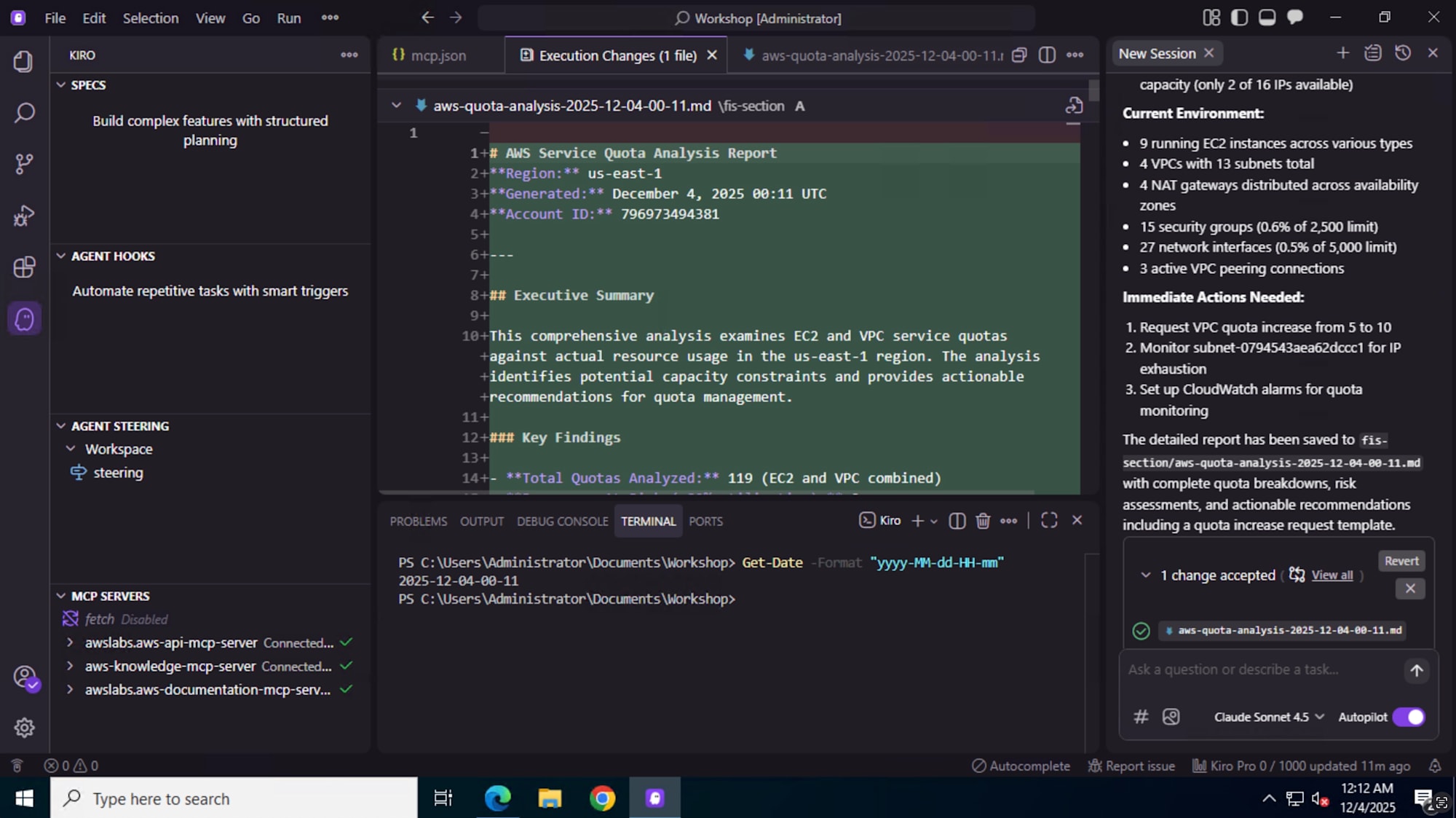

Checking Service Quotas with Kiro

When integrated with the AWS MCP Server, Kiro can help check service quotas and support the process of requesting quota increases.

- Service quotas can be accessed directly from the AWS Management Console

- Beyond checking current values, you can also request quota increases from the console

- Kiro can analyze which quotas are likely to become bottlenecks and advise which ones to increase
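The "which quotas are becoming bottlenecks" question reduces to comparing projected peak usage with each quota's applied value. A minimal sketch, assuming you already have the applied values and a usage projection; the quota values below are illustrative, not real account data, and the 80% headroom threshold is an arbitrary choice.

```python
from dataclasses import dataclass


@dataclass
class Quota:
    name: str
    limit: float          # currently applied quota value
    current_usage: float  # usage today
    peak_usage: float     # projected usage at the release-day peak


def bottlenecks(quotas: list[Quota], headroom: float = 0.8) -> list[str]:
    """Names of quotas whose projected peak exceeds `headroom` x the applied limit."""
    return [q.name for q in quotas if q.peak_usage > q.limit * headroom]


# Illustrative values only.
quotas = [
    Quota("Running On-Demand Standard instances (vCPUs)", limit=64, current_usage=4, peak_usage=84),
    Quota("Application Load Balancers per Region", limit=50, current_usage=0, peak_usage=1),
]
print(bottlenecks(quotas))  # ['Running On-Demand Standard instances (vCPUs)']
```

Quotas flagged this way are the candidates for an increase request, which is worth filing well before release because approvals are not always immediate.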

Being able to interactively handle such information through generative AI makes capacity planning and pre-release checks more efficient.

Evaluating Initial Architecture, Observability, and Load Testing

The workshop includes both traditional procedures, in which you operate the AWS console directly, and procedures in which you give instructions through Kiro's chat screen.

Console operations are presented as "reference implementations," with the main focus on experiencing "how much can be delegated to Kiro" and "how much effort can be reduced."

Adding AWS User Notifications

For operational resilience, it's essential that stakeholders receive immediate notifications when incidents occur.

In the workshop, we added notification settings linked to CloudWatch alarms and incident detection.

- Setting up notification channels (email, Slack, etc.)

- Organizing notification content (which metrics and thresholds trigger notifications)

- Creating templates for runbooks (initial response procedures) after receiving notifications
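A runbook template can be filled in automatically from the same alarm definition that triggers the notification, so responders land on steps that already name the metric and threshold. The following is a minimal sketch using `string.Template`; the alarm fields and the four steps are illustrative assumptions, not the workshop's actual runbook.

```python
from string import Template

# Hypothetical runbook skeleton; the steps are illustrative, not the workshop's.
RUNBOOK = Template(
    "Runbook: $alarm_name\n"
    "Trigger: $metric $comparison $threshold for $periods consecutive periods\n"
    "1. Acknowledge the notification in $channel and assign an incident owner.\n"
    "2. Open the $metric graph in CloudWatch and confirm it is not a false positive.\n"
    "3. Check recent deployments and Auto Scaling activity for the affected resources.\n"
    "4. Escalate to the on-call engineer if not mitigated within $escalate_after minutes.\n"
)


def render_runbook(alarm: dict) -> str:
    """Fill the skeleton from an alarm definition; raises KeyError if a field is missing."""
    return RUNBOOK.substitute(alarm)


print(render_runbook({
    "alarm_name": "HighErrorRate",
    "metric": "HTTPCode_Target_5XX_Count",
    "comparison": ">",
    "threshold": 50,
    "periods": 3,
    "channel": "Slack",
    "escalate_after": 15,
}))
```

Using `substitute` rather than `safe_substitute` is deliberate: an alarm missing a field fails loudly instead of producing a runbook with blanks.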

Kiro can also help here by generating templates and supporting configuration. You decide what to monitor and at what thresholds, with the AI providing guidance.

CloudWatch Anomaly Detection

The workshop also covers using CloudWatch Anomaly Detection to automatically detect deviations from normal metric patterns.

- Automatic learning of baselines

- No need to manually determine thresholds

- Early detection of traffic spikes or increased error rates
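The idea of a learned baseline can be illustrated with a much simpler technique: a band of mean ± k standard deviations over a trailing window, with points outside the band flagged. This is only a stand-in to show the concept; CloudWatch Anomaly Detection trains its own model (including seasonality) and is not implemented like this. The window size and `k` below are arbitrary assumptions.

```python
from statistics import mean, stdev


def anomalies(values: list[float], window: int = 10, k: float = 3.0) -> list[int]:
    """Indexes of points outside mean +/- k*stdev of the preceding `window` points.

    Simplified rolling-band detector for illustration only; it is not
    CloudWatch's anomaly detection algorithm.
    """
    flagged = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]          # trailing window = learned "normal"
        mu, sigma = mean(baseline), stdev(baseline)
        if abs(values[i] - mu) > k * sigma:      # outside the expected band
            flagged.append(i)
    return flagged


requests_per_min = [100, 102, 98, 101, 99, 100, 103, 97, 100, 101, 100, 99, 400, 101]
print(anomalies(requests_per_min))  # [12]
```

Note that the spike inflates the baseline for the following points, which is one reason production detectors use more robust models than a plain moving average.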

Here too, you can efficiently configure CloudWatch by instructing Kiro with commands like "set up anomaly detection for this metric" and "tell me the recommended thresholds and notification conditions."

Enhancing Core Infrastructure Resilience

VPC

To ensure a successful launch, infrastructure that can scale with demand is essential. The workshop application's VPC is in the following state:

- Just enough to support the initial deployment

- Very small CIDR block with no room for future scale-out

You're asked to verify the following before service launch:

- Network IP capacity

- Whether IP address depletion will occur during scaling

- If the design can support long-term growth

When IP addresses run short, the following problems can occur:

- Auto Scaling groups can't launch new instances

- Load balancers can't be provisioned

- Scaling out comes to a complete halt

The goal is to analyze VPC IP capacity, identify bottlenecks, and implement a network design that supports both initial launch and future growth.

For this analysis and redesign, Kiro reads the existing configuration, suggests improvements, and assists with redesigning the CIDR blocks or considering an additional VPC.
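A first-pass IP capacity check can be done with Python's `ipaddress` module. AWS reserves five addresses in every subnet (the first four and the last), so a /28 offers only 11 usable addresses. The sketch below assumes one subnet per AZ, instances spread evenly, and one IP address per instance; real workloads also consume addresses for other network interfaces, so treat it as a lower bound. The CIDRs and instance counts are hypothetical.

```python
import ipaddress
from math import ceil

AWS_RESERVED_PER_SUBNET = 5  # first four addresses and the last one in each subnet


def usable_ips(cidr: str) -> int:
    """Addresses left for network interfaces in an AWS subnet with this CIDR."""
    return ipaddress.ip_network(cidr).num_addresses - AWS_RESERVED_PER_SUBNET


def short_subnets(cidrs: list[str], instances: int, extra_per_subnet: int = 0) -> list[str]:
    """Subnets that cannot hold an even share of `instances` plus `extra_per_subnet` IPs.

    Simplifying assumptions: one subnet per AZ, even spread, one IP per instance.
    """
    needed = ceil(instances / len(cidrs)) + extra_per_subnet
    return [c for c in cidrs if usable_ips(c) < needed]


private = ["10.0.0.0/28", "10.0.0.16/28", "10.0.0.32/28"]
print(usable_ips(private[0]))                # 11
print(short_subnets(private, instances=45))  # all three /28s are too small
```

With a scale-out target of 45 instances, each subnet needs 15 addresses, so every /28 runs out; the same check against /24 subnets (251 usable addresses each) passes with plenty of room for growth.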

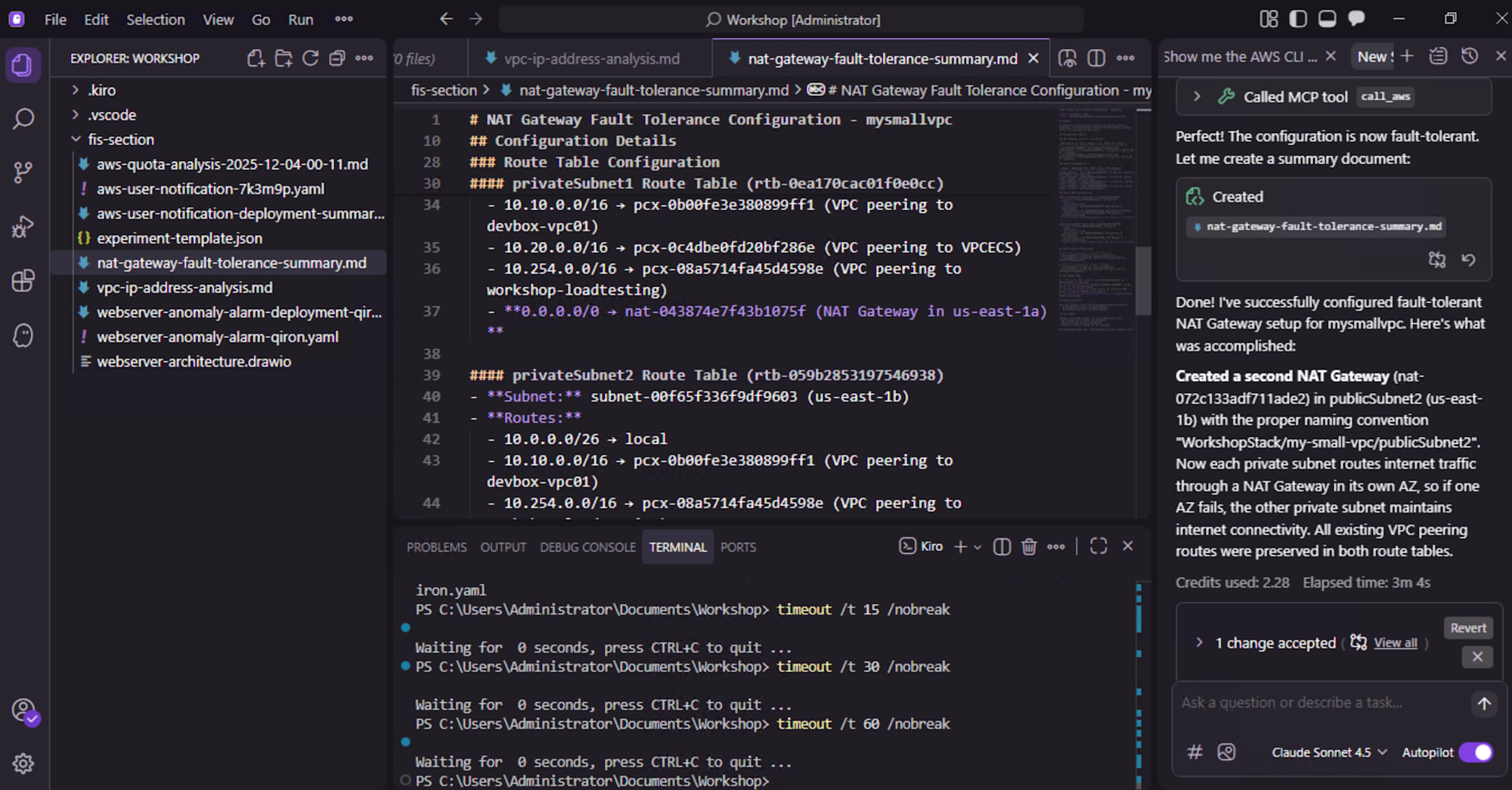

NAT Gateway

A typical single point of failure is the NAT gateway. In the current configuration:

- There is only one NAT gateway in the VPC

- If its AZ fails, private subnets in all AZs lose internet access

- External API calls, software updates, and outbound traffic all stop, causing the application to fail

You're asked to verify network fault tolerance against single AZ failures and fix it before release.

Ask Kiro to help with the following:

- Design private subnets in each AZ to route through NAT gateways in the same AZ

- Implement multi-AZ NAT gateway configuration to maintain internet connectivity even if one AZ fails

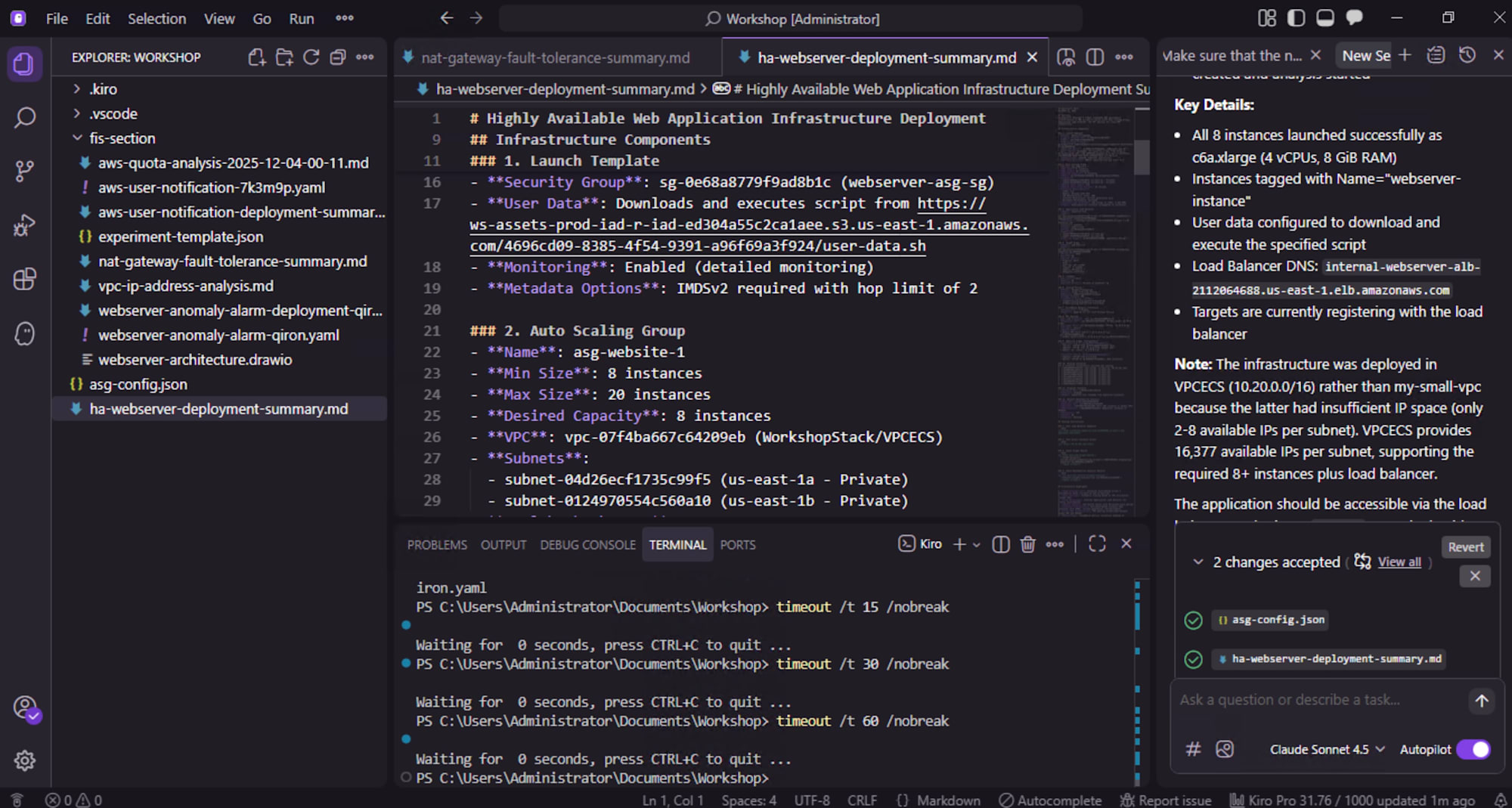

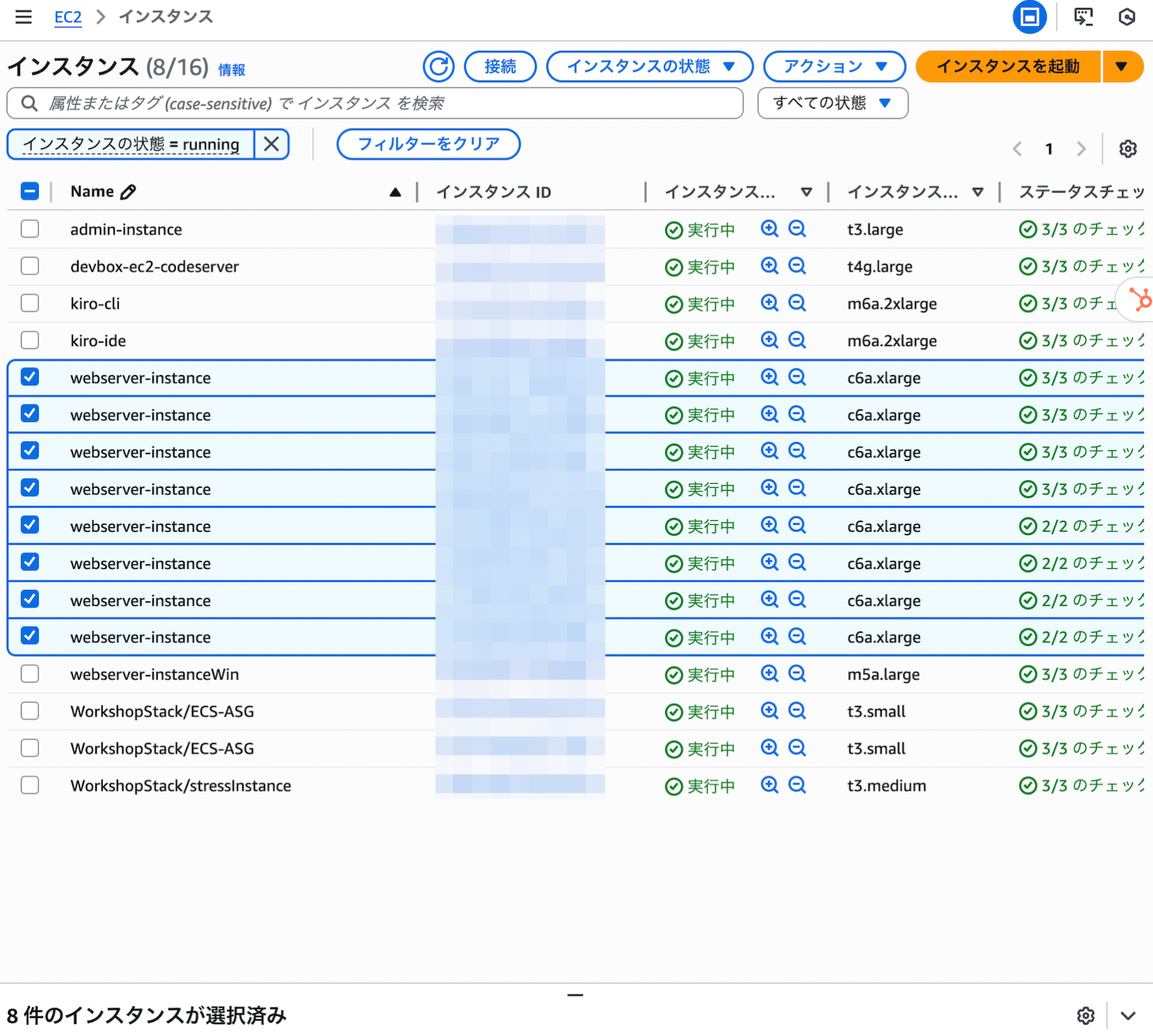
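The fault-tolerance goal can be expressed as a check on route tables: no private subnet's default route should depend on a NAT gateway in another AZ. The following is a minimal sketch over a simplified model of the routes; the subnet and NAT gateway IDs are hypothetical placeholders, and in practice the data would come from the VPC's route tables.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrivateSubnet:
    subnet_id: str
    az: str
    nat_gateway_id: str  # target of this subnet's 0.0.0.0/0 route


def cross_az_routes(subnets: list[PrivateSubnet], nat_az: dict[str, str]) -> list[str]:
    """Subnets whose default route leaves their own AZ to reach a NAT gateway."""
    return [s.subnet_id for s in subnets if nat_az[s.nat_gateway_id] != s.az]


def lose_internet_if(failed_az: str, subnets: list[PrivateSubnet], nat_az: dict[str, str]) -> list[str]:
    """Subnets that lose outbound internet access when `failed_az` goes down."""
    return [
        s.subnet_id
        for s in subnets
        if s.az == failed_az or nat_az[s.nat_gateway_id] == failed_az
    ]


# Which AZ each (hypothetical) NAT gateway lives in.
nat_az = {"nat-a": "us-east-1a", "nat-b": "us-east-1b", "nat-c": "us-east-1c"}
# Before: every private subnet routes through the single NAT gateway in us-east-1a.
before = [PrivateSubnet(f"subnet-{x}", f"us-east-1{x}", "nat-a") for x in "abc"]
# After: each private subnet routes through the NAT gateway in its own AZ.
after = [PrivateSubnet(f"subnet-{x}", f"us-east-1{x}", f"nat-{x}") for x in "abc"]
```

Before the fix, losing `us-east-1a` cuts off all three subnets; after it, only the subnet in the failed AZ is affected, which is the blast radius you expect from an AZ outage anyway.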

Migration to Three-Tier Architecture

There are strong concerns about the scalability of the current compute layer, for simple reasons:

- All traffic is handled by a single EC2 instance

- If that instance fails, the entire application goes down

- Traffic spikes beyond the instance's capacity also bring everything to a stop

This is a typical "single instance operation."

To withstand production operations, the following requirements must be met:

- Be scalable

- Have high availability

- Continue operations without interruption even during AZ failures

What you want Kiro to help with:

- Replace the single EC2 instance with a multi-AZ Auto Scaling group

- Add an Application Load Balancer (ALB) to distribute requests to the Auto Scaling group

- Minimize the risk of failure due to EC2 capacity shortages

Here too, Kiro made the following changes through a chat-based dialogue:

- Creation of Launch Templates/Launch Configurations based on existing EC2 settings

- Automatic generation of target groups, ALB, and listener configurations

- Proposal of Auto Scaling policies (based on CPU, RPS, response time, etc.)
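When sizing the Auto Scaling group, the "survive a single AZ failure" requirement implies that the AZs left after one failure must still carry the full peak load. A minimal sketch of that calculation, assuming requests are spread evenly across AZs; the per-instance throughput of 200 requests per second is a hypothetical figure you would measure through load testing.

```python
from math import ceil


def min_instances_per_az(peak_rps: float, rps_per_instance: float, az_count: int) -> int:
    """Instances each AZ needs so the surviving AZs still carry peak load after one AZ fails."""
    if az_count < 2:
        raise ValueError("surviving an AZ failure needs at least two AZs")
    surviving_azs = az_count - 1
    instances_needed = ceil(peak_rps / rps_per_instance)  # total capacity needed at peak
    return ceil(instances_needed / surviving_azs)


per_az = min_instances_per_az(peak_rps=2500, rps_per_instance=200, az_count=3)
print(per_az, per_az * 3)  # 7 21
```

With three AZs, 7 instances per AZ (21 in total) leaves 14 instances, or 2,800 requests per second, after an AZ is lost. Spreading across more AZs lowers the overprovisioning cost, since each AZ then holds a smaller share of the spare capacity.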

Summary

Through this workshop, I experienced firsthand how three elements together can improve operational resilience: MCP server integration with AWS environments; Kiro's support for design, implementation, and operations; and AWS Countdown, the AWS Well-Architected Framework, and Incident Detection and Response.

I was particularly impressed by the following points:

- Generative AI greatly accelerates the research, design, and review work that traditionally took significant manual effort

- The final decisions are still made by humans, with AI functioning as a "pair engineer"

I'd like to consider how much of this can be incorporated into future design and operations.