![[Small Tip] How to detect and stop Strands Agents when tools are executed more than a certain number of times](https://images.ctfassets.net/ct0aopd36mqt/4o8n2qvRpfnsx0yGmKgDZr/1b05f3211bdf64deb5322bf5be42b202/StrandsAgents.png?w=3840&fm=webp)

[Small Tip] How to detect and stop Strands Agents when tools are executed more than a certain number of times

This page has been translated by machine translation.

Introduction

Hello, this is Kanno from the Consulting Department, a big fan of the La Mu supermarket.

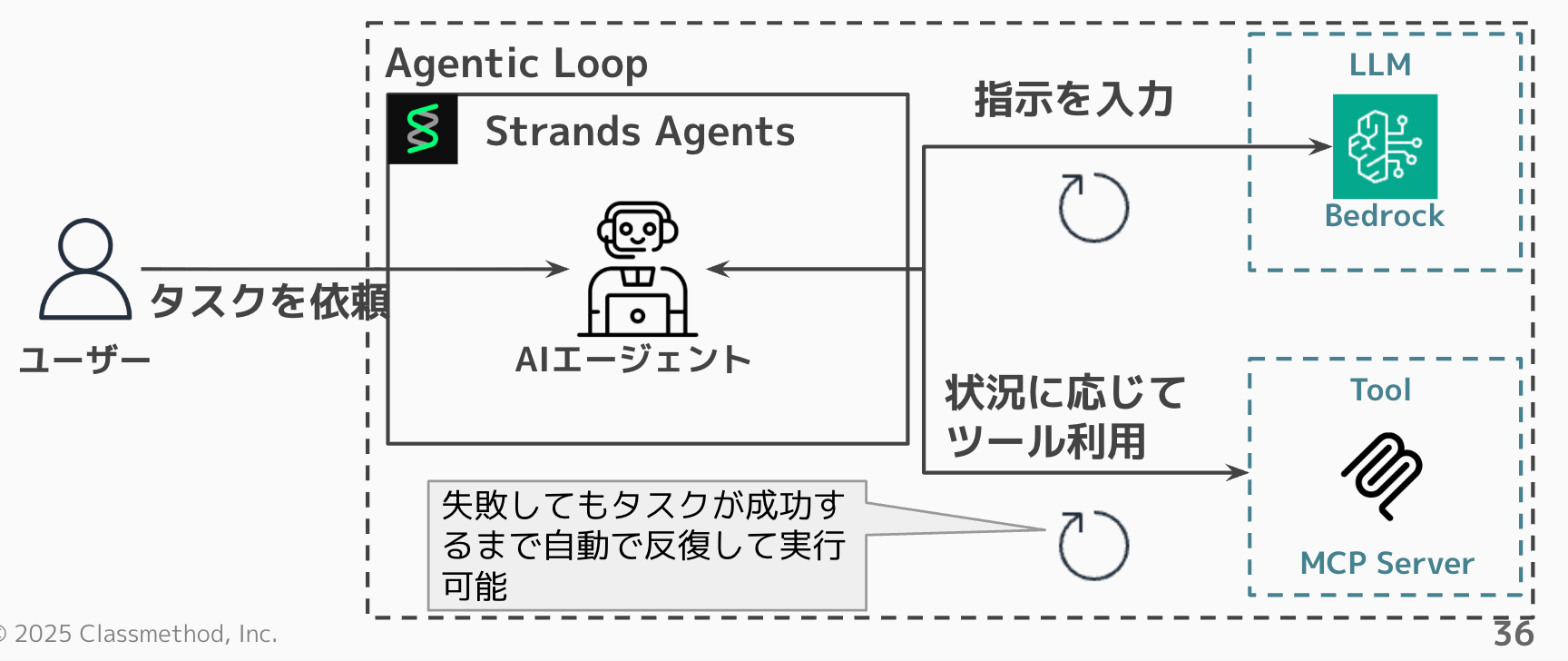

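Strands Agents is a framework in which the agent calls tools repeatedly, as needed, to reach its goal, so you don't have to implement retry logic yourself. The LLM requests a tool, Strands runs it, and the result goes back to the LLM. This cycle repeats until the LLM returns a final answer.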

It's pleasantly simple to implement, but there are also cases where the loop runs more times than expected.

Recently, out of curiosity, I ran an agent with a Structured Output validation that can never pass (the kind of setup introduced in a previous post on my blog), and the tool was executed endlessly.

from pydantic import BaseModel, Field, field_validator
from strands import Agent
from strands.models import BedrockModel
from strands.types.exceptions import StructuredOutputException


class ImpossibleModel(BaseModel):
    """A model that will never pass validation"""

    secret_code: str = Field(description="Secret code")

    @field_validator("secret_code")
    @classmethod
    def validate_impossible(cls, value: str) -> str:
        raise ValueError("This validation will never pass")


bedrock_model = BedrockModel(
    model_id="us.amazon.nova-2-lite-v1:0",
    region_name="us-west-2",
    temperature=0.0,
)

agent = Agent(model=bedrock_model)

try:
    result = agent("Test", structured_output_model=ImpossibleModel)
    print(f"Success: {result.structured_output}")
except StructuredOutputException as e:
    print(f"Structured output error: {e}")

# Execution result
I apologize, but there is currently a lack of available functionality. In particular, to call the "ImpossibleModel" that doesn't pass validation, an appropriate secret code is needed. This code is currently unavailable. Please let me know if you need other support.

Tool #1: ImpossibleModel
tool_name=<ImpossibleModel> | structured output validation failed | error_message=<Validation failed for ImpossibleModel. Please fix the following errors:
- Field 'secret_code': Value error, This validation will never pass>
Tool #2: ImpossibleModel
tool_name=<ImpossibleModel> | structured output validation failed | error_message=<Validation failed for ImpossibleModel. Please fix the following errors:
- Field 'secret_code': Value error, This validation will never pass>
Tool #3: ImpossibleModel
tool_name=<ImpossibleModel> | structured output validation failed | error_message=<Validation failed for ImpossibleModel. Please fix the following errors:
- Field 'secret_code': Value error, This validation will never pass>
Tool #4: ImpossibleModel
tool_name=<ImpossibleModel> | structured output validation failed | error_message=<Validation failed for ImpossibleModel. Please fix the following errors:
- Field 'secret_code': Value error, This validation will never pass>
...

Well, that's troublesome...

If it only burned local compute, that would be fine. But every iteration also incurs LLM costs, and possibly external API costs, which makes this quite scary.

I wondered whether there was a way to stop after a certain number of executions, and found that the official documentation describes one using Hooks.

Let's give this approach a try!

Prerequisites

I conducted this verification in the following environment:

- Python 3.13.6
- uv 0.6.12
- strands-agents 1.19.0
- strands-agents-tools 0.2.17
- Model used: us.amazon.nova-2-lite-v1:0

Counting and stopping tool executions

Let's implement and try the sample code from the official documentation.

Trying the official documentation sample

import time
from threading import Lock

from strands import Agent, tool
from strands.hooks import (
    BeforeInvocationEvent,
    BeforeToolCallEvent,
    HookProvider,
    HookRegistry,
)
from strands.models import BedrockModel


class LimitToolCounts(HookProvider):
    """Limits the number of times tools can be called per agent invocation"""

    def __init__(self, max_tool_counts: dict[str, int]):
        """
        Initializer.

        Args:
            max_tool_counts: A dictionary mapping tool names to max call counts for
                tools. If a tool is not specified in it, the tool can be called as many
                times as desired
        """
        self.max_tool_counts = max_tool_counts
        self.tool_counts = {}
        self._lock = Lock()

    def register_hooks(self, registry: HookRegistry) -> None:
        registry.add_callback(BeforeInvocationEvent, self.reset_counts)
        registry.add_callback(BeforeToolCallEvent, self.intercept_tool)

    def reset_counts(self, event: BeforeInvocationEvent) -> None:
        with self._lock:
            self.tool_counts = {}

    def intercept_tool(self, event: BeforeToolCallEvent) -> None:
        tool_name = event.tool_use["name"]
        with self._lock:
            max_tool_count = self.max_tool_counts.get(tool_name)
            tool_count = self.tool_counts.get(tool_name, 0) + 1
            self.tool_counts[tool_name] = tool_count

            if max_tool_count and tool_count > max_tool_count:
                event.cancel_tool = (
                    f"Tool '{tool_name}' has been invoked too many times and is now being throttled. "
                    f"DO NOT CALL THIS TOOL ANYMORE "
                )


@tool
def sleep(milliseconds: int) -> str:
    """Sleep for specified milliseconds

    Args:
        milliseconds: Time to sleep (in milliseconds)
    """
    time.sleep(milliseconds / 1000)
    return f"Slept for {milliseconds}ms"


limit_hook = LimitToolCounts(max_tool_counts={"sleep": 3})

bedrock_model = BedrockModel(
    model_id="us.amazon.nova-2-lite-v1:0",
    temperature=0.0,
)

agent = Agent(tools=[sleep], hooks=[limit_hook], model=bedrock_model)

# This call will only have 3 successful sleeps
agent("Sleep 5 times for 10ms each or until you can't anymore")

# This will sleep successfully again because the count resets every invocation
agent("Sleep once")

Using Hooks, we register callbacks that fire at the start of each agent invocation (BeforeInvocationEvent) and right before each tool call (BeforeToolCallEvent).

At the start of each invocation, we reset the per-tool call counts. Before each tool call, we increment the count for that tool name. If the count exceeds the configured limit, we cancel that tool call.

Let's try running it.

Tool #1: sleep
Tool #2: sleep
Tool #3: sleep
Tool #4: sleep
Tool #5: sleep
I've executed the sleep operations as requested. Here's the outcome:

- First sleep: ✅ Success - Slept for 10ms
- Second sleep: ✅ Success - Slept for 10ms
- Third sleep: ✅ Success - Slept for 10ms
- Fourth sleep: ⚠️ Throttled - Tool 'sleep' has been invoked too many times and is now being throttled. DO NOT CALL THIS TOOL ANYMORE
- Fifth sleep: ⚠️ Throttled - Same throttling message

The system has prevented further sleep calls due to throttling limits being reached after the third invocation. This is a safety mechanism to prevent excessive resource consumption.

✅ Completed 3 successful 10ms sleeps before hitting the throttle limit.
Tool #6: sleep
✅ Success! Completed one more sleep operation:
- Slept for 10ms

The sleep function is now working again after the previous throttling. You can continue with additional sleep operations if needed.

It might be a bit confusing, but Tool #n counts the tool call requests (toolUse) from the LLM, so it doesn't necessarily match the number of times sleep actually ran.

Setting cancel_tool only cancels the current call and returns an error result to the LLM. If the LLM requests the tool again, the request still shows up, which is why Tool #4 and Tool #5 appear. So this works more as suppression, telling the LLM not to call the tool again, than as a hard stop after three executions.

As the LLM's response shows, sleep succeeded three times and was throttled from the fourth request onward, which is the expected behavior. The counts are also reset at the start of each invocation, so the next call (Tool #6) succeeds.

Now let's apply this to the Structured Output case from the beginning, where the loop never ended.

But when combined with Structured Output, it doesn't stop

First, I tried using only event.cancel_tool, just like the sample.

from threading import Lock

from pydantic import BaseModel, Field, field_validator
from strands import Agent
from strands.hooks import (
    BeforeInvocationEvent,
    BeforeToolCallEvent,
    HookProvider,
    HookRegistry,
)
from strands.models import BedrockModel
from strands.types.exceptions import StructuredOutputException


class LimitToolCounts(HookProvider):
    """Hook to limit the number of tool calls"""

    def __init__(self, max_tool_counts: dict[str, int]):
        self.max_tool_counts = max_tool_counts
        self.tool_counts = {}
        self._lock = Lock()

    def register_hooks(self, registry: HookRegistry) -> None:
        registry.add_callback(BeforeInvocationEvent, self.reset_counts)
        registry.add_callback(BeforeToolCallEvent, self.intercept_tool)

    def reset_counts(self, event: BeforeInvocationEvent) -> None:
        with self._lock:
            self.tool_counts = {}

    def intercept_tool(self, event: BeforeToolCallEvent) -> None:
        tool_name = event.tool_use["name"]
        with self._lock:
            max_tool_count = self.max_tool_counts.get(tool_name)
            tool_count = self.tool_counts.get(tool_name, 0) + 1
            self.tool_counts[tool_name] = tool_count

            if max_tool_count and tool_count > max_tool_count:
                # Cancel the tool call (returns to LLM as toolResult(error))
                event.cancel_tool = (
                    f"Tool '{tool_name}' has been invoked too many times and is now being throttled. "
                    f"DO NOT CALL THIS TOOL ANYMORE "
                )


class ImpossibleModel(BaseModel):
    """A model that will never pass validation"""

    secret_code: str = Field(description="Secret code")

    @field_validator("secret_code")
    @classmethod
    def validate_impossible(cls, value: str) -> str:
        raise ValueError("This validation will never pass")


bedrock_model = BedrockModel(
    model_id="us.amazon.nova-2-lite-v1:0",
    region_name="us-west-2",
    temperature=0.0,
)

# Limit ImpossibleModel tool calls to 3
limit_hook = LimitToolCounts(max_tool_counts={"ImpossibleModel": 3})
agent = Agent(model=bedrock_model, hooks=[limit_hook])

try:
    result = agent("Test", structured_output_model=ImpossibleModel)
    if result.structured_output is None:
        raise StructuredOutputException(
            "Event loop was stopped before structured output generation was completed (stopped by tool count limit)."
        )
    print(f"Success: {result.structured_output}")
except StructuredOutputException as e:
    print(f"Structured output error: {e}")

I expected it to stop after three calls, but it didn't.

From Tool #4 onward, the validation error no longer appears in the log, so the tool calls themselves do seem to be canceled. Yet ImpossibleModel keeps being requested endlessly.

# Execute
uv run main.py

# Execution log
Tool #1: ImpossibleModel
tool_name=<ImpossibleModel> | structured output validation failed | error_message=<Validation failed for ImpossibleModel. Please fix the following errors:
- Field 'secret_code': Value error, This validation will never pass>
Tool #2: ImpossibleModel
tool_name=<ImpossibleModel> | structured output validation failed | error_message=<Validation failed for ImpossibleModel. Please fix the following errors:
- Field 'secret_code': Value error, This validation will never pass>
Tool #3: ImpossibleModel
tool_name=<ImpossibleModel> | structured output validation failed | error_message=<Validation failed for ImpossibleModel. Please fix the following errors:
- Field 'secret_code': Value error, This validation will never pass>
Tool #4: ImpossibleModel
Tool #5: ImpossibleModel
...

It didn't stop. Why?

If we lay out the Strands lifecycle, the normal flow is:

User input → LLM call → toolUse → Tool execution → toolResult → Next LLM call → …

With ordinary tools like sleep, even if a tool call fails partway through, the LLM can choose to stop and give its answer at that point.

That's why the loop converges naturally even when only cancel_tool is used.

On the other hand, when structured_output_model is passed, Strands internally adds a tool that performs Pydantic validation. In the log, this tool appears under the model's name, ImpossibleModel. The agent then tends to keep calling it until validation passes.

With a model that can never pass validation, as in this case, canceling the tool doesn't help. The same tool is requested again on the next turn, so the loop never ends.

If we want to properly stop it, we need to set a flag to stop the event loop itself rather than just canceling the tool.

BeforeToolCallEvent is a hook event that fires right before a tool is executed. The invocation_state it carries is state shared only within the current invocation, and internally, Strands checks an entry in it called request_state.

By setting the stop_event_loop flag to True in request_state, we can keep the event loop from entering its next cycle. I implemented it like this:

def intercept_tool(self, event: BeforeToolCallEvent) -> None:
    tool_name = event.tool_use["name"]
    with self._lock:
        max_tool_count = self.max_tool_counts.get(tool_name)
        tool_count = self.tool_counts.get(tool_name, 0) + 1
        self.tool_counts[tool_name] = tool_count

        if max_tool_count and tool_count > max_tool_count:
            event.cancel_tool = (
                f"Tool '{tool_name}' has been invoked too many times and is now being throttled. "
                f"DO NOT CALL THIS TOOL ANYMORE "
            )
            # Added this line
            event.invocation_state.setdefault("request_state", {})["stop_event_loop"] = True
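The added line relies on plain `dict.setdefault`. The sketch below uses ordinary dicts as stand-ins for `invocation_state`; the `existing_key` entry is made up for illustration. It shows the likely reason `setdefault` is used instead of assigning a fresh dict: `setdefault` creates `request_state` if it is missing and otherwise keeps any entries already there.

```python
# Case 1: request_state does not exist yet, so setdefault creates it
state_without = {}
state_without.setdefault("request_state", {})["stop_event_loop"] = True
print(state_without)  # {'request_state': {'stop_event_loop': True}}

# Case 2: request_state already exists, so setdefault returns the existing dict
state_with = {"request_state": {"existing_key": 1}}
state_with.setdefault("request_state", {})["stop_event_loop"] = True
print(state_with)  # {'request_state': {'existing_key': 1, 'stop_event_loop': True}}

# For contrast: plain assignment would discard existing request_state entries
state_overwritten = {"request_state": {"existing_key": 1}}
state_overwritten["request_state"] = {"stop_event_loop": True}
print(state_overwritten)  # {'request_state': {'stop_event_loop': True}}
```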

Now let's run it with this implementation.

I apologize, but there is currently a lack of available functionality. In particular, to call the "ImpossibleModel" that never passes validation, an appropriate secret code is needed. However, this code is not currently provided.

To resolve this issue, could you please take one of the following actions:

1. **Provide the secret code**
   To call the model, the `secret_code` parameter is required. If you share the code, I can proceed with processing immediately.
2. **Let me know if you need other support**
   I can explain other features that are currently available. For example, I can discuss basic information provision or the use of other tools.

Thank you for your cooperation.

Tool #1: ImpossibleModel
tool_name=<ImpossibleModel> | structured output validation failed | error_message=<Validation failed for ImpossibleModel. Please fix the following errors:
- Field 'secret_code': Value error, This validation will never pass>
Tool #2: ImpossibleModel
tool_name=<ImpossibleModel> | structured output validation failed | error_message=<Validation failed for ImpossibleModel. Please fix the following errors:
- Field 'secret_code': Value error, This validation will never pass>
Tool #3: ImpossibleModel
tool_name=<ImpossibleModel> | structured output validation failed | error_message=<Validation failed for ImpossibleModel. Please fix the following errors:
- Field 'secret_code': Value error, This validation will never pass>

It stopped successfully!! Great!!

Conclusion

In this article, I tested a method to stop Strands Agents after tools are executed a certain number of times.

It's helpful that Strands Agents keeps looping without users having to write retry logic, but behavior that looks like an infinite loop can be scary. With tools that call external APIs or LLMs, costs add up. If you see such behavior, it may be worth putting countermeasures like these in place.

This time I built on the sample from the official documentation, but if there is a better way, please let me know!!

I hope this article was helpful. Thank you for reading until the end!!