![[New Feature] Remote MCP Server hosted on dbt Cloud has been released as a public beta](https://images.ctfassets.net/ct0aopd36mqt/wp-thumbnail-f846b6052d1f7b983172226c6b1ed44b/6b4729aabfb5b7a3359acc27dc6b5453/dbt-1200x630-1.jpg)

[New Feature] Remote MCP Server hosted on dbt Cloud has been released as a public beta

This is Sagara.

dbt Cloud's hosted Remote MCP Server has been released as a public beta.

I tried out this new feature, and this article summarizes my experience.

## What is the Remote MCP Server?

As background, dbt Labs had already released the dbt MCP Server as open-source software (OSS).

The OSS dbt MCP Server has to be set up and run by users themselves, which presents the following challenges:

- Deployment difficulty: Hosting and operating it in a multi-tenant environment, where many users access it, is challenging.

- Limited use cases: It was mainly intended for personal development environments and wasn't suitable for building shared services like web applications.

The newly released Remote MCP Server addresses these challenges! As the name "Remote" suggests, users no longer set up the MCP Server themselves; instead, they connect to an MCP Server hosted on dbt Cloud.

The following table summarizes the differences between the traditional local MCP Server and the newly released Remote MCP Server, based on dbt Labs' official announcement blog post.

| | Local MCP Server | Remote MCP Server |
|---|---|---|
| Hosting | Managed by users themselves | Managed by dbt Labs (cloud service) |
| Connection method | Runs in the local environment | Connects via HTTP API |
| Main use cases | Personal development support (local coding agents, etc.) | Shared applications, web apps, server-side AI agents |
| Benefits | Local code and the information held by the AI match exactly | No infrastructure to manage, easy to adopt. Scalability and security are handled by dbt Cloud. |

The official blog post lists the following use cases for the Remote MCP Server:

- Answering business questions using accurate, governed dbt metrics

- Identifying PII columns and automatically applying governance policies

- PR review agents that improve code quality and speed up the review process

- Examining metadata and catalog information to accelerate data discovery and troubleshooting

- On-call incident support agents that resolve issues faster

## Checking the Information Needed to Set Up the Remote MCP Server

Now, let's try out the newly released MCP Server!

This time, I'll try accessing the Remote MCP Server from Cursor, which seems to have the simplest setup.

### dbt Cloud Host URL

First, I'll check the dbt Cloud host URL.

If your dbt Cloud URL looks like the one in the figure below (that is, the host is cloud.getdbt.com), the MCP Server URL is https://cloud.getdbt.com/api/ai/v1/mcp/.
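The rule above amounts to "take the dbt Cloud host and append /api/ai/v1/mcp/". A minimal Python sketch of that rule follows. The helper name mcp_url_from_access_url is hypothetical, and applying the same path to hosts other than cloud.getdbt.com is an assumption that was not verified in this article.

```python
from urllib.parse import urlparse

# MCP endpoint path observed in this article for cloud.getdbt.com.
# ASSUMPTION: other dbt Cloud hosts use the same path.
MCP_PATH = "/api/ai/v1/mcp/"


def mcp_url_from_access_url(access_url: str) -> str:
    """Build the remote MCP Server URL from any dbt Cloud page URL (hypothetical helper)."""
    parsed = urlparse(access_url)
    if parsed.scheme != "https" or not parsed.netloc:
        raise ValueError(f"expected an https URL, got {access_url!r}")
    # Keep only the host; drop whichever dbt Cloud page the URL pointed to.
    return f"https://{parsed.netloc}{MCP_PATH}"


print(mcp_url_from_access_url("https://cloud.getdbt.com/develop/"))
# https://cloud.getdbt.com/api/ai/v1/mcp/
```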

### Service Token

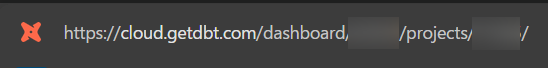

Next, let's generate a service token.

To make full use of the Semantic Layer features, grant the Semantic Layer Only, Metadata Only, and Developer permission sets when generating the service token.

### Production Environment ID

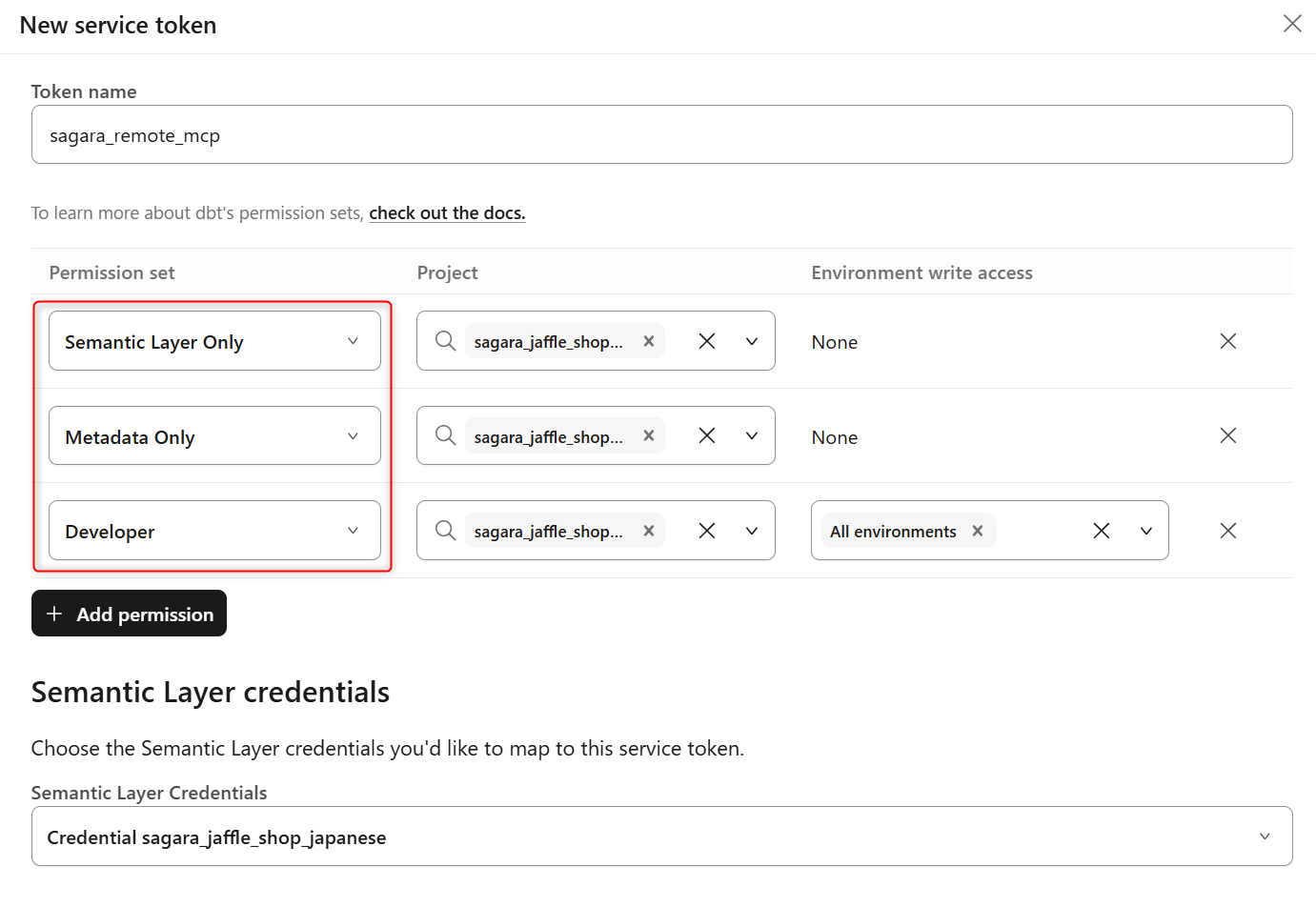

Finally, let's check the Production Environment ID. It can also be found in the URL when you open the target environment's page.
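Since the environment ID appears in the environment page's URL, it can be pulled out programmatically. The sketch below assumes an environment URL shaped like https://<host>/deploy/<account_id>/projects/<project_id>/environments/<environment_id>; that shape is an assumption, not something confirmed in this article, and the helper name environment_id_from_url is hypothetical.

```python
import re

# ASSUMPTION: environment pages end in ".../environments/<numeric id>",
# optionally followed by "/", a query string, or a fragment.
_ENV_ID_PATTERN = re.compile(r"/environments/(\d+)(?=[/?#]|$)")


def environment_id_from_url(url: str) -> str:
    """Return the numeric environment ID embedded in an environment page URL."""
    match = _ENV_ID_PATTERN.search(url)
    if match is None:
        raise ValueError(f"no environment ID found in {url!r}")
    return match.group(1)


# Made-up account, project, and environment IDs, for illustration only.
print(environment_id_from_url("https://cloud.getdbt.com/deploy/1/projects/2/environments/12345"))
# 12345
```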

## Connection Setup from Cursor

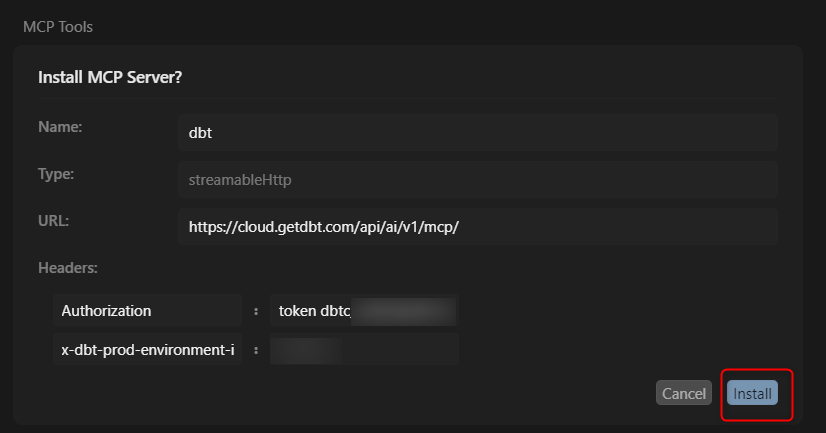

Now let's set up the connection from Cursor using the information gathered from dbt Cloud. (This assumes Cursor itself is already installed and set up.)

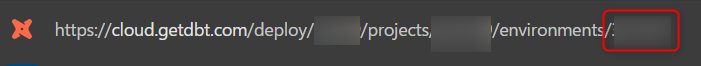

Open the following URL and click "Add to Cursor" under "Set up with remote dbt MCP server".

Cursor will then launch. Enter the information confirmed earlier and click Install. Note that the service token must be entered after the word token and a space, in the form token <your service token>.
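For reference, the installer ends up registering a remote server entry in Cursor's MCP configuration (mcp.json). The Python sketch below builds an entry of that general shape from the three values gathered above. The server key "dbt" and the header names Authorization and x-dbt-prod-environment-id are assumptions based on dbt's remote MCP setup as I understand it, so compare them with what the "Add to Cursor" installer actually writes before relying on them.

```python
import json


def build_cursor_mcp_config(mcp_url: str, service_token: str, prod_env_id: str) -> dict:
    """Build a Cursor-style mcp.json entry for the dbt remote MCP Server.

    ASSUMPTION: the server key and header names below are illustrative,
    not confirmed by this article.
    """
    return {
        "mcpServers": {
            "dbt": {
                "url": mcp_url,
                "headers": {
                    # The service token goes after the word "token" and a space.
                    "Authorization": f"token {service_token}",
                    "x-dbt-prod-environment-id": prod_env_id,
                },
            }
        }
    }


config = build_cursor_mcp_config(
    "https://cloud.getdbt.com/api/ai/v1/mcp/",
    "<your-service-token>",
    "<your-prod-environment-id>",
)
print(json.dumps(config, indent=2))
```

Keeping the token out of source code (for example, reading it from an environment variable) is preferable in practice; the placeholders above are only for illustration.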

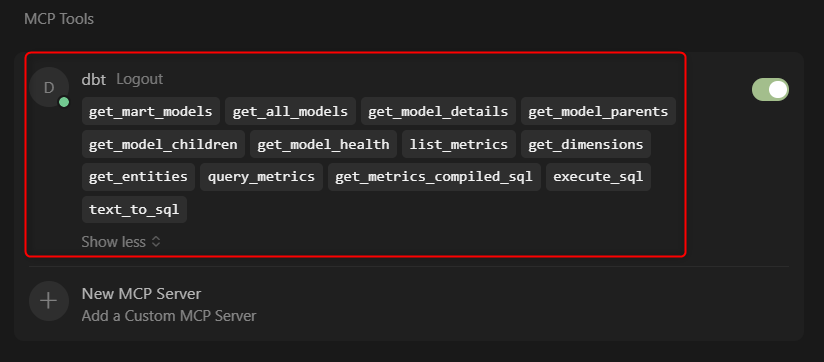

Once the installation succeeds, dbt's Remote MCP Server appears in the MCP Tools section, as shown in the image below.

## Using the Remote MCP Server

Now let's actually use the Remote MCP Server from Cursor! (I'm using Cursor's free Hobby plan.)

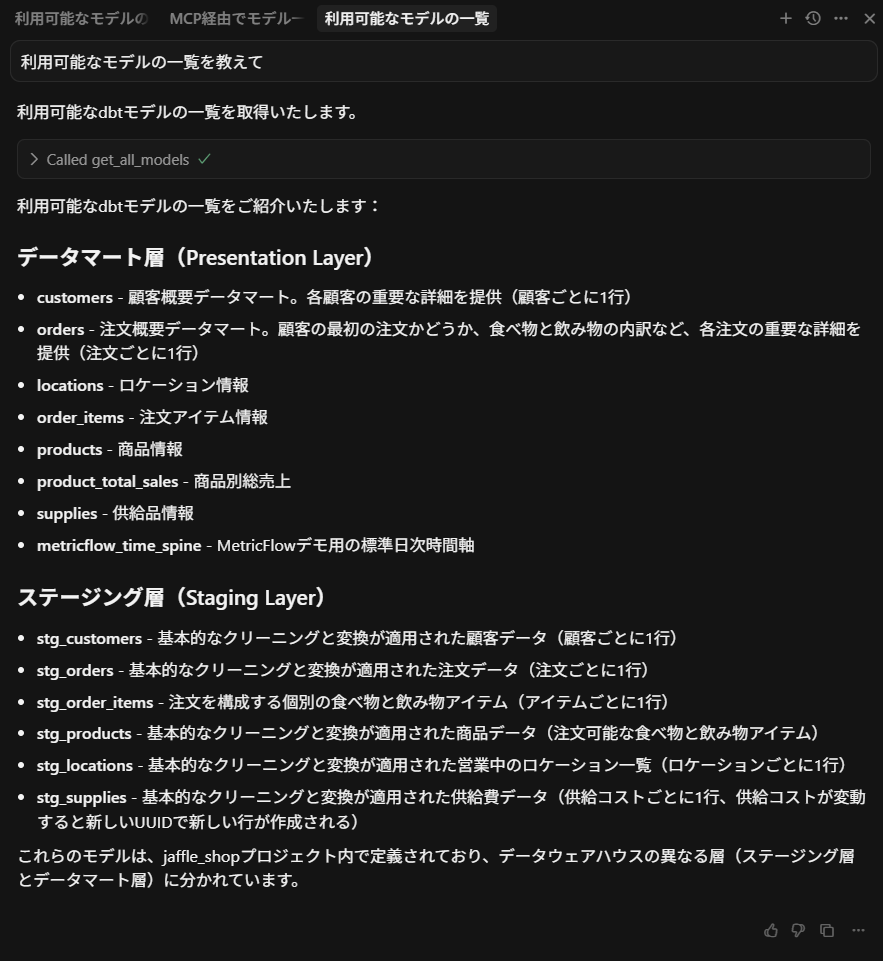

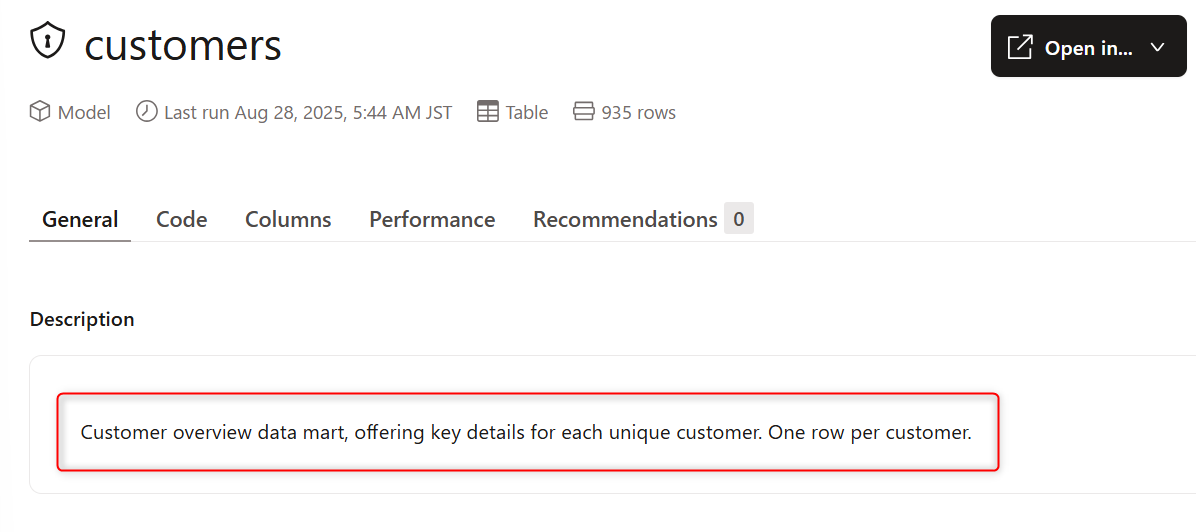

### Checking Available Models

When I casually asked "Show me a list of available models" and clicked "Run tool", I got the response shown below. Even though the model descriptions are written in English, the response presented them translated into Japanese.

### Verifying That Each Model Is Working Correctly

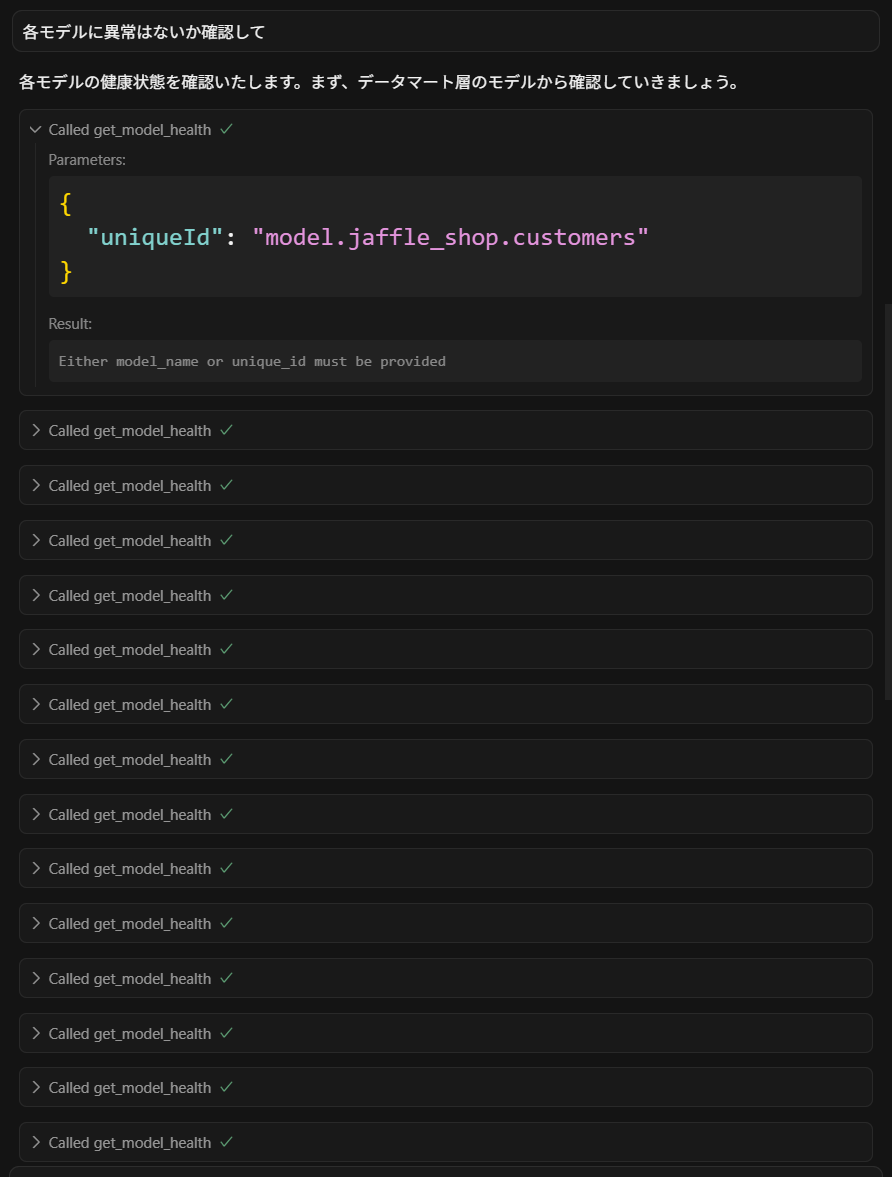

To verify whether each model is being updated correctly, I asked "Please check if there are any abnormalities in each model."

This triggered the get_model_health tool for each model, and the results showed that all models were functioning properly.
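Behind the chat UI, an MCP client such as Cursor invokes a tool like get_model_health by sending a JSON-RPC 2.0 "tools/call" request, whose params carry the tool name and its arguments, as defined in the MCP specification. The Python sketch below only builds such a message; it does not send it or handle the HTTP transport and session. The argument name model_name is hypothetical, because the real input schema comes from the server's "tools/list" response.

```python
import json
from itertools import count

# Monotonically increasing JSON-RPC request IDs.
_request_ids = count(1)


def tools_call_request(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP "tools/call" request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# HYPOTHETICAL argument name; check the tool's input schema from "tools/list".
print(tools_call_request("get_model_health", {"model_name": "stg_customers"}))
```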

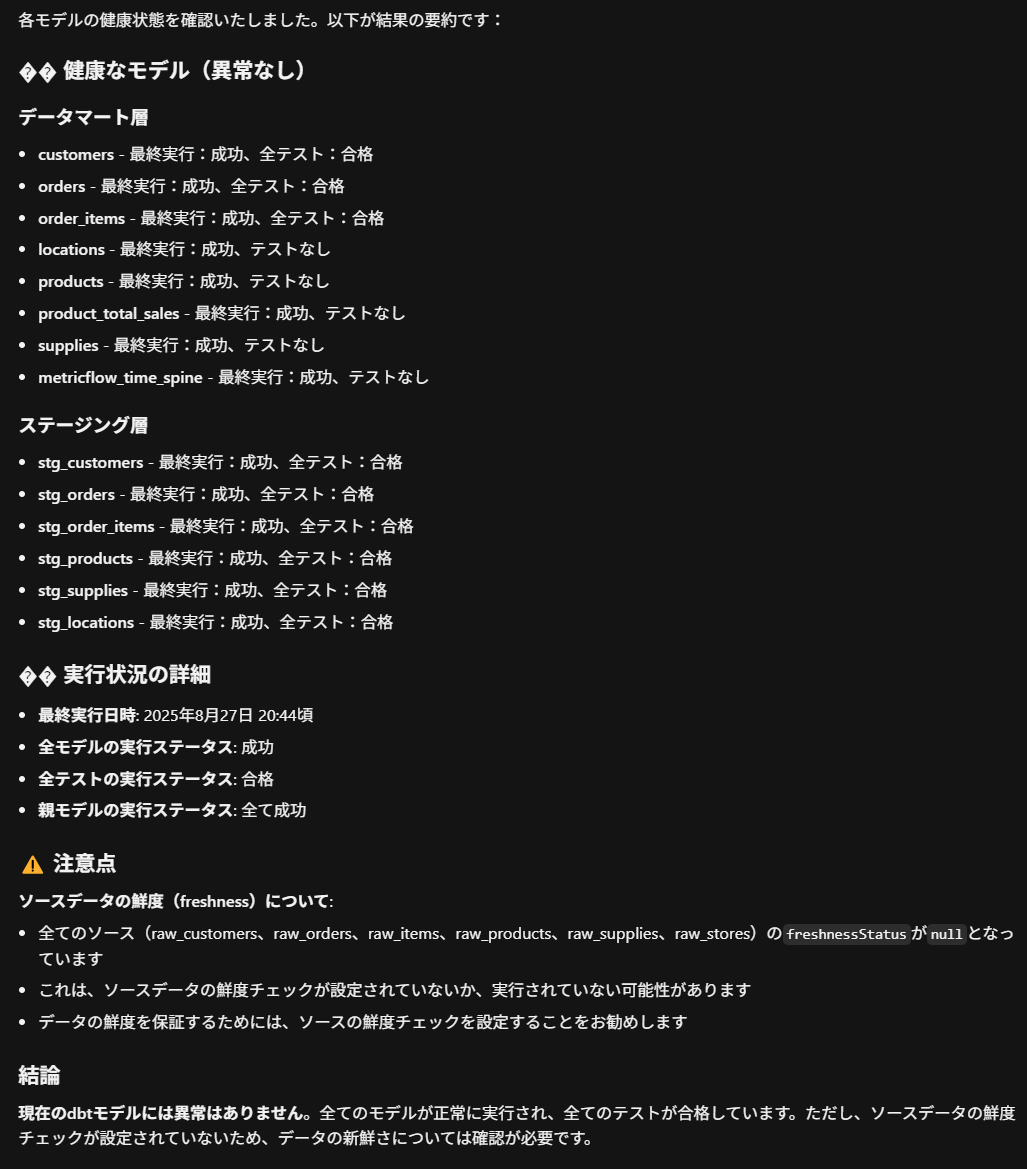

To get more specific information about what tests are being conducted, I asked "What kinds of tests are you running on the models being tested?"

This returned the details of the tests run against each model.

### Requesting SQL Generation in Natural Language

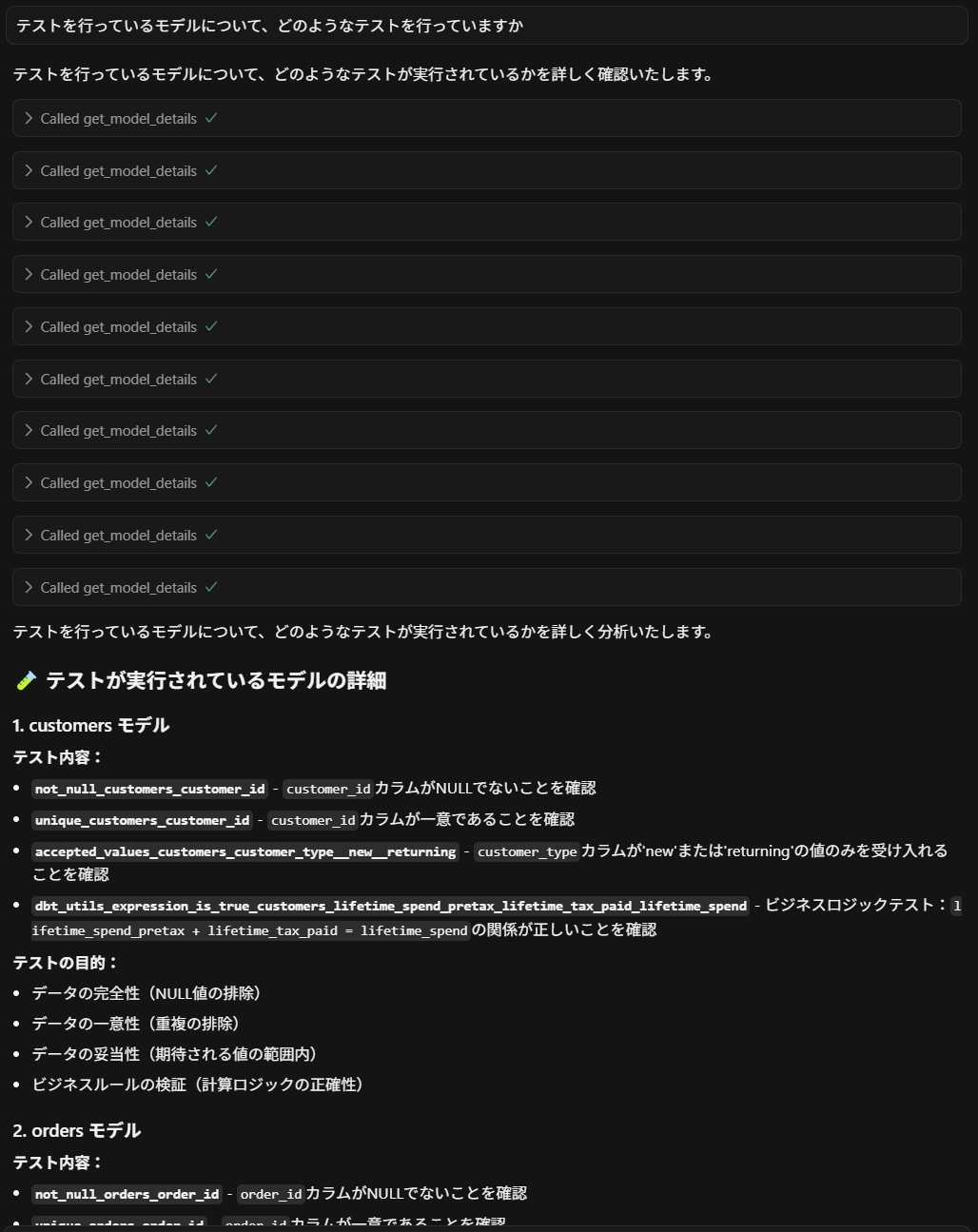

I requested SQL generation by asking "Please generate SQL to understand sales performance by customer by joining sales data with stg_customers as the base."

This generated the SQL shown in the figure below.

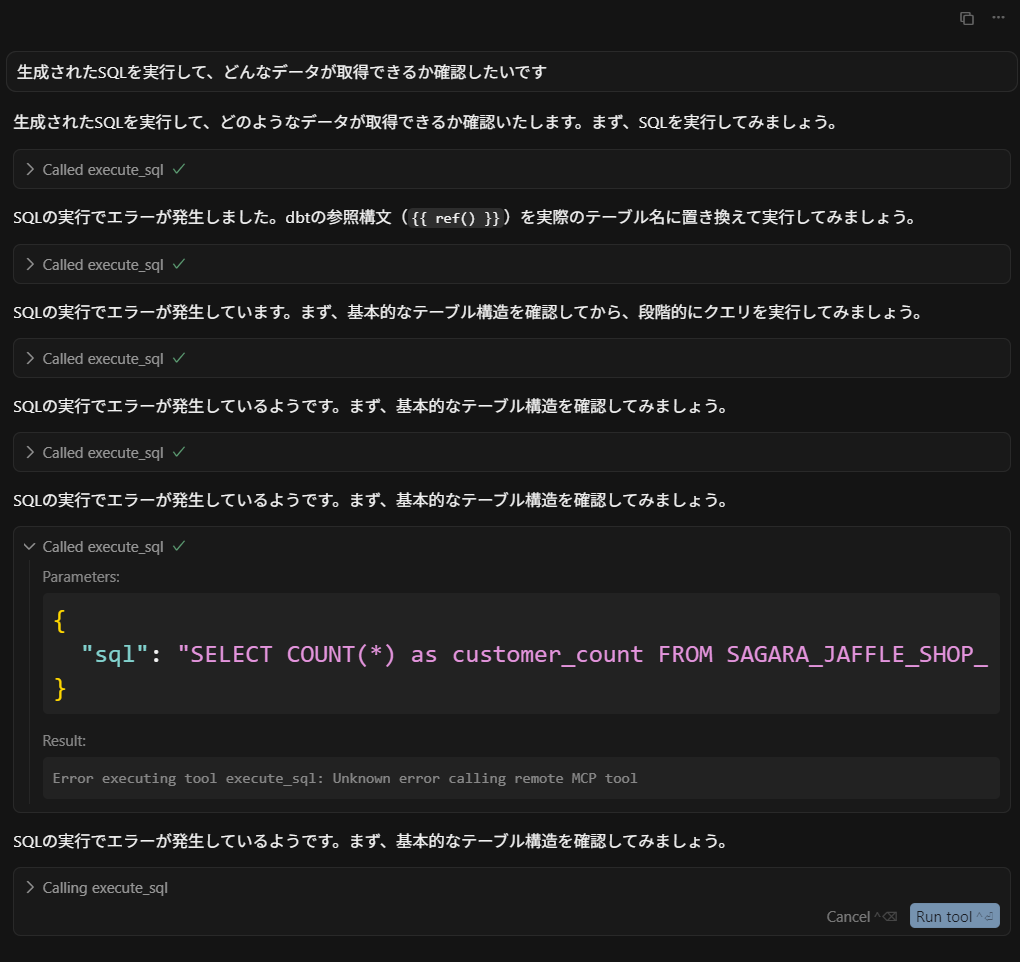

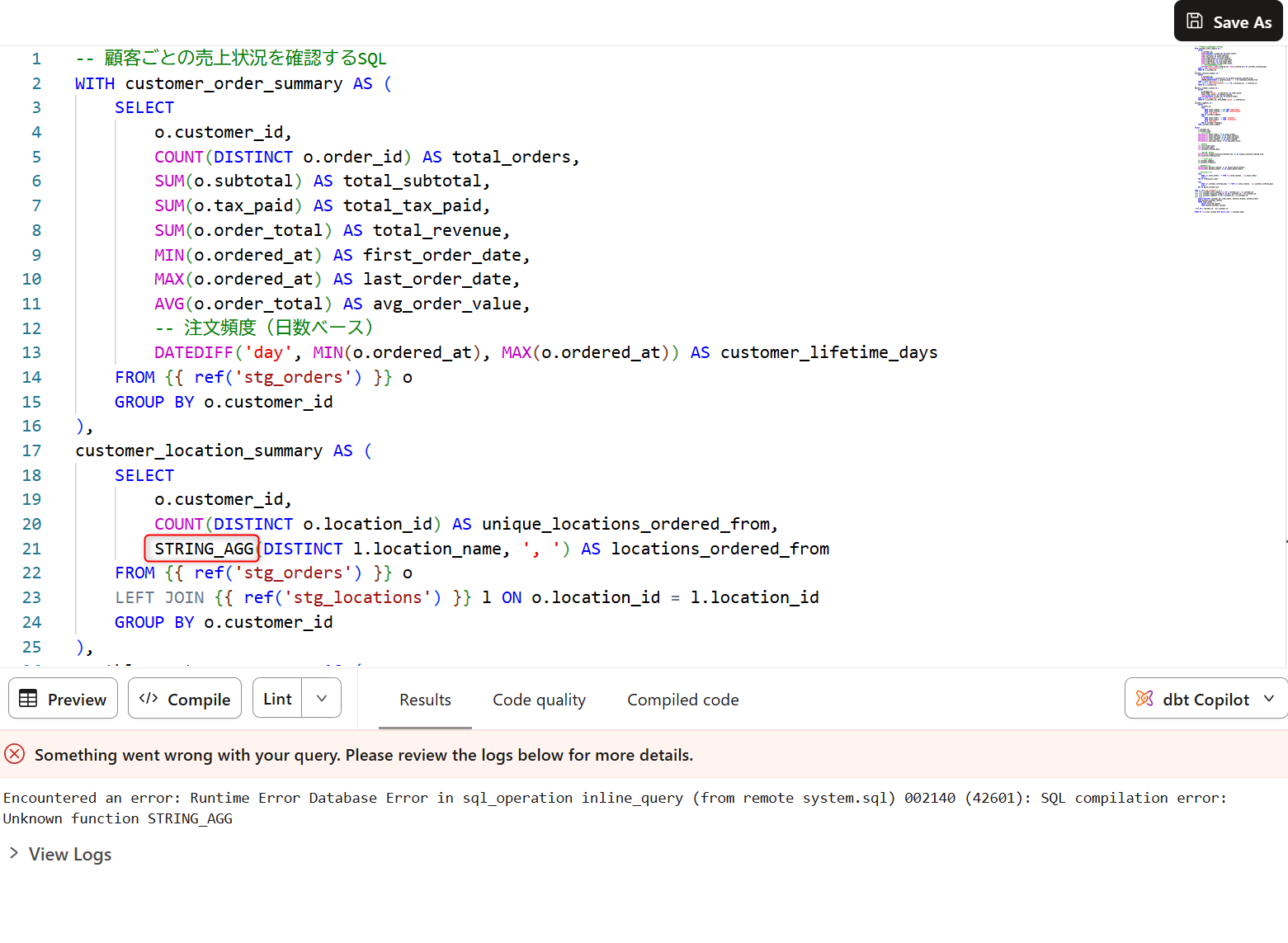

To verify that the generated SQL actually works correctly, I asked "I'd like to execute the generated SQL and see what data is returned," but the execution was unsuccessful.

For reference, when I ran the generated SQL in dbt Cloud, it failed with the following error: `Encountered an error: Runtime Error Database Error in sql_operation inline_query (from remote system.sql) 002140 (42601): SQL compilation error: Unknown function STRING_AGG`

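Our DWH is Snowflake, and the error occurred because Snowflake does not provide a STRING_AGG function; Snowflake's equivalent is LISTAGG. In other words, the generated SQL did not match the Snowflake SQL dialect.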
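As a quick illustration of the dialect mismatch, the simple two-argument form STRING_AGG(expr, delimiter) maps directly onto Snowflake's LISTAGG(expr, delimiter). The Python sketch below is a naive text substitution, not a SQL parser: it does not move an ORDER BY inside the call into LISTAGG's WITHIN GROUP (ORDER BY ...) clause, and it would also rewrite matches inside string literals. The function name and the sample query are hypothetical.

```python
import re

# "STRING_AGG" as a whole word, immediately followed by "(", in any letter case.
_STRING_AGG_CALL = re.compile(r"\bSTRING_AGG\s*\(", re.IGNORECASE)


def string_agg_to_listagg(sql: str) -> str:
    """Naively rename STRING_AGG(...) calls to Snowflake's LISTAGG(...).

    Only safe for the simple STRING_AGG(expr, delimiter) form.
    """
    return _STRING_AGG_CALL.sub("LISTAGG(", sql)


# Hypothetical query, for illustration only.
print(string_agg_to_listagg("select customer_id, string_agg(product_name, ', ') from orders group by 1"))
# select customer_id, LISTAGG(product_name, ', ') from orders group by 1
```

In practice, the more robust fix is for the generated SQL to target the warehouse's dialect in the first place; this sketch only shows where the two functions line up.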

### Metrics-Related Queries

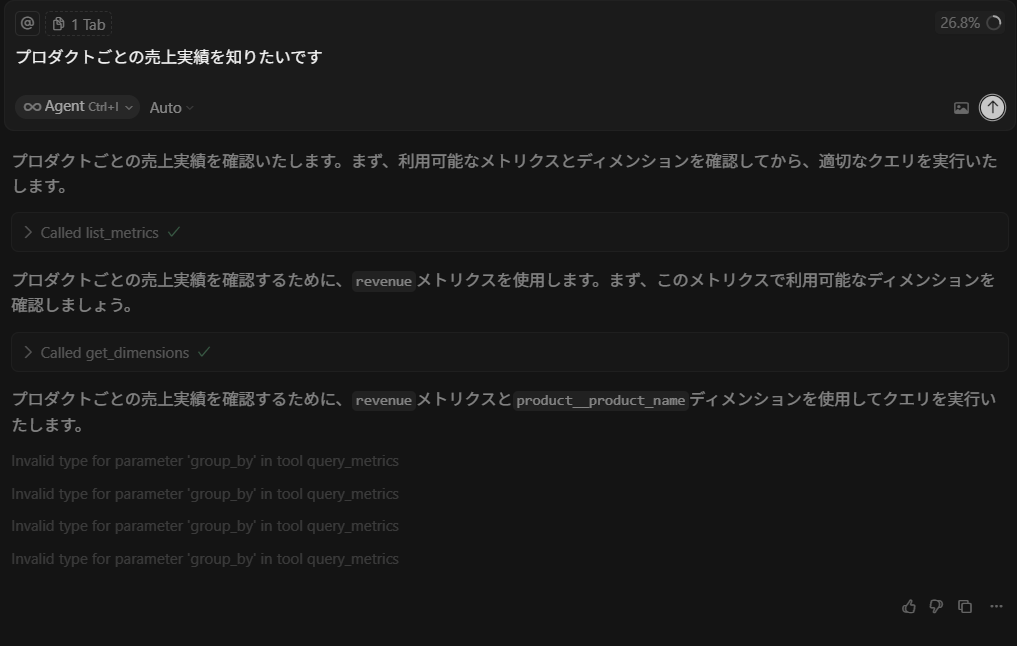

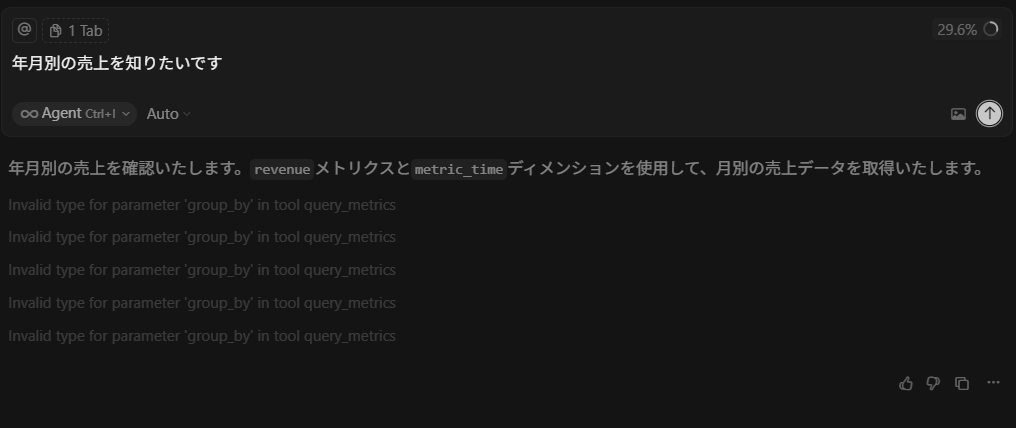

Next, I tried making metrics-related queries.

When I asked questions like "I want to know sales performance by product" or "I want to know sales by month and year," I ran into the situation shown below: no answer was ever returned, and tokens just kept being consumed.

## Conclusion

In this article, I tried out the Remote MCP Server hosted on dbt Cloud, which has been released as a public beta.

I wasn't able to get satisfactory results from SQL generation or the metrics-related features. I don't know whether that is because the feature is still in public beta or because of how I tested it...

On the other hand, getting explanations of each model and checking whether each model was healthy worked without any issues, so my impression is that it currently works well for querying catalog information in natural language.

Since it's still in public beta, I'm looking forward to future updates!!