I researched AWS AI Factory #AWSreInvent

Hello! I'm Takakuni (@takakuni_) from the Cloud Business Division Consulting Department.

AWS AI Factory was announced at re:Invent 2025.

Even though it's the end of the year, I'd like to discuss this update.

AWS AI Factory

Simply put, AWS AI Factory is an offering in which AWS deploys its own physical servers in your data center.

It is intended for cases where you have data that absolutely cannot be moved to the cloud for data protection reasons, but that you still want to put to use as an asset.

If you were to build this yourself on-premises, you would need to procure servers (GPUs) and power, select the optimal AI models, and obtain licenses from multiple AI providers.

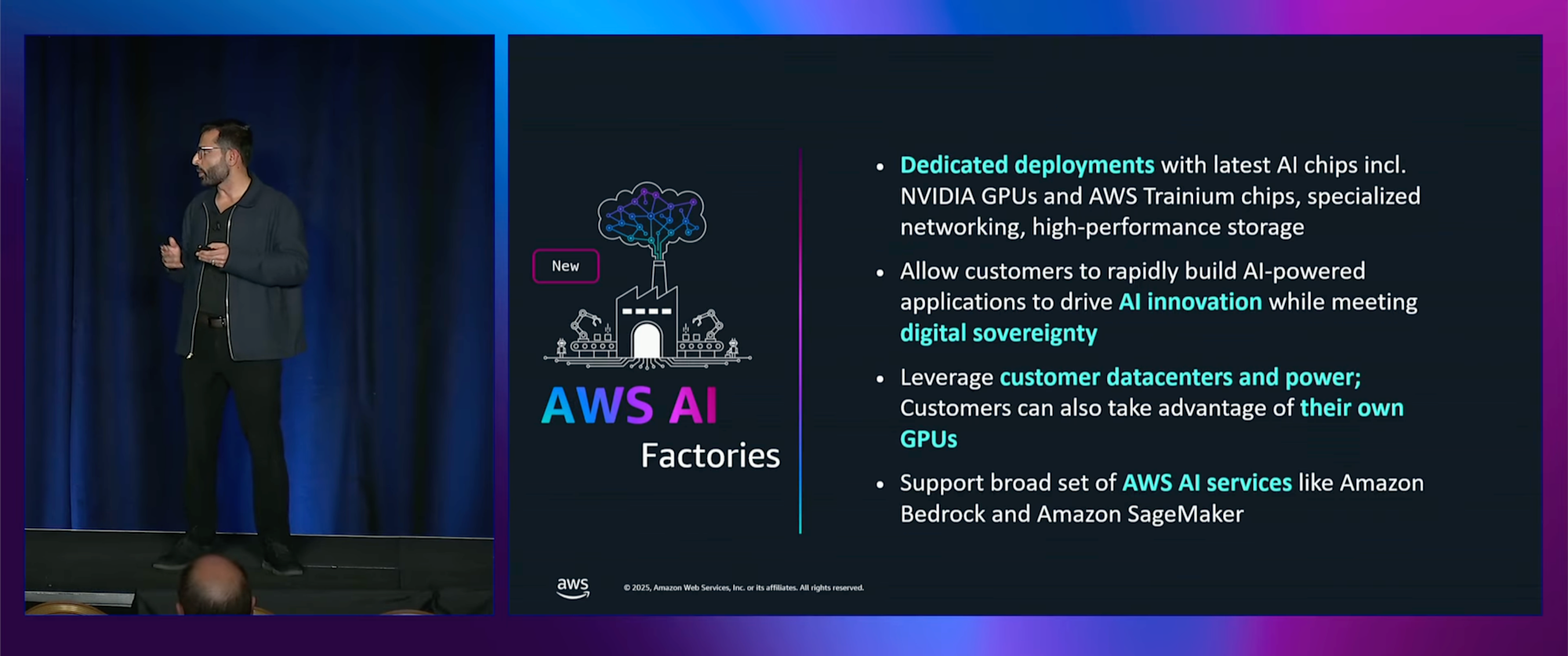

With this service, AWS appears to handle the procurement and deployment of these resources. Detailed information has not been published, but the split of responsibilities is that the customer provides space and power while AWS manages the infrastructure.

Customers provide data center space and power capacity they already have, and AWS deploys and manages the infrastructure.

Services available through AWS AI Factory include Amazon Bedrock and SageMaker AI. (For Amazon Bedrock, I'm also curious which models will be available.)

Using integrated AWS AI services like Amazon Bedrock and Amazon SageMaker, you can immediately access key foundation models without having to negotiate individual contracts with each model provider.

It also supports both NVIDIA GPUs and Trainium chips, so there appears to be a wide range of hardware options.

By combining the latest AWS Trainium accelerators and NVIDIA GPUs, dedicated low-latency networks, high-performance storage, and AWS AI services, AI Factories can shorten AI development by months or years compared to building it independently.

AI Zone

I'll also touch on AI Zone.

AWS has entered a strategic partnership with Saudi Arabia-based HUMAIN to build dedicated AWS AI infrastructure, called an "AI Zone," in a data center purpose-built by HUMAIN, with up to 150,000 AI chips (including GB300 GPUs).

In his keynote, CEO Matt Garman positioned AWS AI Factory as an answer to the question, "Can we provide this AI Zone to more customers?"

Other Information

I looked through the re:Invent sessions for more detailed information and found some in "Build generative and agentic AI applications on-premises & at the edge (HMC308)". (If you're interested, check it out as well.)

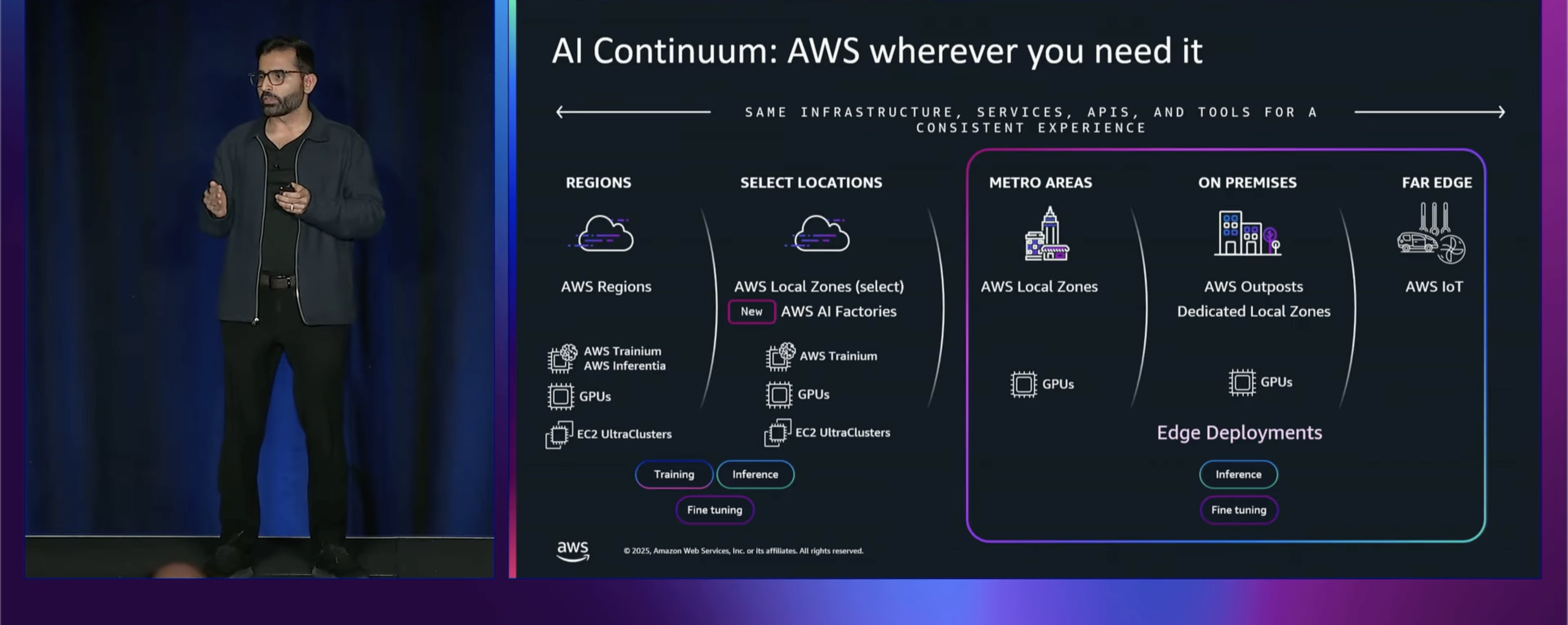

In the session, AI Factory is presented as an offering deployed in the customer's data center and positioned separately from Local Zones and Outposts.

It seems that customers' own GPUs can also be used.

It looks like it could be useful in cases that call for larger-scale expansion.

For Details, Contact Your AWS Account Team

For more details, it seems you need to ask your AWS sales representative. I'm curious about how flexible the offering is (and, of course, the pricing).

Summary

That was "I researched AWS AI Factory."

Information is still scarce, but the pattern of AWS managing servers inside a customer's data center is intriguing.

This has been Takakuni (@takakuni_) from the Cloud Business Division Consulting Department!