# I Tried a VCS-Triggered Apply with a Working Directory Set in HCP Terraform

## Introduction

Hello, this is Watanabe from the Data Business Division.

In this post, I use HCP Terraform to trigger a run automatically when a branch update changes files under a Workspace's Working Directory.

The motivation is a recent need to rethink how we operate our Workspaces: we want to choose which resources each Workspace applies, and to define variables in per-Workspace tfvars files rather than in HCP Terraform Variables.

Until now, we have triggered Terraform runs on merges to the main branch, with the repository root as the Terraform root directory, but that setup cannot meet these requirements.

While looking through HCP Terraform for a solution, I found the Terraform Working Directory and Automatic Run triggering options in Version Control.

Thinking this might work, I decided to try it right away.

## Let's Try It

### Creating Terraform Configuration Files

Here is the directory structure of the Terraform code, which is managed on GitHub.

```
.
├── modules
│   ├── s3
│   │   ├── main.tf
│   │   └── variables.tf
│   └── sns
│       ├── main.tf
│       └── variables.tf
└── workspace
    ├── test-a
    │   ├── README.md
    │   ├── main.tf
    │   ├── terraform.auto.tfvars
    │   └── variables.tf
    └── test-b
        ├── README.md
        ├── main.tf
        ├── terraform.auto.tfvars
        └── variables.tf
```

The test-a directory calls modules/s3 to create a bucket, while the test-b directory calls modules/sns to create an SNS topic.

Below are excerpts from three files that create S3 buckets. For SNS, we similarly define variables in the workspace side and pass them to the modules.

```hcl
# modules/s3/main.tf
resource "aws_s3_bucket" "this" {
  bucket = var.bucket_name

  tags = {
    Name = var.bucket_name
  }
}
```

```hcl
# workspace/test-a/main.tf
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }
}

provider "aws" {
  region = "ap-northeast-1"
}

module "s3_bucket" {
  source      = "../../modules/s3"
  bucket_name = var.bucket_name
}
```

```hcl
# workspace/test-a/terraform.auto.tfvars
bucket_name = "test-a-cm-watanabe"
```
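
The excerpts above reference `var.bucket_name`, but the `variables.tf` files themselves are not shown. As a minimal sketch, assuming both `modules/s3/variables.tf` and `workspace/test-a/variables.tf` declare the same single input, the declaration might look like this (the description text is my own wording, not taken from the original files):

```hcl
# Hypothetical contents of variables.tf (not shown in the original excerpts).
# The same declaration is needed in both the module directory and the
# workspace directory, because each is a separate Terraform module with
# its own inputs.
variable "bucket_name" {
  type        = string
  description = "Name of the S3 bucket to create"
}
```

Because files named `*.auto.tfvars` are loaded automatically, the value in `terraform.auto.tfvars` reaches the workspace-side variable without any extra flags, and the `module` block then passes it on to `modules/s3`.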

### HCP Terraform Configuration

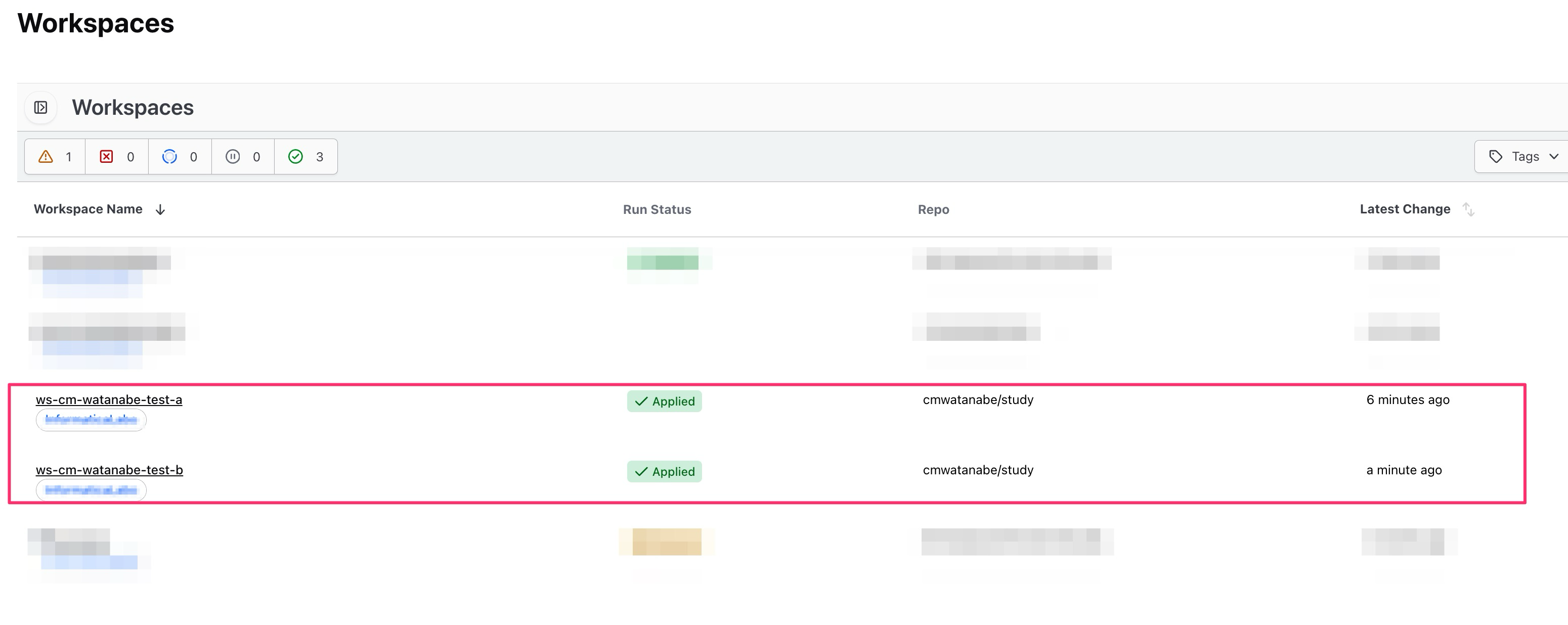

We prepared two Workspaces in HCP Terraform.

For GitHub integration, refer to the following:

https://dev.classmethod.jp/articles/tfe-provider-hcp-terraform-github/

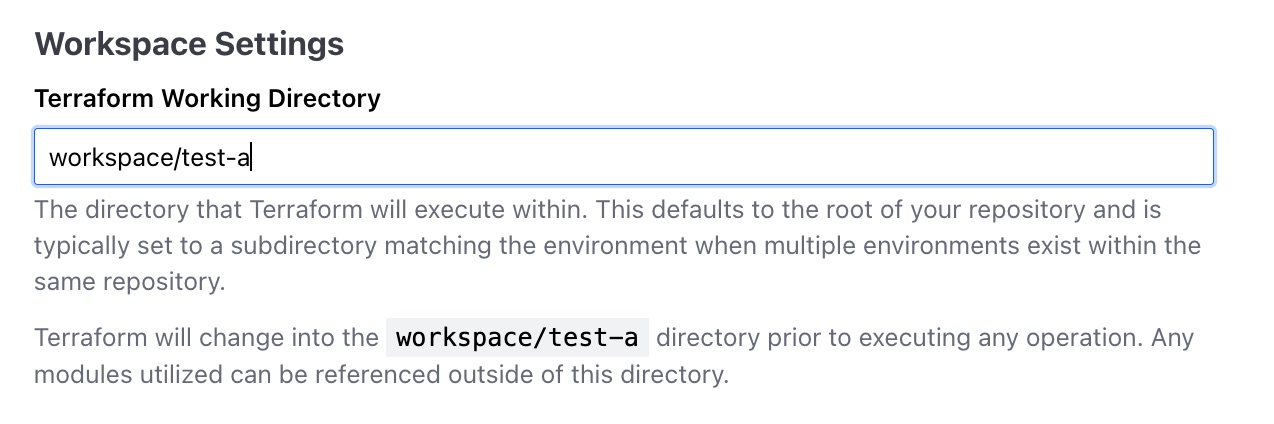

Now for the key configuration: set your desired working directory in the `Terraform Working Directory` field.

Terraform will init, plan, and apply based on the configuration files in this directory.

Because we prepared one directory per Workspace, we set each Workspace's working directory to its own directory (workspace/test-a and workspace/test-b).

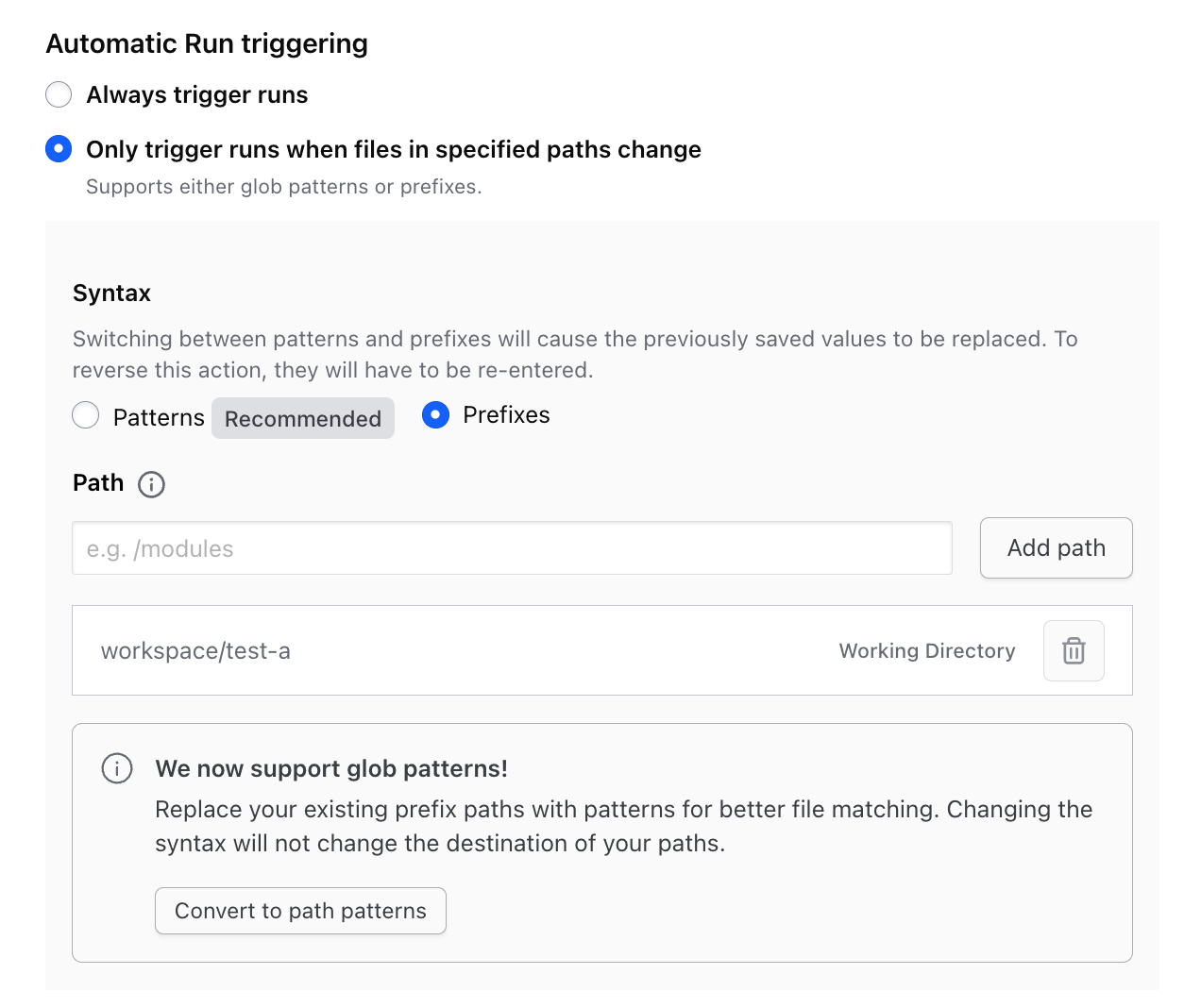

Next, in the `Automatic Run triggering` settings, select `Only trigger runs when files in specified paths change`.

This setting controls which paths in the VCS repository trigger a Terraform run when they change.

Because we want a run only when files under the Working Directory change, we select Prefixes. The Working Directory is filled in automatically, so we leave it as is.
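
I configured all of this in the UI, but the same settings can also be expressed as code. The following is a rough sketch, assuming the `hashicorp/tfe` provider is used to manage the Workspaces; the three input variables (`organization`, `vcs_repo_identifier`, `oauth_token_id`) are hypothetical placeholders, and the `main` branch is an assumption based on our existing setup.

```hcl
terraform {
  required_providers {
    tfe = {
      source = "hashicorp/tfe"
    }
  }
}

# Hypothetical inputs: replace with your own organization, repository,
# and VCS OAuth token ID.
variable "organization" {
  type = string
}

variable "vcs_repo_identifier" {
  type        = string
  description = "GitHub repository in <owner>/<repo> form"
}

variable "oauth_token_id" {
  type = string
}

locals {
  # One HCP Terraform Workspace per directory under workspace/.
  workspaces = toset(["test-a", "test-b"])
}

resource "tfe_workspace" "this" {
  for_each     = local.workspaces
  name         = each.key
  organization = var.organization

  # Directory where Terraform runs init, plan, and apply.
  working_directory = "workspace/${each.key}"

  # Only start runs when files under the listed prefixes change.
  file_triggers_enabled = true
  trigger_prefixes      = ["workspace/${each.key}"]

  vcs_repo {
    identifier     = var.vcs_repo_identifier
    branch         = "main" # assumed branch name
    oauth_token_id = var.oauth_token_id
  }
}
```

One thing to keep in mind with this layout: the modules live outside the working directory (`../../modules/s3`), so a change made only under `modules/` would not match this prefix. If module changes should also start runs, adding a path such as `modules/s3` to `trigger_prefixes` is one option.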

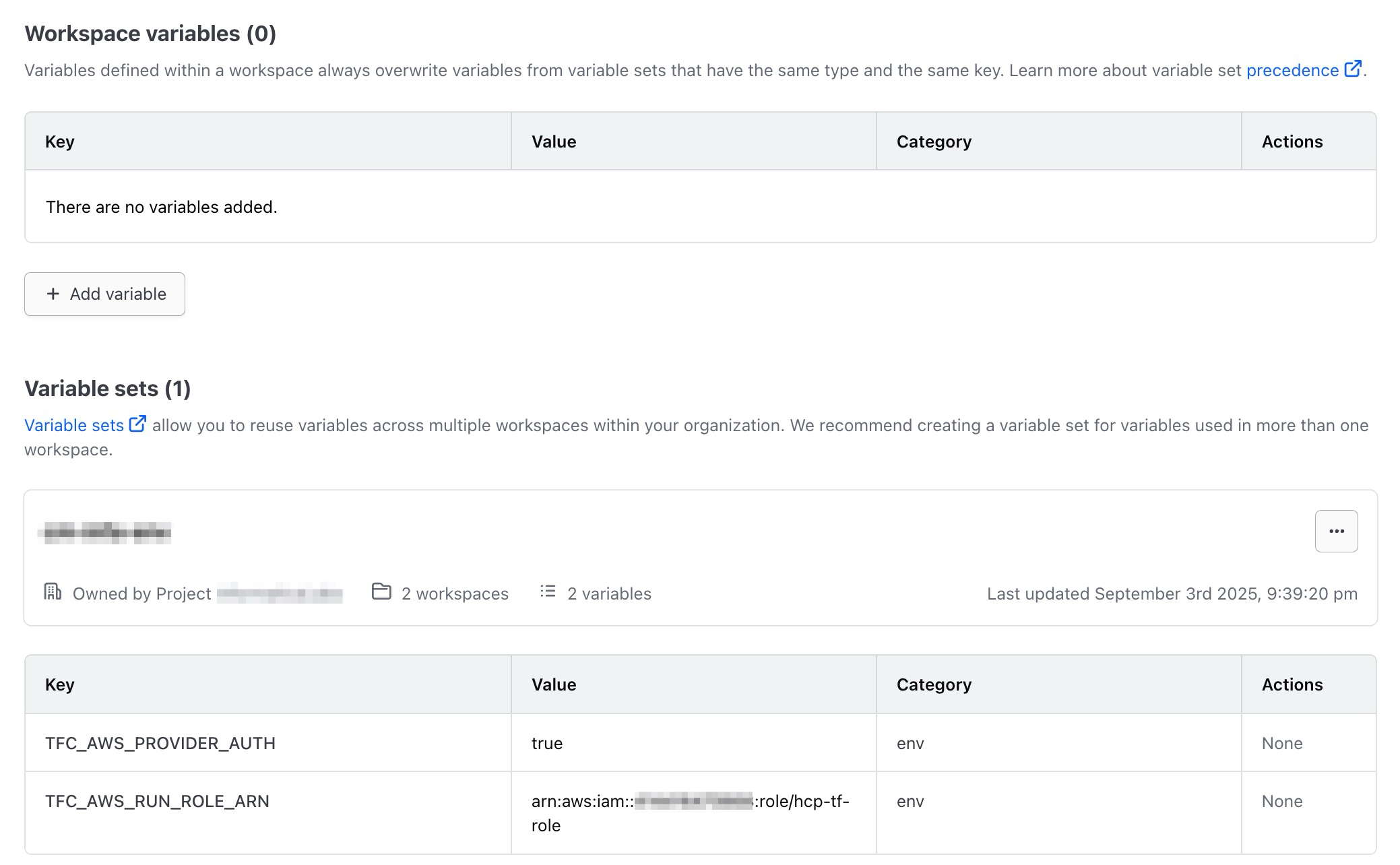

In each Workspace's Variables, we set the Environment Variables for the credentials that HCP Terraform needs to operate on AWS resources.

This requires OIDC integration with AWS, which was already set up in my environment.

For detailed steps, please refer to:

https://developer.hashicorp.com/terraform/cloud-docs/workspaces/dynamic-provider-credentials/aws-configuration
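
For reference, here is a sketch of what those Environment Variables could look like if managed with the `hashicorp/tfe` provider. It assumes the dynamic provider credentials setup described in the linked documentation, which uses the `TFC_AWS_PROVIDER_AUTH` and `TFC_AWS_RUN_ROLE_ARN` variables. The `organization` and `aws_run_role_arn` inputs are hypothetical placeholders, and the IAM role is assumed to already trust HCP Terraform's OIDC provider.

```hcl
# Hypothetical inputs; the IAM role must already exist and trust
# HCP Terraform's OIDC provider.
variable "organization" {
  type = string
}

variable "aws_run_role_arn" {
  type        = string
  description = "ARN of the IAM role that HCP Terraform assumes during runs"
}

# Look up the existing Workspace (test-a here; repeat for test-b).
data "tfe_workspace" "test_a" {
  name         = "test-a"
  organization = var.organization
}

# Enables dynamic provider credentials for the AWS provider.
resource "tfe_variable" "aws_provider_auth" {
  workspace_id = data.tfe_workspace.test_a.id
  key          = "TFC_AWS_PROVIDER_AUTH"
  value        = "true"
  category     = "env"
}

# Tells HCP Terraform which role to assume for plan and apply.
resource "tfe_variable" "aws_run_role_arn" {
  workspace_id = data.tfe_workspace.test_a.id
  key          = "TFC_AWS_RUN_ROLE_ARN"
  value        = var.aws_run_role_arn
  category     = "env"
}
```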

I did not configure any other Variables, because the remaining values are passed in from terraform.auto.tfvars.

This completes the Workspace configuration.

### Execution

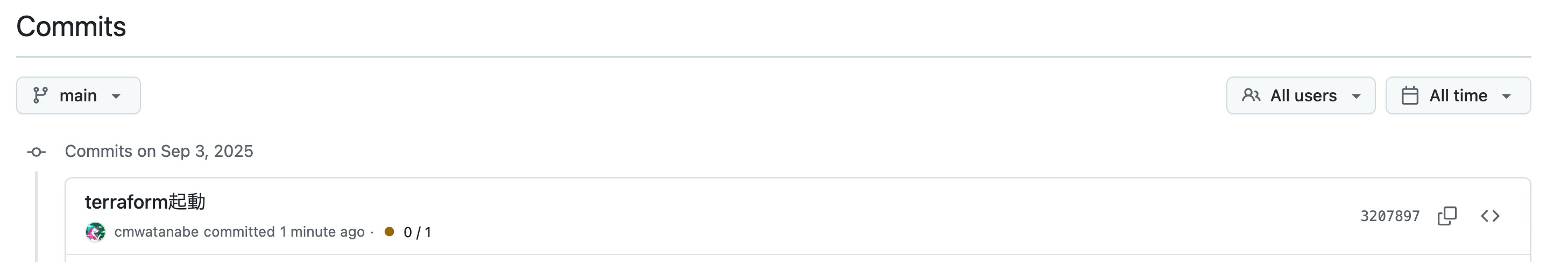

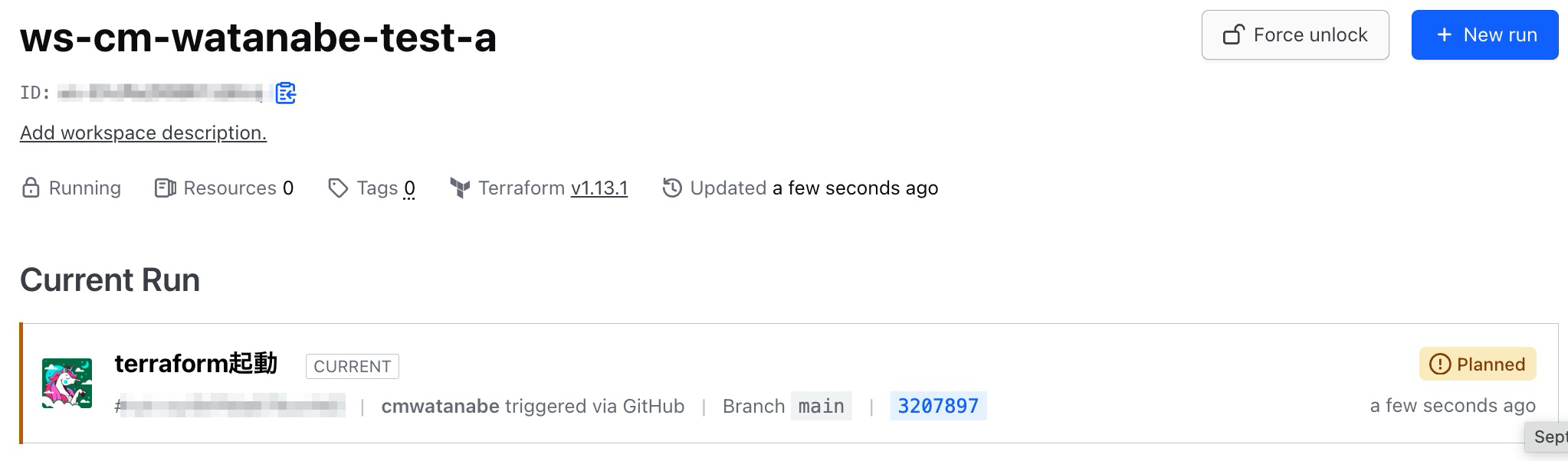

To trigger a run, I added a README.md file to the test-a working directory (./workspace/test-a) on GitHub.

The plan ran successfully.

No run was triggered for the test-b Workspace, so the working directory setting is being respected.

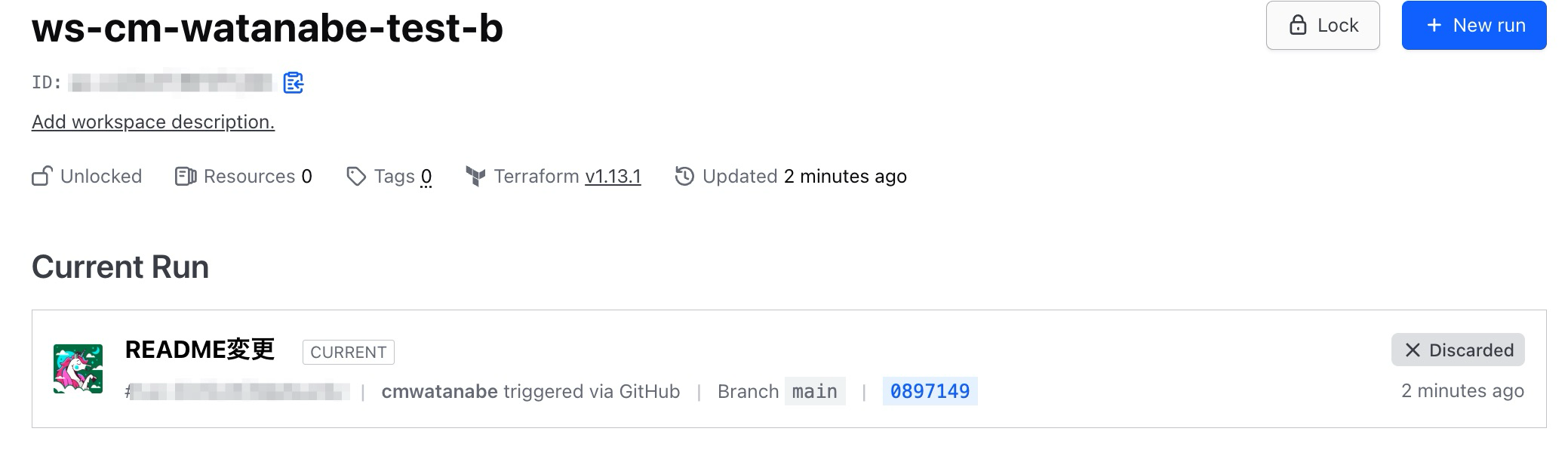

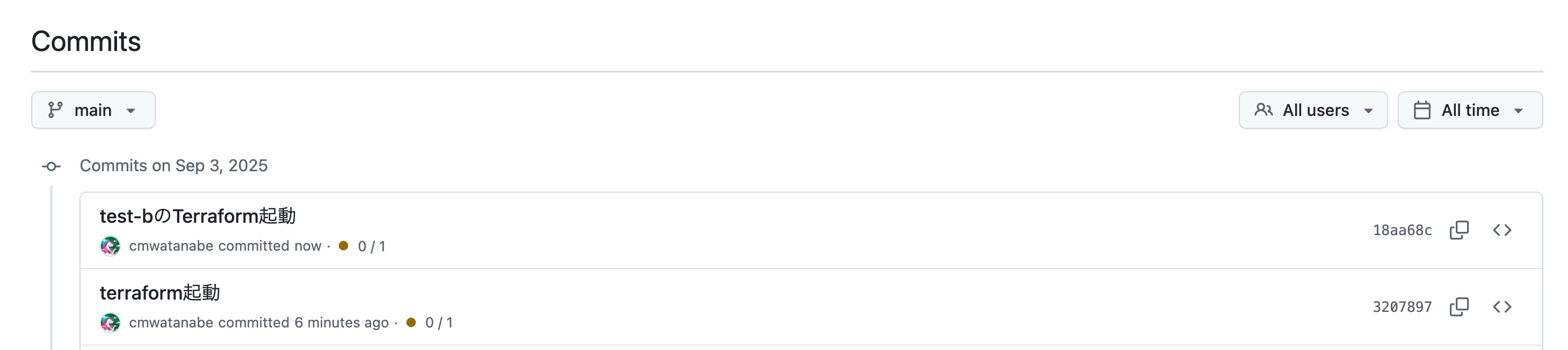

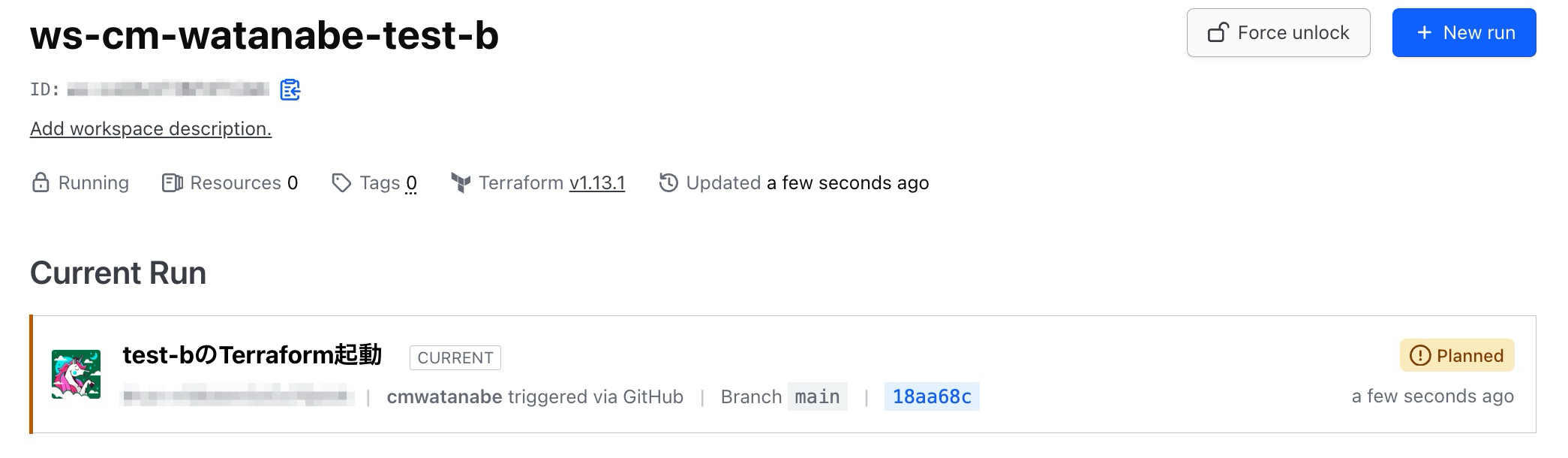

I then added a README to the test-b working directory (./workspace/test-b) in the same way.

This time, a run started for the test-b Workspace.

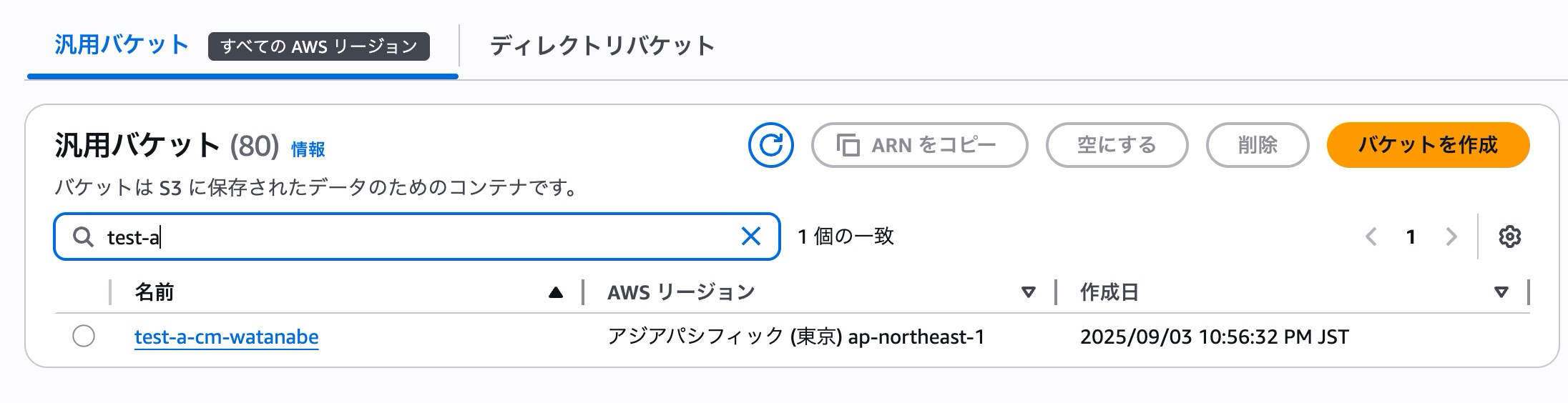

After applying both, I checked the S3 console and confirmed that the bucket from test-a had been created.

## In conclusion

How was it?

When you manage modules per Workspace and keep separate working directories in HCP Terraform/Terraform Enterprise, setting the Working Directory on each Workspace seems to be a good approach.

By the way, I had previously thought that Terraform OSS Workspaces and HCP Terraform/Terraform Enterprise Workspaces were the same thing, but I realized they should be understood as different concepts.

The former is a unit of state separation. The latter is the unit required for managing resources in HCP Terraform/Terraform Enterprise, and it defines an environment: beyond state separation, it also covers variables, run history, policies, and permissions.

My understanding is that OSS Workspaces excel at managing resources with state separation using the same Terraform configuration, and when deploying multiple environments, using Workspaces allows you to reuse plugins.
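
To illustrate the OSS side of this comparison, here is a minimal sketch of one configuration shared across several CLI workspaces. The naming scheme is hypothetical and is not something I used in this verification.

```hcl
# A single configuration shared by every CLI workspace
# (workspaces are created with `terraform workspace new <name>`).
locals {
  # terraform.workspace evaluates to the name of the currently selected
  # workspace, for example "default", "test-a", or "test-b".
  bucket_name = "${terraform.workspace}-cm-example" # hypothetical naming scheme
}

resource "aws_s3_bucket" "this" {
  bucket = local.bucket_name
}
```

Each workspace keeps its own state, while the code and the initialized `.terraform` directory, including the downloaded providers, are shared.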

On the other hand, deployments using separate working directories don't reuse plugins but install them for each directory.

Therefore, I had thought that Workspaces and working directories were mutually exclusive.

However, in HCP Terraform/Terraform Enterprise, working directories serve a complementary role as pointers to code locations.

Combining Workspaces with working directories therefore allows flexible management even in a monorepo, where a single repository holds the code for multiple sets of resources.

That's all.

I hope this is helpful to someone.