![[Update] Terraform's module for AWS Transfer Family now supports SFTP connectors.](https://images.ctfassets.net/ct0aopd36mqt/wp-thumbnail-dd57f8260eaf825c214225faa0278acc/c6d3e347c61a60abea783c1132a311be/aws-transfer-family)

# [Update] Terraform's module for AWS Transfer Family now supports SFTP connectors.

## Introduction

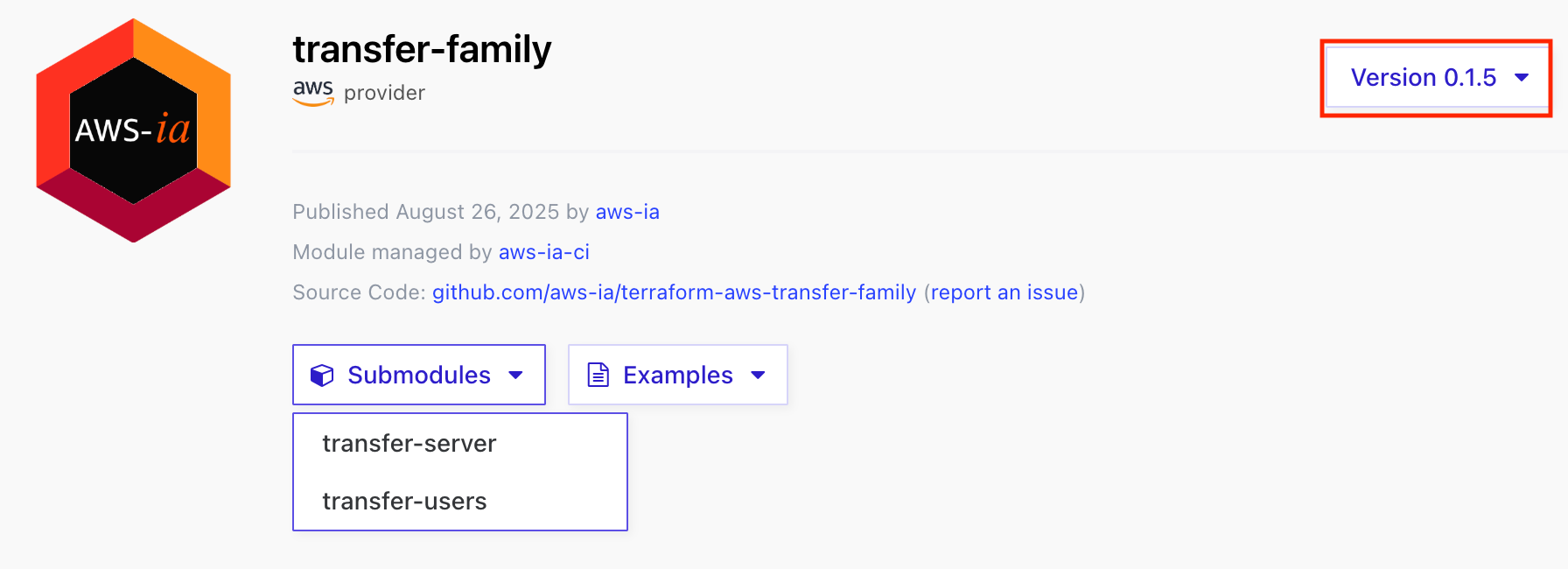

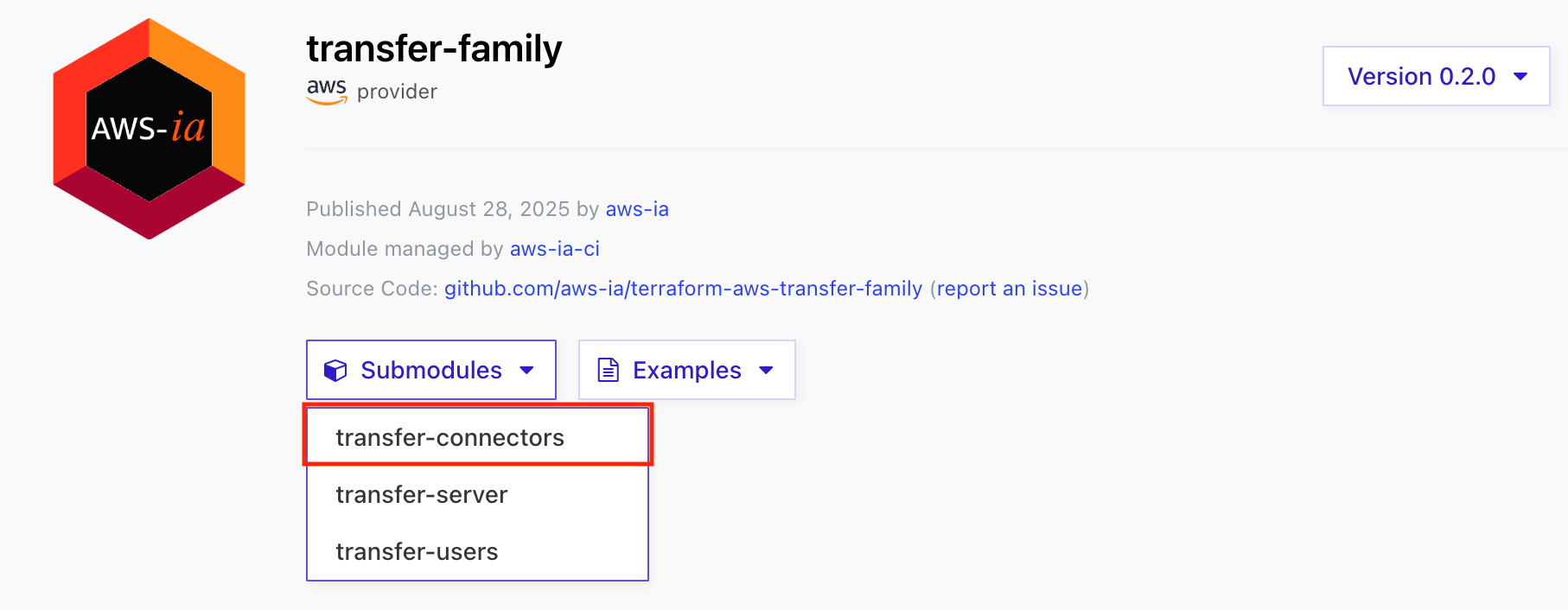

The other day, a submodule for SFTP connectors was added to the Terraform module for AWS Transfer Family.

SFTP connectors are a Transfer Family feature released about two years ago. They let you transfer files between S3 and an SFTP server without preparing a separate SFTP client.

The module initially covered Transfer Family SFTP servers. With the new connector submodule, SFTP connectors can now be created with Terraform as well.

In this article, we'll be using version 0.2.4, though support has been available since v0.2.0.

## Let's Use It

Let's actually use it and deploy it.

The documentation and repository are as follows:

https://registry.terraform.io/modules/aws-ia/transfer-family/aws/

### Parameters

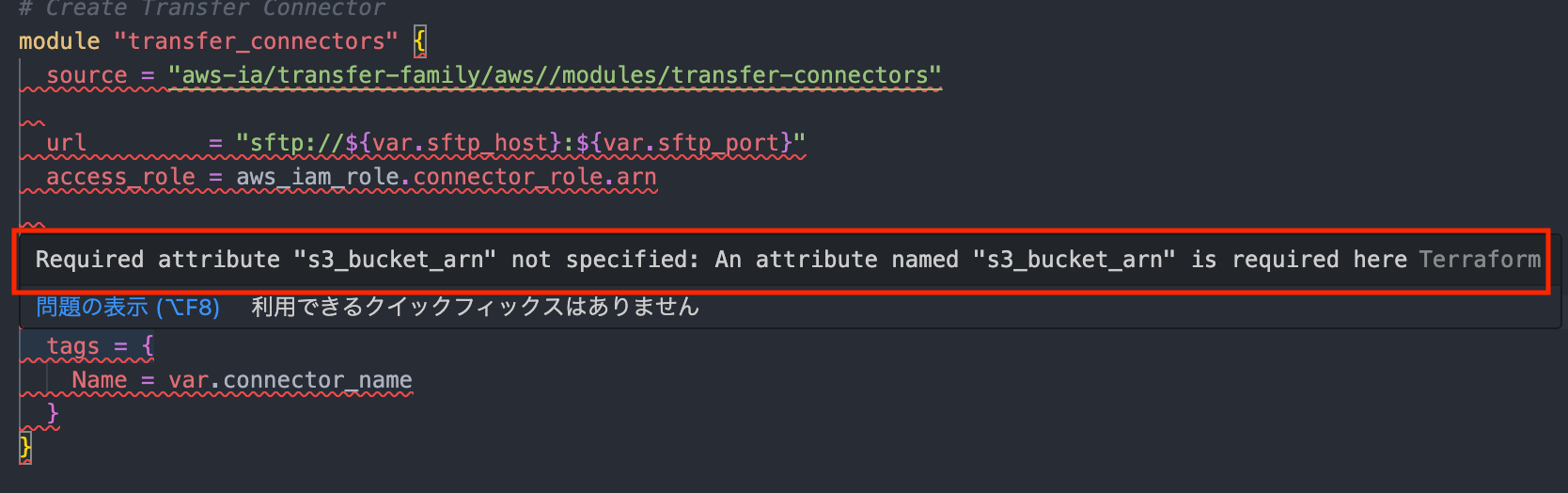

At the time of writing, the parent module documentation and the GitHub README state that `s3_bucket_arn` and `url` are required. In actual use, that appears to be incorrect, and the submodule documentation appears to be the accurate one.

Note: VS Code also reports a syntax error saying that `s3_bucket_arn` is required.

The parameters used in v0.2.4 are as follows:

| Parameter Name | Description | Notes |
|---|---|---|
| `access_role` (required) | IAM role ARN to attach to the SFTP connector | |
| `url` (required) | URL of the destination SFTP server | |
| `connector_name` | Connector name | |
| `logging_role` | IAM role ARN for CloudWatch Logs output | |
| `secret_name` | Name of the Secrets Manager secret that stores the authentication information | Used when specifying `sftp_username` and `sftp_private_key` |
| `sftp_username` | Username for SFTP authentication | |
| `sftp_private_key` | Private key for SFTP authentication | |
| `user_secret_id` | ARN of the AWS Secrets Manager secret containing the authentication information | Can be used instead of the two parameters above |
| `secrets_manager_kms_key_arn` | ARN of the KMS key used to encrypt the secret | When using a customer managed key (CMK) |
| `security_policy_name` | Security policy name | |
| `test_connector_post_deployment` | Whether to test the connector connection after deployment | If set to `true` and the connection succeeds, the public key used for verification is registered (explained later) |
| `trusted_host_keys` | Public key for destination verification | |
| `tags` | Tags to assign to resources | |

For background on what these parameters mean, see our earlier coverage, where we built a connector from the management console.

https://dev.classmethod.jp/articles/sftp-connector-add-security-policy-setting/

### Code

The complete code used to build the above is stored in the following repository:

An IAM role and an S3 bucket are needed in addition to the connector, so this time I split the code into two modules:

```
.
└── modules
    ├── s3-storage       # Destination bucket
    └── sftp-connector   # SFTP connector resource (including IAM role)
```

I'll skip the s3-storage module since it only creates a bucket. The sftp-connector module consists of the call to the transfer-connectors submodule and the IAM role resources.

For the secret, I took a shortcut and reused an existing one from earlier testing.

```hcl
# SFTP connector, created with the transfer-connectors submodule
module "transfer_connectors" {
  source = "aws-ia/transfer-family/aws//modules/transfer-connectors"

  url            = "sftp://${var.sftp_host}:${var.sftp_port}"
  access_role    = aws_iam_role.connector_role.arn
  user_secret_id = var.user_secret_id # existing Secrets Manager secret

  trusted_host_keys              = var.sftp_trusted_host_keys
  test_connector_post_deployment = true

  tags = {
    Name = var.connector_name
  }
}

# Role that the connector assumes (trusted by the Transfer Family service)
resource "aws_iam_role" "connector_role" {
  name = "${var.connector_name}-connector-role"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action = "sts:AssumeRole"
        Effect = "Allow"
        Principal = {
          Service = "transfer.amazonaws.com"
        }
      }
    ]
  })
}

# Object-level and bucket-level access to the bucket used for transfers
resource "aws_iam_role_policy" "connector_s3_policy" {
  name = "${var.connector_name}-s3-policy"
  role = aws_iam_role.connector_role.id

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Effect = "Allow"
        Action = [
          "s3:GetObject",
          "s3:PutObject",
          "s3:DeleteObject",
          "s3:GetObjectVersion",
          "s3:GetObjectTagging",
          "s3:PutObjectTagging"
        ]
        Resource = "${var.s3_bucket_arn}/*"
      },
      {
        Effect = "Allow"
        Action = [
          "s3:ListBucket",
          "s3:GetBucketLocation"
        ]
        Resource = var.s3_bucket_arn
      }
    ]
  })
}

# Read access to the secret that holds the SFTP credentials
resource "aws_iam_role_policy" "connector_secrets_policy" {
  name = "${var.connector_name}-secrets-policy"
  role = aws_iam_role.connector_role.id

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Effect = "Allow"
        Action = [
          "secretsmanager:GetSecretValue",
          "secretsmanager:DescribeSecret"
        ]
        Resource = var.user_secret_id
      }
    ]
  })
}
```

## Execution

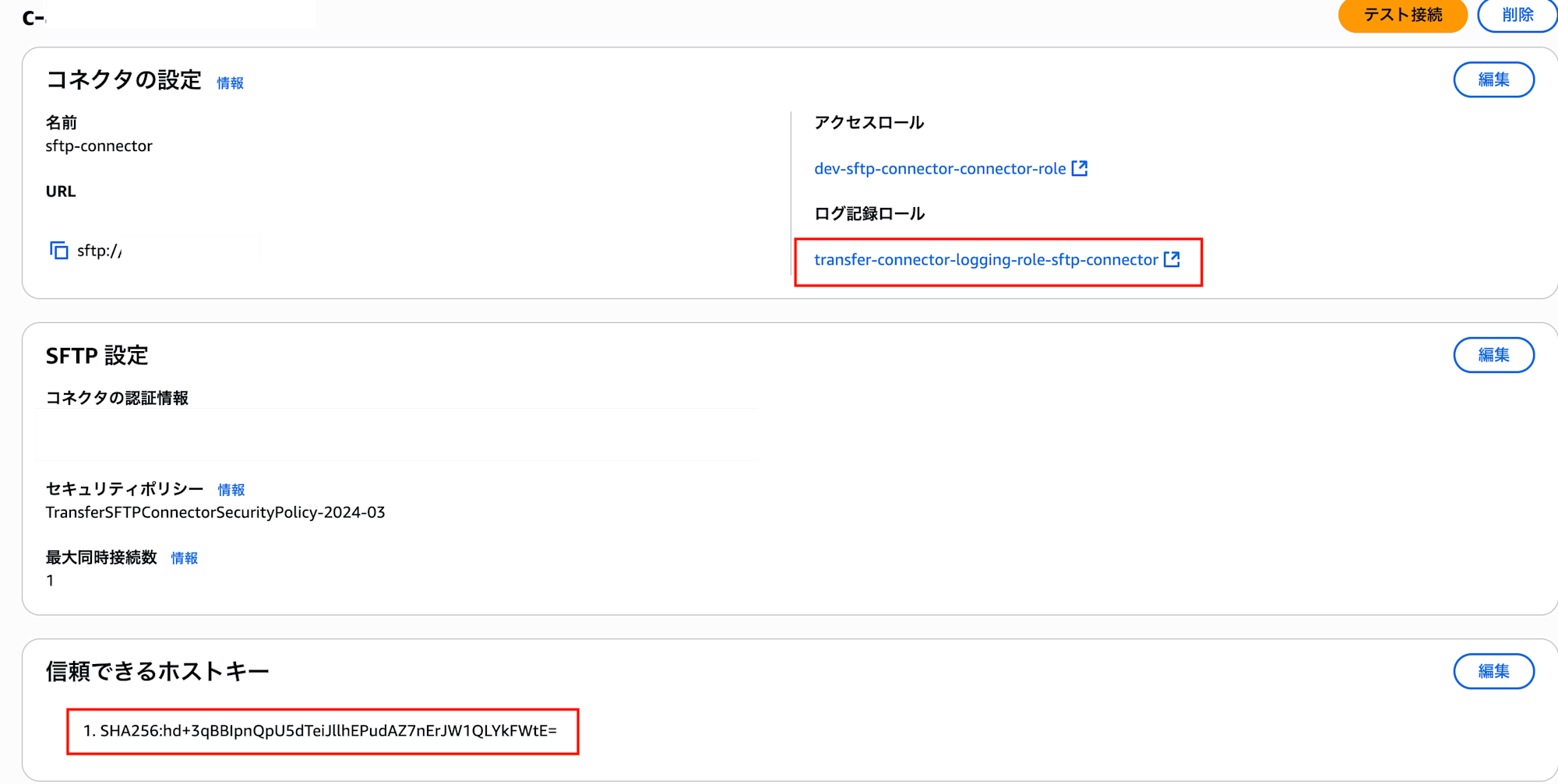

I'll omit the logs during execution since they just show resources being generated, but the connector is created as shown below.

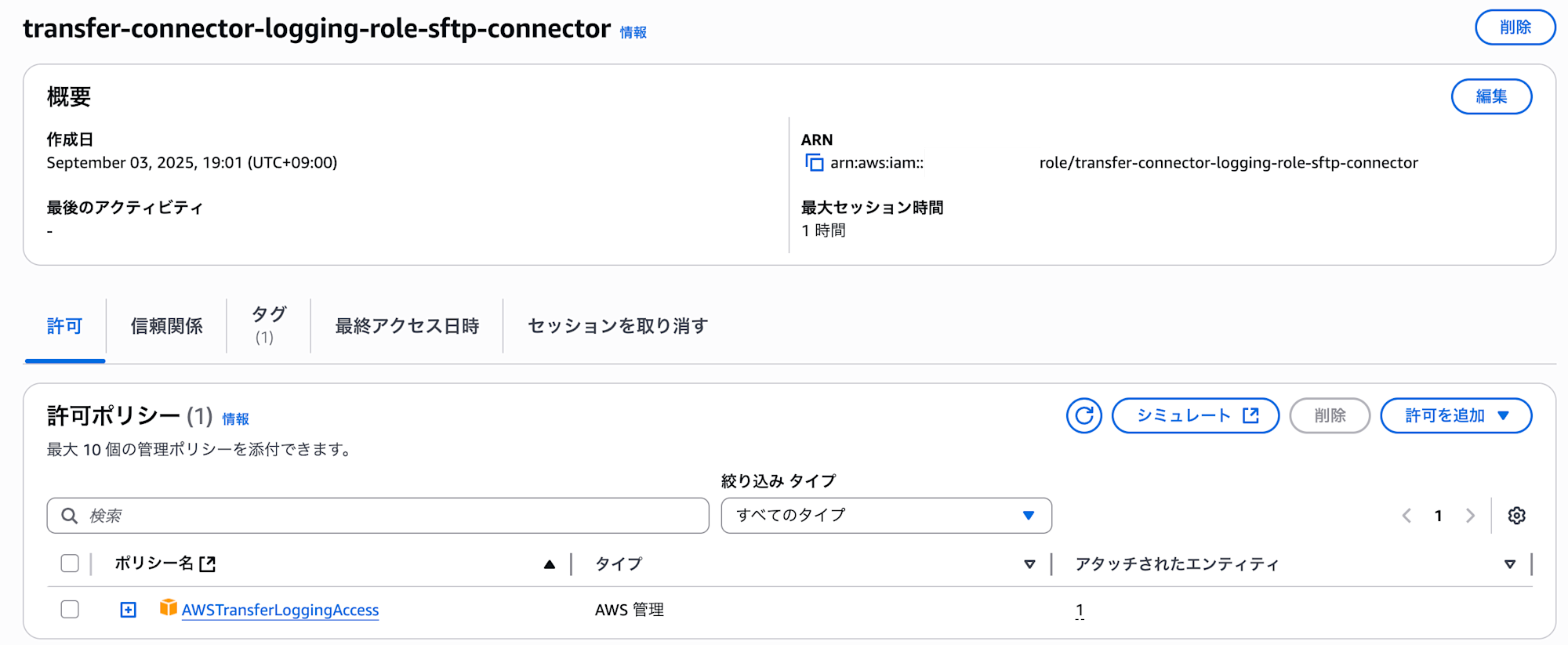

Although I did not specify them explicitly, a role for CloudWatch Logs and a trusted host key were also created.

The role is assigned the AWS managed policy `AWSTransferLoggingAccess` for log output.

As for the host key, setting `test_connector_post_deployment = true` runs a predefined script that discovers the server's host key and registers it on the connector.

Extracting the script from the logs and formatting it gives the following code, which appears to be how the host key is registered automatically:

```bash

#!/bin/sh
echo "Waiting 10 seconds for connector to be fully ready..."
sleep 10

if ! command -v aws &> /dev/null; then
  echo "AWS CLI not found - connector testing skipped"
  echo "Deployment completed successfully"
  exit 0
fi

AWS_VERSION=$(aws --version 2>&1 | cut -d'/' -f2 | cut -d' ' -f1)
if [ -n "$AWS_VERSION" ]; then
  MAJOR=$(echo "$AWS_VERSION" | cut -d'.' -f1)
  MINOR=$(echo "$AWS_VERSION" | cut -d'.' -f2)
  if [ "$MAJOR" -lt 2 ] || ([ "$MAJOR" -eq 2 ] && [ "$MINOR" -lt 28 ]); then
    echo "AWS CLI version $AWS_VERSION detected - connector testing requires version 2.28.x or above"
    echo "connector testing skipped"
    echo "Deployment completed successfully"
    exit 0
  fi
  echo "AWS CLI version $AWS_VERSION - version check passed"
else
  echo "Could not determine AWS CLI version - connector testing skipped"
  echo "Deployment completed successfully"
  exit 0
fi

if ! command -v jq &> /dev/null; then
  echo "jq not found - connector testing skipped"
  echo "Deployment completed successfully"
  exit 0
fi

echo "Testing connection to discover host key..."
MAX_RETRIES=3
RETRY_COUNT=0
HOST_KEY=""

while [ $RETRY_COUNT -lt $MAX_RETRIES ] && [ -z "$HOST_KEY" ]; do
  RETRY_COUNT=$((RETRY_COUNT + 1))
  echo "Attempt $RETRY_COUNT/$MAX_RETRIES: Testing connection..."

  DISCOVERY_RESULT=$(aws transfer test-connection \
    --connector-id c-xxxxx \
    --region ap-northeast-1 \
    --output json 2>/dev/null || echo '{}')
  echo "DEBUG - Discovery Result: $DISCOVERY_RESULT"

  STATUS=$(echo "$DISCOVERY_RESULT" | jq -r '.Status // empty')
  echo "DEBUG - Status: $STATUS"

  if [ "$STATUS" = "ERROR" ]; then
    ERROR_MSG=$(echo "$DISCOVERY_RESULT" | jq -r '.StatusMessage // empty')
    echo "Connection test failed: $ERROR_MSG"
    echo "DEBUG - Full error response: $DISCOVERY_RESULT"
    if echo "$ERROR_MSG" | grep -q "Cannot access secret manager"; then
      echo "Secret manager not ready, waiting 10 seconds..."
      sleep 10
      continue
    fi
  elif [ "$STATUS" = "OK" ]; then
    echo "Connection test successful - connector is properly configured"
    echo "Deployment completed successfully"
    exit 0
  fi

  HOST_KEY=$(echo "$DISCOVERY_RESULT" | jq -r '.SftpConnectionDetails.HostKey // empty')
  echo "DEBUG - Host Key: $HOST_KEY"

  if [ -n "$HOST_KEY" ] && [ "$HOST_KEY" != "null" ]; then
    echo "Host key discovered: $HOST_KEY"
    break
  else
    echo "Host key not found, retrying in 10s..."
    sleep 10
  fi
done

if [ -n "$HOST_KEY" ] && [ "$HOST_KEY" != "null" ]; then
  echo "Updating connector with discovered host key..."
  UPDATE_RESULT=$(aws transfer update-connector \
    --connector-id c-xxxxx \
    --region ap-northeast-1 \
    --url "sftp://sftp.example.com:22" \
    --access-role "arn:aws:iam::111122223333:role/dev-sftp-connector-connector-role" \
    --logging-role "arn:aws:iam::111122223333:role/transfer-connector-logging-role-sftp-connector" \
    --sftp-config "UserSecretId=arn:aws:secretsmanager:ap-northeast-1:111122223333:secret:aws/transfer/sftp-connector-bw-bastion-xxxxx,TrustedHostKeys=$HOST_KEY" \
    --output json)
  echo "DEBUG - Update Result: $UPDATE_RESULT"

  echo "Testing final connection with trusted host key..."
  FINAL_TEST=$(aws transfer test-connection \
    --connector-id c-xxxxx \
    --region ap-northeast-1 \
    --output json)
  echo "DEBUG - Final Test Result: $FINAL_TEST"

  FINAL_STATUS=$(echo "$FINAL_TEST" | jq -r '.Status')
  echo "Final connection status: $FINAL_STATUS"

  if [ "$FINAL_STATUS" = "OK" ]; then
    echo "Connector configured and tested successfully"
  else
    echo "Final test failed: $FINAL_STATUS"
  fi
fi

```

I didn't cover it at the time, but this script appears to rely on functionality added in an April 2025 update.

https://aws.amazon.com/about-aws/whats-new/2025/04/aws-transfer-family-configuration-options-sftp-connectors/

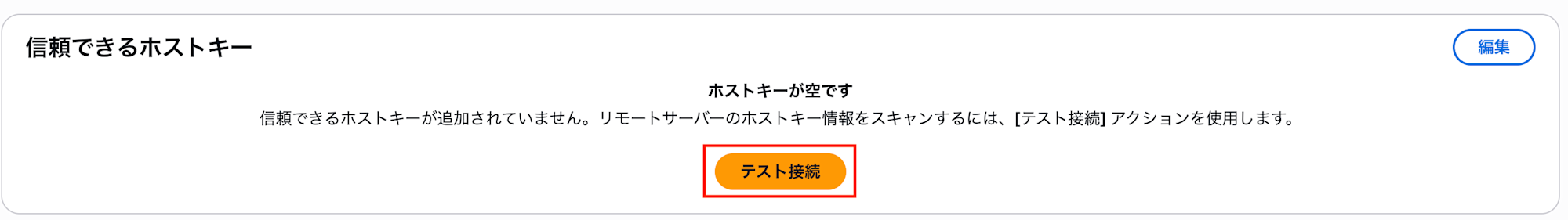

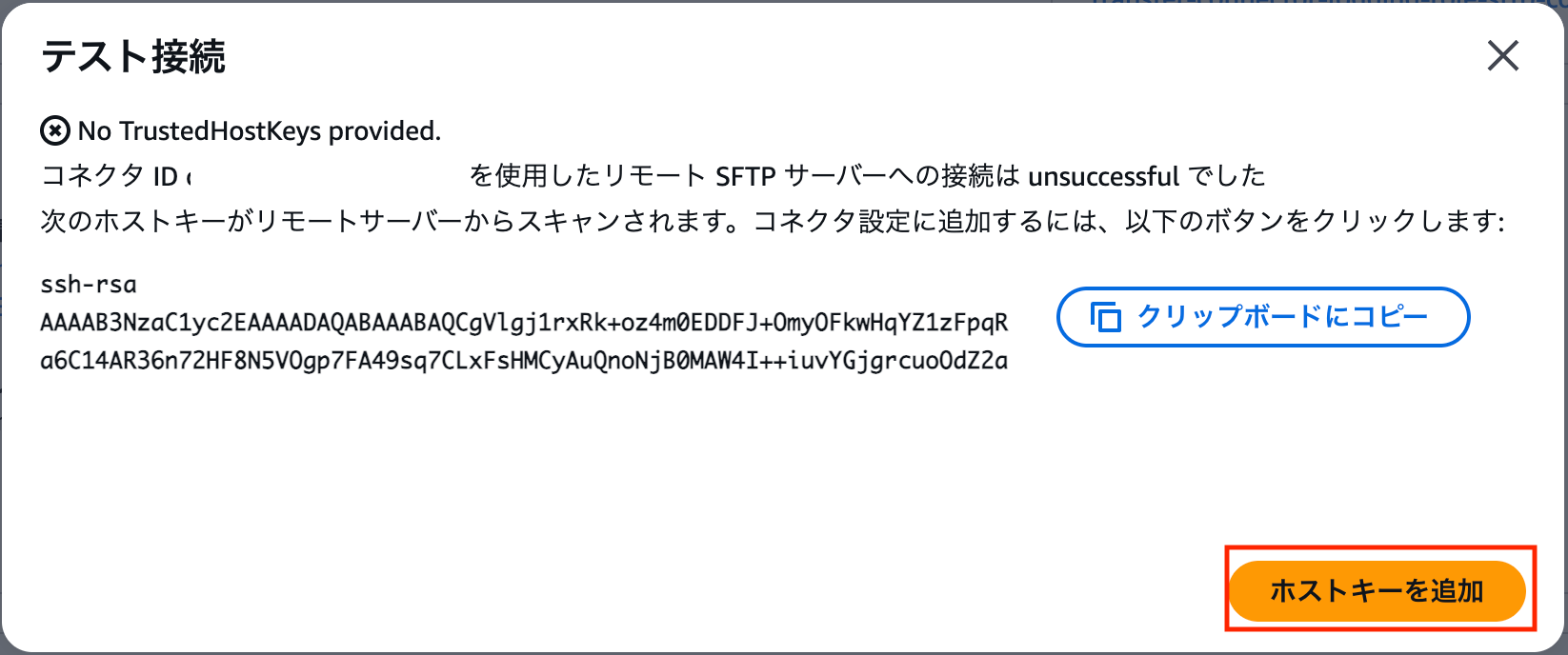

Prior to this update, users needed to extract the host key (public key) from the server and register it on the SFTP connector side. With this update, the service can now obtain and register the host key during a test connection, and the above script is a registration script that uses this feature.
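For reference, the manual step that this update made optional could look roughly like the sketch below. It assumes `ssh-keyscan` (part of OpenSSH) is available locally and that the connector expects each trusted host key as `<key type> <base64 body>` with the host name removed. `to_trusted_host_key` is a hypothetical helper name, and `sftp.example.com` is a placeholder.

```bash
#!/bin/sh
# Sketch: turn ssh-keyscan output ("host key-type base64-body") into
# "key-type base64-body". Comment lines and malformed lines are dropped.
# to_trusted_host_key is a hypothetical helper, not part of the module.
to_trusted_host_key() {
  awk '!/^#/ && NF >= 3 { print $2, $3 }'
}

# Usage (needs network access to the server; host and port are placeholders):
#   ssh-keyscan -p 22 -t rsa sftp.example.com 2>/dev/null | to_trusted_host_key
```

The resulting string is what would be passed to the connector as a trusted host key, for example through `var.sftp_trusted_host_keys` in the module call earlier (assuming that variable is a list of such strings).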

In the management console, you can also detect and register the host key when running a test connection.

**Please note that even if the connection fails during execution, the terraform apply itself will be considered successful.**
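Because the apply result does not tell you whether a key was registered, one option is to query the connector afterwards. The following is a minimal sketch, assuming the AWS CLI is configured with credentials and the connector's region. The function names are hypothetical, and `c-xxxxx` stands in for your connector ID.

```bash
#!/bin/sh
# Sketch: print the trusted host keys currently registered on a connector.
# registered_host_keys / host_key_registered are hypothetical helper names.
registered_host_keys() {
  aws transfer describe-connector \
    --connector-id "$1" \
    --query 'Connector.SftpConfig.TrustedHostKeys' \
    --output text
}

# Succeeds only when the CLI call works and returns at least one key.
# An empty result or the literal "None" is treated as "no key registered".
host_key_registered() {
  keys=$(registered_host_keys "$1") || return 1
  [ -n "$keys" ] && [ "$keys" != "None" ]
}

# Usage:
#   host_key_registered c-xxxxx && echo "host key registered" \
#     || echo "no host key found; check the apply log"
```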

If the key is not registered, check the apply log. In my case, I hadn't updated the AWS CLI in a while, and the connection test was being skipped because my version was older than the 2.28.x that the script requires.
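To catch this before running `terraform apply`, a small preflight check along these lines may help. It is only a sketch that mirrors the conditions visible in the script above (AWS CLI 2.28 or later, plus `jq`); the function names are hypothetical.

```bash
#!/bin/sh
# Sketch: succeed only when the given version string is 2.28 or newer.
# cli_version_ok / preflight are hypothetical helper names.
cli_version_ok() {
  major=${1%%.*}
  rest=${1#*.}
  minor=${rest%%.*}
  # Reject empty or non-numeric components.
  case "$major$minor" in
    ''|*[!0-9]*) return 1 ;;
  esac
  [ -n "$major" ] && [ -n "$minor" ] || return 1
  [ "$major" -gt 2 ] || { [ "$major" -eq 2 ] && [ "$minor" -ge 28 ]; }
}

# Check the local tools that the post-deployment script depends on.
preflight() {
  command -v aws >/dev/null 2>&1 || { echo "AWS CLI not found"; return 1; }
  command -v jq >/dev/null 2>&1 || { echo "jq not found"; return 1; }
  version=$(aws --version 2>&1 | cut -d'/' -f2 | cut -d' ' -f1)
  cli_version_ok "$version" || { echo "AWS CLI $version is older than 2.28"; return 1; }
  echo "preflight OK (AWS CLI $version)"
}

# Usage:
#   preflight && terraform apply
```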

## Conclusion

I built an SFTP connector with Terraform.

While SFTP connectors themselves aren't new, being able to manage them with Terraform adds another option, which can be quite useful depending on your needs.

In this case, I used an existing SFTP server and an existing secret for the authentication information, which kept things simple. If you want to create everything from scratch in one go, including creating the user on the server, generating a private key (or bringing your own), and passing that information to the Terraform module or Secrets Manager, it will likely be somewhat more involved.

SFTP connectors are mainly intended for existing SFTP servers on which you cannot install the AWS CLI, so keep them in mind if you run into that situation.
