Vercel AI Gateway Is Now Generally Available (GA)

Hello, this is Toyoshima.

Vercel's AI Gateway is now generally available (GA).

## What is AI Gateway

AI Gateway is a service that exposes a unified API, giving you access to more than 100 models through a single endpoint.

AI Gateway is ideal for the following use cases:

- When you want to find the best fit by trying multiple models
  - For example, if you want a different model per use case, such as "GPT for text generation but Claude for summarization," you can switch between them through the same endpoint.
- When you need high request throughput
  - A single vendor's rate limits (requests per second) cap how much you can send. AI Gateway can spread requests across multiple vendors, which makes large-scale traffic easier to handle.
- When you want to try the latest models right away
  - When a new model is released, you can try it without reworking detailed connection settings, which makes it easier to keep up with new technology.
- When you need a system that is resilient to failures
  - Even if a specific model or vendor is temporarily down, AI Gateway can automatically switch to another model.
- When you want to see costs and usage in one place
  - Normally you would have to check a separate dashboard for each vendor; with AI Gateway you can see which models were used, how much, and what they cost in one place.
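
As a rough illustration of the first use case, per-task model switching can be as small as a lookup table of model IDs, since every model is reached through the same endpoint. This is only a sketch: the task names and the `modelForTask` helper are hypothetical, and the Claude model ID is an assumption that should be checked against the gateway's model list.

```typescript
// Hypothetical task names used only for this sketch.
type Task = 'generation' | 'summarization'

// Model IDs use the "provider/model" form.
const MODEL_BY_TASK: Record<Task, string> = {
  generation: 'openai/gpt-4o',
  // Assumed ID; confirm the exact name in the gateway's model list.
  summarization: 'anthropic/claude-sonnet-4',
}

// Returns the model ID to pass as `model` for the given task.
function modelForTask(task: Task): string {
  return MODEL_BY_TASK[task]
}

console.log(modelForTask('summarization'))
```

Because only the ID string changes, swapping the model for one task does not touch the calling code.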

Reference: Release blog

https://vercel.com/blog/ai-gateway-is-now-generally-available

## AI Gateway Sample Code

The AI Gateway demo code is a useful reference, but as of the GA release the AI SDK recognizes AI Gateway automatically, so you can use it with minimal configuration.

If you need to route requests through a proxy server, you can also set `baseURL` explicitly.
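
To make the explicit `baseURL` case concrete, here is a minimal sketch that builds provider settings from environment variables. The helper `gatewaySettingsFromEnv` and the variable name `AI_GATEWAY_BASE_URL` are hypothetical and introduced only for illustration; `AI_GATEWAY_API_KEY` is the variable used elsewhere in this article. The commented usage assumes the `createGateway` factory from `@ai-sdk/gateway`.

```typescript
// Settings in the shape this sketch assumes a gateway provider factory accepts.
interface GatewaySettings {
  apiKey: string
  baseURL?: string
}

// AI_GATEWAY_BASE_URL is a hypothetical variable name for this sketch.
function gatewaySettingsFromEnv(
  env: Record<string, string | undefined>,
): GatewaySettings {
  const apiKey = env['AI_GATEWAY_API_KEY']
  if (!apiKey) throw new Error('AI_GATEWAY_API_KEY is not set')

  // Strip trailing slashes so the URL can be joined with request paths cleanly.
  const baseURL = env['AI_GATEWAY_BASE_URL']?.replace(/\/+$/, '')

  // Only include baseURL when one was provided; otherwise the default applies.
  return baseURL ? { apiKey, baseURL } : { apiKey }
}

// Assumed usage with the AI SDK's gateway provider:
//   import { createGateway } from '@ai-sdk/gateway'
//   const gateway = createGateway(gatewaySettingsFromEnv(process.env))
//   streamText({ model: gateway('openai/gpt-4o'), messages })
console.log(gatewaySettingsFromEnv({ AI_GATEWAY_API_KEY: 'example-key' }))
```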

```typescript
import { type ModelMessage, streamText } from 'ai'
import dotenv from 'dotenv'
import * as readline from 'node:readline/promises'

/*
  Set the AI Gateway API key, which can be issued from the Vercel admin panel:
  AI_GATEWAY_API_KEY=...
*/
dotenv.config({ path: '.env.local' })

// Interactive chat application
async function runChatApp() {
  const terminal = readline.createInterface({
    input: process.stdin,
    output: process.stdout,
  })

  // Conversation history, sent with every request
  const messages: ModelMessage[] = []

  while (true) {
    const userInput = await terminal.question('You: ')
    messages.push({ role: 'user', content: userInput })

    const result = streamText({
      model: 'openai/gpt-4o', // Just change the model ID to use other providers
      messages,
    })

    // Print the reply as it streams in, and keep the full text for the history
    let fullResponse = ''
    process.stdout.write('\nAssistant: ')
    for await (const delta of result.textStream) {
      fullResponse += delta
      process.stdout.write(delta)
    }
    process.stdout.write('\n\n')

    messages.push({ role: 'assistant', content: fullResponse })
  }
}

runChatApp().catch(console.error)
```

Previously, each provider required its own API key and its own implementation to match its API specification.

With AI Gateway, a single API key is enough, and you select a model simply by specifying its model ID.

Furthermore, when deployed on Vercel, it supports OIDC authentication in addition to API key authentication.

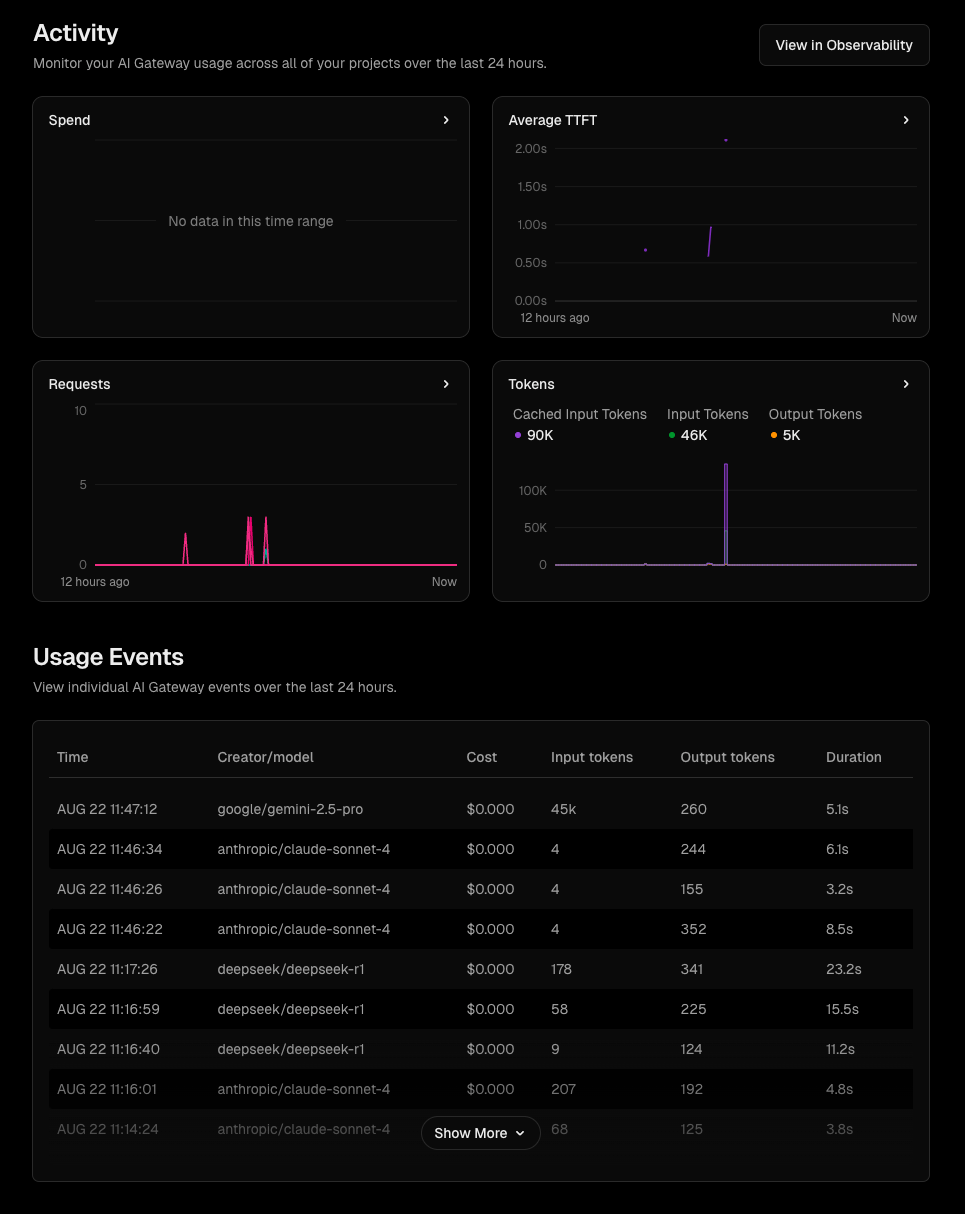
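
The choice between the two authentication methods can be pictured as a small precedence check. This is a sketch under stated assumptions, not the AI SDK's actual implementation: the `resolveGatewayAuth` helper is hypothetical, the "API key first, then OIDC" order is an assumption, and so is reading the OIDC token from a `VERCEL_OIDC_TOKEN` environment variable.

```typescript
// Which credential a request would carry in this sketch.
type GatewayAuth =
  | { method: 'api-key'; token: string }
  | { method: 'oidc'; token: string }

// Assumed precedence: an explicit API key wins, otherwise fall back to OIDC.
function resolveGatewayAuth(
  env: Record<string, string | undefined>,
): GatewayAuth {
  const apiKey = env['AI_GATEWAY_API_KEY']
  if (apiKey) return { method: 'api-key', token: apiKey }

  // Assumption: the OIDC token is exposed through this environment variable.
  const oidcToken = env['VERCEL_OIDC_TOKEN']
  if (oidcToken) return { method: 'oidc', token: oidcToken }

  throw new Error('No AI Gateway credentials found')
}

console.log(resolveGatewayAuth({ VERCEL_OIDC_TOKEN: 'example-token' }).method)
```

In practice the SDK handles this for you; the sketch only shows why no API key needs to be configured in a Vercel deployment where OIDC is available.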

## Visualization of Cost and Performance

When using multiple LLMs, it can be complicated to track costs and manage usage.

AI Gateway's dashboard allows you to visualize which models are being used, how much, and what costs are being incurred.

This lets you choose models based on cost-effectiveness and helps prevent unexpected cost overruns.
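
The per-model breakdown described above amounts to a simple aggregation. The sketch below is not the dashboard's API: the `UsageRecord` shape and the `summarizeByModel` helper are hypothetical, and the sample figures are made-up placeholders rather than real prices or measurements.

```typescript
// Hypothetical shape of one logged request.
interface UsageRecord {
  model: string
  inputTokens: number
  outputTokens: number
  costUsd: number
}

interface ModelSummary {
  requests: number
  totalTokens: number
  costUsd: number
}

// Groups usage records by model ID and totals requests, tokens, and cost.
function summarizeByModel(records: UsageRecord[]): Map<string, ModelSummary> {
  const summary = new Map<string, ModelSummary>()
  for (const record of records) {
    const entry = summary.get(record.model) ?? { requests: 0, totalTokens: 0, costUsd: 0 }
    entry.requests += 1
    entry.totalTokens += record.inputTokens + record.outputTokens
    entry.costUsd += record.costUsd
    summary.set(record.model, entry)
  }
  return summary
}

// Placeholder data for illustration only.
const sampleRecords: UsageRecord[] = [
  { model: 'openai/gpt-4o', inputTokens: 1000, outputTokens: 500, costUsd: 0.25 },
  { model: 'openai/gpt-4o', inputTokens: 2000, outputTokens: 500, costUsd: 0.5 },
  { model: 'anthropic/claude-sonnet-4', inputTokens: 800, outputTokens: 200, costUsd: 0.125 },
]

for (const [model, totals] of summarizeByModel(sampleRecords)) {
  console.log(model, totals)
}
```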

Example of admin screen

Details: https://vercel.com/docs/ai-gateway/observability

## Pricing Information

There is no fee for AI Gateway itself; only the charges from AI providers apply.

- You purchase AI Gateway credits in advance, and they are consumed as you use the service

- There is a free tier of $5 per month, making it easy to try out

- Besides the models provided through Vercel, you can also use your own API keys (BYOK), in which case pricing depends on the AI provider

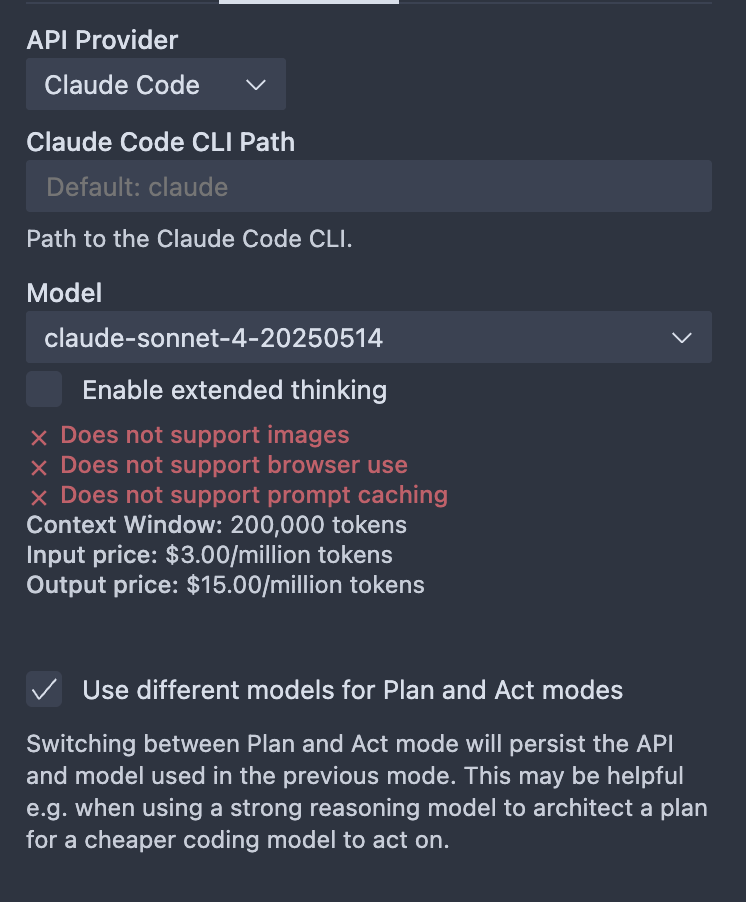

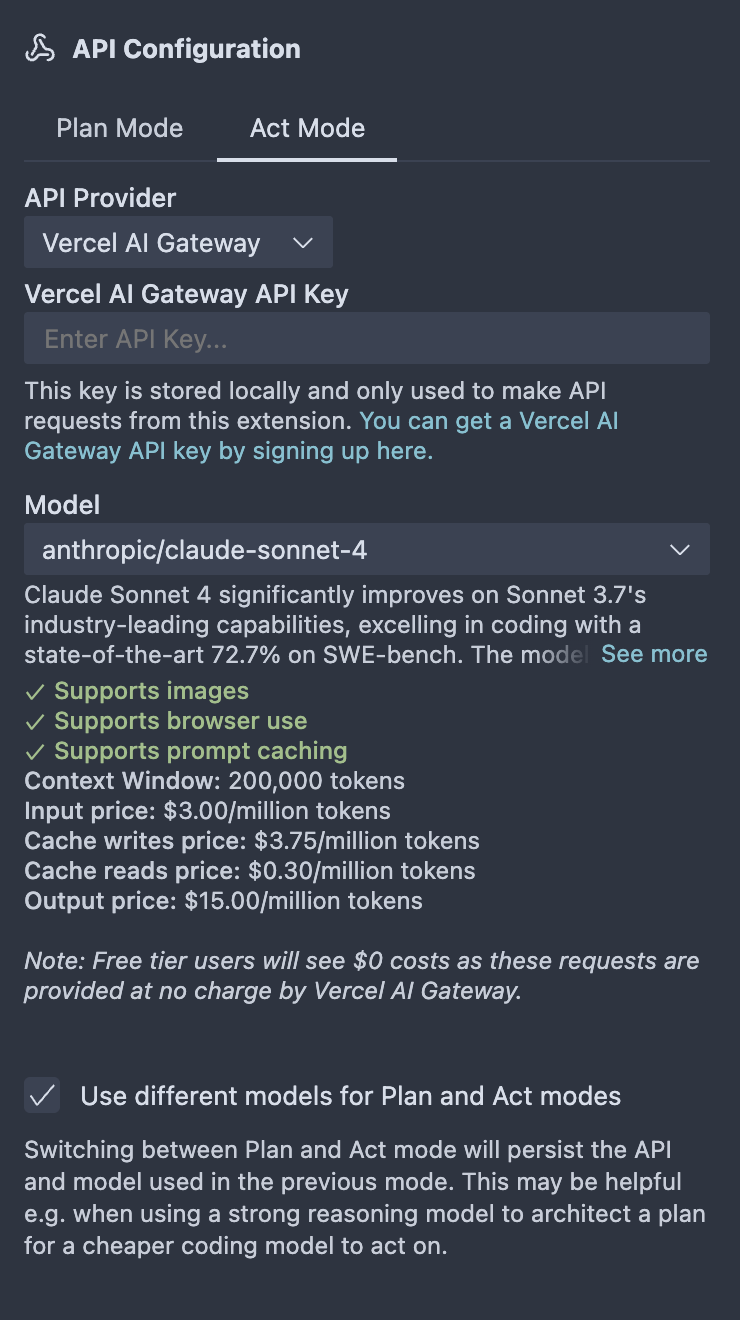

## Using AI Gateway from Cline

Alongside the AI Gateway GA announcement, Cline also posted about its support.

Comparing Claude Sonnet 4 via Cline + AI Gateway against Cline alone, these were the differences I noticed:

- The context window and pricing are the same

- However, image input and caching are supported through AI Gateway (I wonder why...)

In particular, because AI Gateway offers a $5 free tier, I found it a convenient way to compare different models from Cline.

Note: Judging from the UI, it appears that BYOK cannot be used from Cline.

## Summary

Vercel AI Gateway is a system that greatly improves the development and operational experience of LLM-powered applications.

- Ease of switching between models

- Elimination of provider dependency

- Centralized monitoring of costs and usage

- Free tier ($5 worth) to easily try it out

I believe these features could make it a standard choice in future AI development.

I hope this article is helpful for those interested in AI development or AI Gateway.