I tried scraping Prometheus-format metrics from AWS FireLens (AWS for Fluent Bit) with the AWS Distro for OpenTelemetry (ADOT) Collector and PUTting them to CloudWatch Metrics

I want to collect Fluent Bit metrics and use them as CloudWatch metrics

Hello, this is Nonpi (@non____97).

Have you ever wanted to collect Fluent Bit metrics and use them as CloudWatch metrics? I have.

On ECS, AWS FireLens (AWS for Fluent Bit) is a common choice for the log router. In that setup, you sometimes need Fluent Bit's own metrics for troubleshooting.

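As described in the following article, Fluent Bit can expose Prometheus-format metrics. These include the number of records and bytes ingested successfully and the number of chunks dropped or failed at output.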

A Prometheus server can therefore scrape these metrics to track the state of Fluent Bit.

To PUT the collected metrics to CloudWatch Metrics, most people would probably use the CloudWatch Agent.

The following AWS documentation explains how to collect Prometheus-format metrics with the CloudWatch Agent.

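However, if for some reason you want to run the CloudWatch Agent as a sidecar, two things become a concern:

- You cannot tell which task the metrics came from
- The log stream name is the same for every task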

When a sidecar CloudWatch Agent scrapes the metrics, it writes logs like the following:

{
  "CloudWatchMetrics": [
    {
      "Namespace": "FluentBit/ECS",
      "Dimensions": [
        ["ClusterName", "TaskDefinitionFamily", "name"],
        ["ClusterName", "TaskDefinitionFamily"],
        ["ClusterName", "name"],
        ["ClusterName"]
      ],
      "Metrics": [
        {"Name": "fluentbit_filter_records_total", "Unit": "", "StorageResolution": 60},
        {"Name": "fluentbit_filter_bytes_total", "Unit": "", "StorageResolution": 60},
        {"Name": "fluentbit_filter_drop_records_total", "Unit": "", "StorageResolution": 60}
      ]
    }
  ],
  "ClusterName": "EcsNativeBlueGreenStack-EcsConstructCluster14AE103B-TOUf761WepWh",
  "TaskDefinitionFamily": "EcsNativeBlueGreenStackEcsConstructTaskDefinitionF683F4B2",
  "Timestamp": "1769069293739",
  "Version": "0",
  "fluentbit_filter_add_records_total": 0,
  "fluentbit_filter_drop_bytes_total": 0,
  "instance": "127.0.0.1:2020",
  "job": "fluent-bit-metrics",
  "name": "parser.4",
  "prom_metric_type": "counter",
  "fluentbit_filter_bytes_total": 12720,
  "fluentbit_filter_drop_records_total": 0,
  "fluentbit_filter_records_total": 24
}

The task ID is missing.

The log stream name is fixed to the job_name value passed in PROMETHEUS_CONFIG_CONTENT. With job_name: fluent-bit, the CloudWatch Agent in every task writes to a log stream named fluent-bit, so you cannot tell which task a given metric came from.

As the following AWS documentation explains, you can also get the task ID if you use ECS Service Discovery to find the containers to scrape.

However, an ECS Service Discovery scrape job definition does not let you specify an IP address. If you run the agent as a sidecar, it therefore also tries to scrape metrics from other tasks.

I also checked whether "TaskId":"${aws:ECSTaskId}" could be set on the CloudWatch Agent side. The related GitHub Issue saw no activity and was closed.

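Another option would be to use entryPoint or command in the task definition to edit the configuration file using information retrieved from the ECS task metadata endpoint. However, the CloudWatch Agent base image is scratch, so curl, sed, jq, and similar tools are not installed.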
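For reference, such an entryPoint script would need only a few fields. The task metadata endpoint v4 is reachable through the `ECS_CONTAINER_METADATA_URI_V4` environment variable, and `${ECS_CONTAINER_METADATA_URI_V4}/task` returns JSON that includes `Cluster`, `TaskARN`, and `Family`. The task ID is the last path segment of `TaskARN`. The Python sketch below only illustrates that extraction (the CloudWatch Agent image has no Python either). The sample payload is trimmed and uses a hypothetical account ID, and the helper names are hypothetical.

```python
import json
import os
import urllib.request


def task_id_from_arn(task_arn: str) -> str:
    """Return the task ID, i.e. the last path segment of a task ARN."""
    return task_arn.rsplit("/", 1)[-1]


def summarize_task_metadata(payload: dict) -> dict:
    """Pick the fields a sidecar would want to attach to its metrics."""
    # "Cluster" may be a full ARN (e.g. on Fargate) or a plain name; keep the name.
    cluster_name = payload["Cluster"].rsplit("/", 1)[-1]
    return {
        "ClusterName": cluster_name,
        "TaskId": task_id_from_arn(payload["TaskARN"]),
        "TaskDefinitionFamily": payload["Family"],
    }


def fetch_task_metadata() -> dict:
    """Fetch ${ECS_CONTAINER_METADATA_URI_V4}/task (only works inside an ECS task)."""
    base = os.environ["ECS_CONTAINER_METADATA_URI_V4"]
    with urllib.request.urlopen(f"{base}/task", timeout=2) as resp:
        return json.load(resp)


# Trimmed, hypothetical payload modeled on the ARNs in this article's logs
sample = {
    "Cluster": "arn:aws:ecs:us-east-1:123456789012:cluster/EcsNativeBlueGreenStack-EcsConstructCluster14AE103B-taNihMs8vqle",
    "TaskARN": "arn:aws:ecs:us-east-1:123456789012:task/EcsNativeBlueGreenStack-EcsConstructCluster14AE103B-taNihMs8vqle/0361af82ccb248a8b5e3d430699aa7c8",
    "Family": "EcsNativeBlueGreenStackEcsConstructTaskDefinitionF683F4B2",
}
print(summarize_task_metadata(sample))
```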

If you want to collect Prometheus-format metrics from a sidecar, without a custom image, and PUT them to CloudWatch Metrics, the ADOT (AWS Distro for OpenTelemetry) Collector is a good candidate.

With the ADOT Collector, you can attach information such as the task ID even in a sidecar configuration.

I tried it out.

Trying it out

Test environment

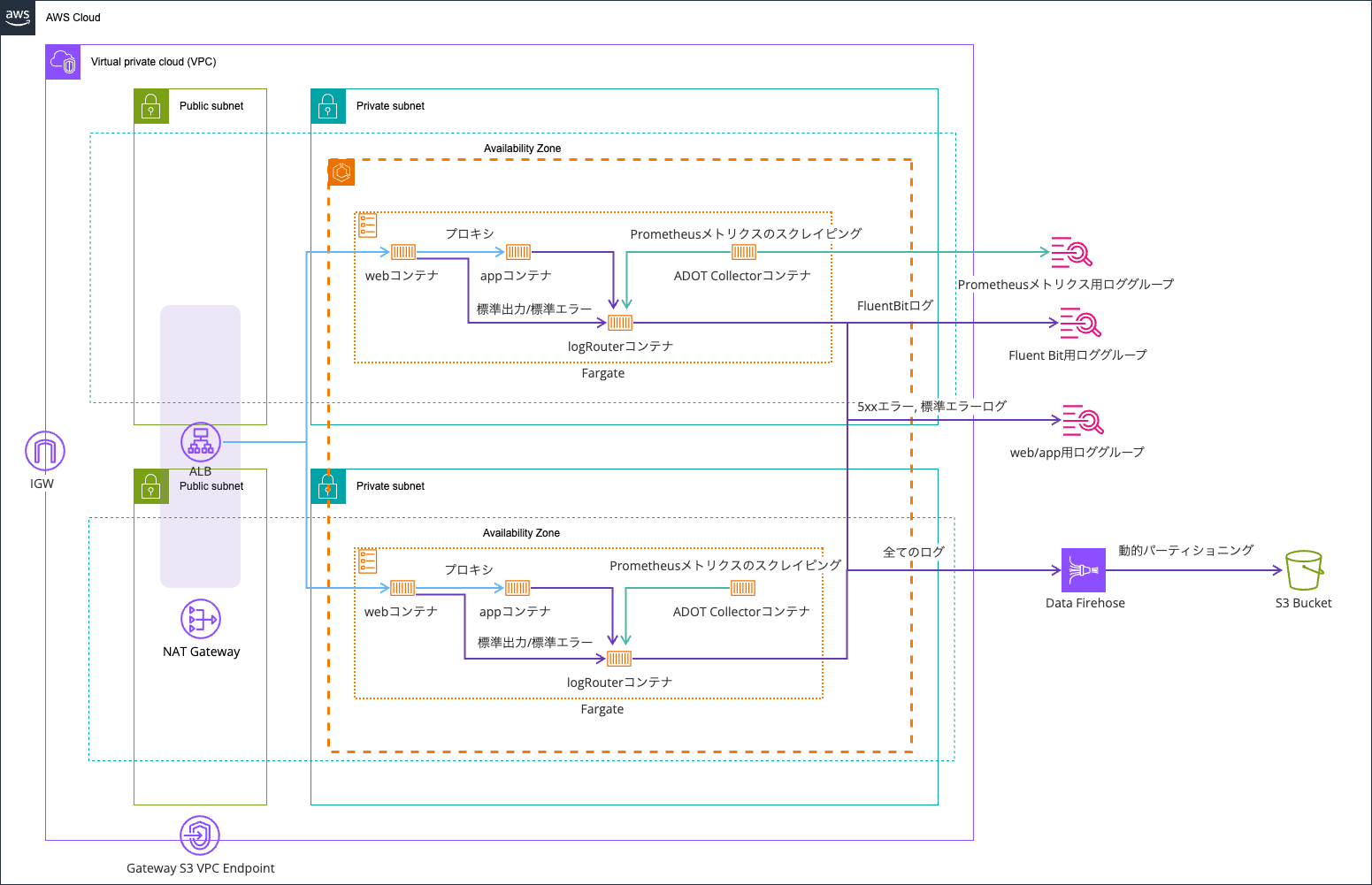

The test environment is shown above.

It is based on the environment used in the following article.

The code is in the following repository.

How the Fluent Bit metrics are collected

This section explains how the Fluent Bit metrics are collected.

First, to expose Fluent Bit metrics in Prometheus format, Fluent Bit's HTTP server needs to listen on a port. Nothing else requires special attention.

# ref : 詳解 FireLens – Amazon ECS タスクで高度なログルーティングを実現する機能を深く知る | Amazon Web Services ブログ https://aws.amazon.com/jp/blogs/news/under-the-hood-firelens-for-amazon-ecs-tasks/
# ref : aws-for-fluent-bit/use_cases/init-process-for-fluent-bit/README.md at mainline · aws/aws-for-fluent-bit https://github.com/aws/aws-for-fluent-bit/blob/mainline/use_cases/init-process-for-fluent-bit/README.md
[SERVICE]
    Flush        1
    Grace        30
    Parsers_File /fluent-bit/parsers/parsers.conf
    # Enable the HTTP server for metrics
    # ref : Monitoring | Fluent Bit: Official Manual https://docs.fluentbit.io/manual/administration/monitoring
    HTTP_Server  On
    HTTP_Listen  0.0.0.0
    HTTP_PORT    2020

.
.
(remainder omitted)
.
.
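To see what the scraper actually receives, you can parse the text exposition format returned by `/api/v2/metrics/prometheus`. The following Python sketch is deliberately simplified. It handles only lines of the form `name{label="value",...} number`, as in the curl output at the end of this article, and skips escaped quotes, commas inside label values, and timestamps, so it is not a full Prometheus parser. `fetch()` assumes Fluent Bit listens on `127.0.0.1:2020`, as configured above.

```python
import re
import urllib.request

# metric name, optional {labels}, value (no timestamps, no escaped quotes)
SAMPLE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)$')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')


def parse_prometheus_text(text: str):
    """Yield (metric_name, labels_dict, value) for each sample line."""
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and "# HELP" / "# TYPE" comment lines
        if not line or line.startswith("#"):
            continue
        m = SAMPLE_RE.match(line)
        if m is None:
            continue
        name, raw_labels, value = m.groups()
        labels = dict(LABEL_RE.findall(raw_labels or ""))
        yield name, labels, float(value)


def fetch(url: str = "http://127.0.0.1:2020/api/v2/metrics/prometheus") -> str:
    """Fetch the raw metrics text from Fluent Bit's HTTP server."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        return resp.read().decode("utf-8")


sample = """# HELP fluentbit_input_records_total Number of input records.
# TYPE fluentbit_input_records_total counter
fluentbit_input_records_total{name="forward.1"} 1043
fluentbit_storage_chunks 0
"""
for name, labels, value in parse_prometheus_text(sample):
    print(name, labels, value)
```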

Next is the ADOT Collector configuration. The following article gives a useful overview of how ADOT Collector configuration is structured.

The Collector scrapes the Fluent Bit port once a minute and writes the results as EMF-format logs, which CloudWatch turns into custom metrics.
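EMF (Embedded Metric Format) is a JSON log event with an `_aws` metadata object, and CloudWatch Logs extracts the metrics declared there. The Python sketch below builds such an event and is modeled on the EMF log shown later in this article. The function name, its arguments, and the cluster name `my-cluster` are hypothetical, and in this setup the awsemf exporter builds these events for you.

```python
import json
import time


def build_emf_event(namespace, dimensions, metrics, timestamp_ms=None):
    """Build one EMF log event as a JSON string.

    dimensions: dict of dimension name -> value (used as a single dimension set)
    metrics:    dict of metric name -> numeric value
    """
    if timestamp_ms is None:
        timestamp_ms = int(time.time() * 1000)
    event = {
        "_aws": {
            "Timestamp": timestamp_ms,
            "CloudWatchMetrics": [
                {
                    "Namespace": namespace,
                    # One dimension set containing every dimension key
                    "Dimensions": [sorted(dimensions)],
                    "Metrics": [
                        {"Name": name, "StorageResolution": 60} for name in metrics
                    ],
                }
            ],
        }
    }
    # Dimension values and metric values live at the top level of the event
    event.update(dimensions)
    event.update(metrics)
    return json.dumps(event)


line = build_emf_event(
    "ECS/FluentBit",
    {"ClusterName": "my-cluster", "TaskId": "a4014d5ce8f44e62a070f61cb7eb15b9", "name": "kinesis_firehose.1"},
    {"fluentbit_output_proc_records": 41},
    timestamp_ms=1769326102184,
)
print(line)
```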

The configuration file itself is as follows:

# Scrape Fluent Bit's Prometheus metrics
# ref: https://aws-otel.github.io/docs/getting-started/container-insights/ecs-prometheus/
receivers:
  prometheus:
    config:
      scrape_configs:
        - job_name: "fluent-bit-metrics"
          scrape_interval: 1m
          scrape_timeout: 10s
          static_configs:
            - targets: ["127.0.0.1:2020"]
          metrics_path: "/api/v2/metrics/prometheus"

processors:
  # Limit memory usage
  # ref : https://github.com/open-telemetry/opentelemetry-collector/blob/main/processor/memorylimiterprocessor/README.md
  memory_limiter:
    check_interval: 1s
    limit_mib: 100
    spike_limit_mib: 25

  # Exclude metrics that have specific name labels
  filter/exclude_names:
    metrics:
      datapoint:
        - 'attributes["name"] == "re_emitted"'
        - 'IsMatch(attributes["name"], "^null\\..*")'
        - 'IsMatch(attributes["name"], "^emitter_for_multiline\\..*")'

  # Add ECS task metadata
  # ref : https://docs.aws.amazon.com/AmazonECS/latest/developerguide/fargate-metadata.html
  resourcedetection:
    # ref : https://github.com/open-telemetry/opentelemetry-collector-contrib/blob/main/processor/resourcedetectionprocessor/internal/aws/ecs/documentation.md
    detectors: [env, ecs]
    timeout: 5s
    override: false

  # ref : opentelemetry-collector-contrib/processor/resourceprocessor/README.md at main · open-telemetry/opentelemetry-collector-contrib https://github.com/open-telemetry/opentelemetry-collector-contrib/blob/main/processor/resourceprocessor/README.md
  resource:
    attributes:
      # Extract ClusterName from the ARN
      - key: aws.ecs.cluster.arn
        pattern: "^arn:aws:ecs:[^:]+:[^:]+:cluster/(?P<ClusterName>[^/]+)$"
        action: extract
      - key: TaskId
        from_attribute: aws.ecs.task.id
        action: insert
      - key: TaskDefinitionFamily
        from_attribute: aws.ecs.task.family
        action: insert
      # Delete attributes that are not needed
      - key: aws.ecs.task.arn
        action: delete
      - key: aws.log.group.arns
        action: delete
      - key: aws.log.stream.arns
        action: delete

  # Convert counters to delta values
  # ref : https://github.com/open-telemetry/opentelemetry-collector-contrib/blob/main/processor/cumulativetodeltaprocessor/README.md
  cumulativetodelta:
    include:
      metrics:
        - "^fluentbit_(input|output)_.*_total$"
      match_type: regexp

  # Remove _total from the names of the metrics converted to deltas
  # ref : https://github.com/open-telemetry/opentelemetry-collector-contrib/blob/main/processor/metricstransformprocessor/README.md
  metricstransform:
    transforms:
      - include: "^(.*)_total$"
        match_type: regexp
        action: update
        new_name: "$${1}"

  # ref : https://github.com/open-telemetry/opentelemetry-collector/tree/main/processor#recommended-processors
  batch:
    timeout: 60s

exporters:
  # ref : https://github.com/open-telemetry/opentelemetry-collector-contrib/blob/main/exporter/awsemfexporter/README.md
  awsemf:
    namespace: ECS/FluentBit
    log_group_name: "/aws/ecs/containerinsights/{ClusterName}/prometheus"
    log_stream_name: "/task/{TaskId}/fluent-bit"
    log_retention: 14
    resource_to_telemetry_conversion:
      enabled: true
    dimension_rollup_option: NoDimensionRollup
    metric_declarations:
      - dimensions:
          - [ClusterName, TaskId, TaskDefinitionFamily, name]
        metric_name_selectors:
          - "^fluentbit_input_.*"
          - "^fluentbit_output_.*"
      - dimensions:
          - [ClusterName, TaskId, TaskDefinitionFamily]
        metric_name_selectors:
          - "^fluentbit_storage_.*"

service:
  pipelines:
    metrics:
      receivers: [prometheus]
      processors:
        [
          memory_limiter,
          filter/exclude_names,
          resourcedetection,
          resource,
          cumulativetodelta,
          metricstransform,
          batch,
        ]
      exporters: [awsemf]
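To check the two regex-based steps in isolation, the following Python sketch mimics what `filter/exclude_names` and the `extract` action of the `resource` processor are configured to do. It reuses the patterns from the YAML above and assumes that the OTTL string literal turns the doubled backslash in `"^null\\..*"` into the regex `^null\..*`. Python's `re` accepts the same `(?P<name>...)` named-group syntax. This only approximates the processors for testing the patterns and is not how they are implemented.

```python
import re

# filter/exclude_names patterns (assumed to be the regexes after OTTL unescaping)
EXCLUDE_PATTERNS = [
    re.compile(r"^null\..*"),
    re.compile(r"^emitter_for_multiline\..*"),
]

# Same pattern as the resource processor's extract action
CLUSTER_ARN_RE = re.compile(r"^arn:aws:ecs:[^:]+:[^:]+:cluster/(?P<ClusterName>[^/]+)$")


def is_excluded(datapoint_attributes: dict) -> bool:
    """True if this sketch considers the data point dropped by filter/exclude_names."""
    name = datapoint_attributes.get("name")
    if name is None:
        # Data points without a "name" label are kept in this sketch
        return False
    if name == "re_emitted":
        return True
    # The patterns are anchored with ^, so search() and match() agree here
    return any(p.search(name) for p in EXCLUDE_PATTERNS)


def extract_cluster_name(cluster_arn: str):
    """Return the ClusterName the extract action would produce, or None."""
    m = CLUSTER_ARN_RE.match(cluster_arn)
    return m.group("ClusterName") if m else None


print(is_excluded({"name": "emitter_for_multiline.2"}))
print(extract_cluster_name(
    "arn:aws:ecs:us-east-1:123456789012:cluster/EcsNativeBlueGreenStack-EcsConstructCluster14AE103B-taNihMs8vqle"
))
```

The sketch makes two points visible. A series labeled `input="re_emitted"`, such as the latency histogram, survives the filter because the filter only looks at the `name` label. The ARN pattern is also hard-coded to the `aws` partition, so it would not match an `arn:aws-cn:` or `arn:aws-us-gov:` cluster ARN.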

The CloudWatch Agent appears to calculate deltas automatically when a Prometheus-format metric is a counter:

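Counter metrics

Prometheus counter metrics are cumulative metrics that represent a single monotonically increasing counter whose value can only increase or be reset to zero. The CloudWatch agent calculates the delta from the previous scrape and sends the delta value as the metric value in the log event. Therefore, the CloudWatch agent starts producing one log event from the second scrape onward and continues with subsequent scrapes, if any.

Prometheus metrics type conversion by the CloudWatch agent - Amazon CloudWatch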

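Here, cumulativetodelta explicitly converts the input and output counters whose names end in _total (those matching ^fluentbit_(input|output)_.*_total$) into deltas.

Because the converted values are no longer running totals, metricstransform then strips the _total suffix from their names. Strictly speaking, the regex ^(.*)_total$ matches every metric ending in _total, not only the converted ones. The other _total metrics (filter, routing, and logger) are not covered by metric_declarations, so they do not become CloudWatch metrics either way.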
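The idea behind cumulativetodelta plus the `_total` rename can be sketched in Python as follows. The sketch assumes that readings for a single series arrive in scrape order. The first reading only sets a baseline and yields no delta, which mirrors the CloudWatch Agent behavior quoted above. A decrease is treated as a counter reset, and the new reading becomes the delta; this follows the Prometheus convention and is an assumption of the sketch, not necessarily what the processor does. The `$${1}` in the YAML presumably becomes `${1}` (capture group 1) after the Collector's `$$` escape, which corresponds to `\1` here.

```python
import re


def cumulative_to_delta(values):
    """Convert successive cumulative counter readings into deltas.

    The first reading only sets the baseline and produces no delta.
    A reading lower than the previous one is treated as a counter reset
    (an assumption of this sketch), so the new reading itself is the delta.
    """
    deltas = []
    prev = None
    for v in values:
        if prev is not None:
            deltas.append(v if v < prev else v - prev)
        prev = v
    return deltas


def strip_total(metric_name: str) -> str:
    """Counterpart of include "^(.*)_total$" with new_name "${1}"."""
    return re.sub(r"^(.*)_total$", r"\1", metric_name)


print(cumulative_to_delta([24, 30, 30, 5]))
print(strip_total("fluentbit_output_retries_failed_total"))
```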

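Note that in this setup, Filter metrics such as fluentbit_filter_bytes_total and fluentbit_filter_records_total are not PUT to CloudWatch Metrics. If you need them, add them to the cumulativetodelta include pattern and to metric_name_selectors.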

Collecting the Fluent Bit metrics

Let's deploy the stack and check that the Fluent Bit metrics are being collected.

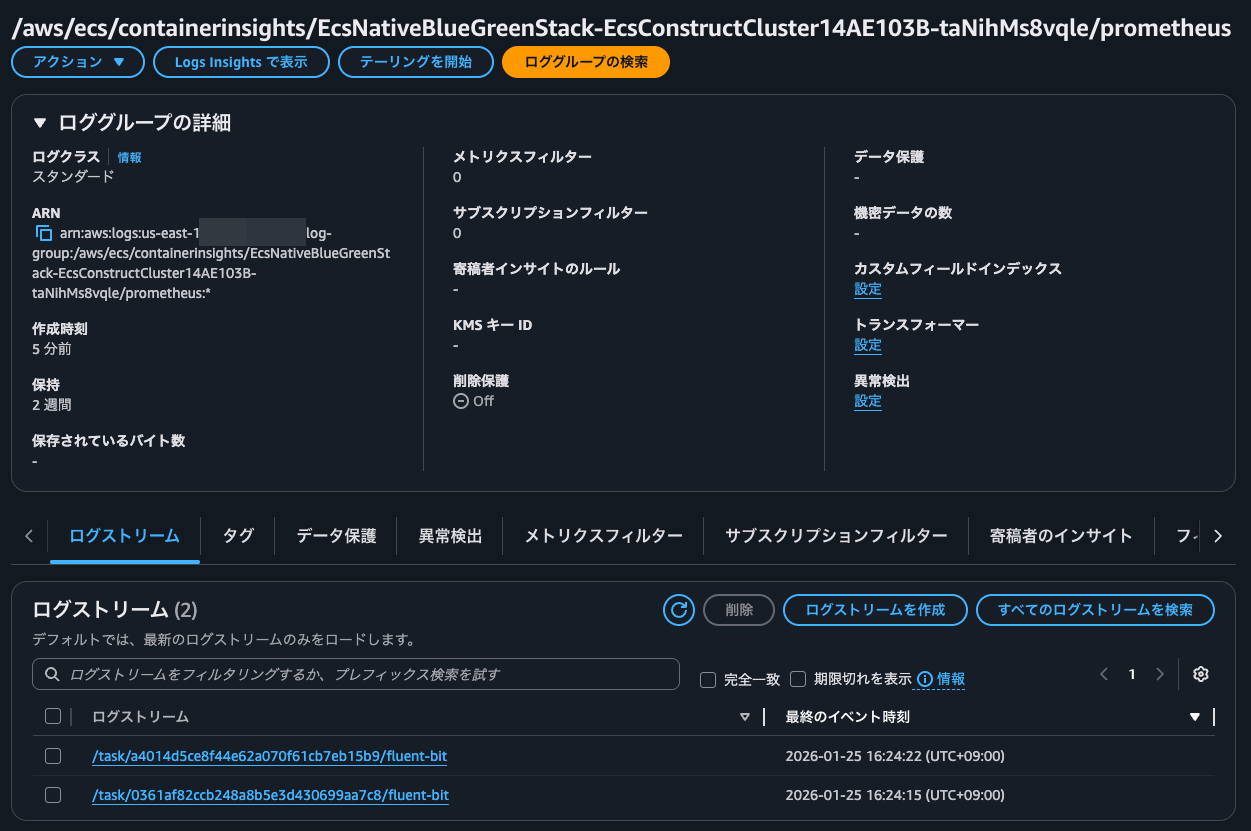

The screenshot above shows the log group that receives the ADOT Collector's own logs.

The ADOT Collector's actual logs are as follows:

2026/01/25 07:24:03 ADOT Collector version: v0.46.0
2026/01/25 07:24:08 found no extra config, skip it, err: open /opt/aws/aws-otel-collector/etc/extracfg.txt: no such file or directory
2026/01/25 07:24:08 attn: users of the `datadog`, `logzio`, `sapm`, `signalfx` exporter components. please refer to https://github.com/aws-observability/aws-otel-collector/issues/2734 in regards to an upcoming ADOT Collector breaking change
2026-01-25T07:24:08.771Z warn awsemfexporter@v0.141.0/emf_exporter.go:89 the default value for DimensionRollupOption will be changing to NoDimensionRollupin a future release. See https://github.com/open-telemetry/opentelemetry-collector-contrib/issues/23997 for moreinformation
{
  "resource": {
    "service.instance.id": "dbefa28f-de53-4e1c-a9fa-c91722e395c9",
    "service.name": "aws-otel-collector",
    "service.version": "v0.46.0"
  },
  "otelcol.component.id": "awsemf",
  "otelcol.component.kind": "exporter",
  "otelcol.signal": "metrics"
}
2026-01-25T07:24:08.848Z info memorylimiter@v0.141.0/memorylimiter.go:71 Memory limiter configured
{
  "resource": {
    "service.instance.id": "dbefa28f-de53-4e1c-a9fa-c91722e395c9",
    "service.name": "aws-otel-collector",
    "service.version": "v0.46.0"
  },
  "otelcol.component.kind": "processor",
  "limit_mib": 100,
  "spike_limit_mib": 25,
  "check_interval": 1
}
2026-01-25T07:24:08.855Z info service@v0.141.0/service.go:224 Starting aws-otel-collector...
{
  "resource": {
    "service.instance.id": "dbefa28f-de53-4e1c-a9fa-c91722e395c9",
    "service.name": "aws-otel-collector",
    "service.version": "v0.46.0"
  },
  "Version": "v0.46.0",
  "NumCPU": 2
}
2026-01-25T07:24:08.855Z info extensions/extensions.go:40 Starting extensions...
{
  "resource": {
    "service.instance.id": "dbefa28f-de53-4e1c-a9fa-c91722e395c9",
    "service.name": "aws-otel-collector",
    "service.version": "v0.46.0"
  }
}
2026-01-25T07:24:08.860Z info internal/resourcedetection.go:137 began detecting resource information
{
  "resource": {
    "service.instance.id": "dbefa28f-de53-4e1c-a9fa-c91722e395c9",
    "service.name": "aws-otel-collector",
    "service.version": "v0.46.0"
  },
  "otelcol.component.id": "resourcedetection",
  "otelcol.component.kind": "processor",
  "otelcol.pipeline.id": "metrics",
  "otelcol.signal": "metrics"
}
2026-01-25T07:24:09.052Z info internal/resourcedetection.go:188 detected resource information
{
  "resource": {
    "aws.ecs.cluster.arn": "arn:aws:ecs:us-east-1:<AWS account ID>:cluster/EcsNativeBlueGreenStack-EcsConstructCluster14AE103B-taNihMs8vqle",
    "aws.ecs.launchtype": "fargate",
    "aws.ecs.task.arn": "arn:aws:ecs:us-east-1:<AWS account ID>:task/EcsNativeBlueGreenStack-EcsConstructCluster14AE103B-taNihMs8vqle/0361af82ccb248a8b5e3d430699aa7c8",
    "aws.ecs.task.family": "EcsNativeBlueGreenStackEcsConstructTaskDefinitionF683F4B2",
    "aws.ecs.task.id": "0361af82ccb248a8b5e3d430699aa7c8",
    "aws.ecs.task.revision": "59",
    "aws.log.group.arns": [
      "arn:aws:logs:us-east-1:<AWS account ID>:log-group:EcsNativeBlueGreenStack-EcsConstructTaskDefinitionlogRouterLogGroup27EC9B3C-urWYrfdCIgIx"
    ],
    "aws.log.group.names": [
      "EcsNativeBlueGreenStack-EcsConstructTaskDefinitionlogRouterLogGroup27EC9B3C-urWYrfdCIgIx"
    ],
    "aws.log.stream.arns": [
      "arn:aws:logs:us-east-1:<AWS account ID>:log-group:EcsNativeBlueGreenStack-EcsConstructTaskDefinitionlogRouterLogGroup27EC9B3C-urWYrfdCIgIx:log-stream:firelens/EcsNativeBlueGreenStackEcsConstructTaskDefinitionF683F4B2/logRouter/0361af82ccb248a8b5e3d430699aa7c8"
    ],
    "aws.log.stream.names": [
      "firelens/EcsNativeBlueGreenStackEcsConstructTaskDefinitionF683F4B2/logRouter/0361af82ccb248a8b5e3d430699aa7c8"
    ],
    "cloud.account.id": "<AWS account ID>",
    "cloud.availability_zone": "us-east-1a",
    "cloud.platform": "aws_ecs",
    "cloud.provider": "aws",
    "cloud.region": "us-east-1"
  },
  "otelcol.component.id": "resourcedetection",
  "otelcol.component.kind": "processor",
  "otelcol.pipeline.id": "metrics",
  "otelcol.signal": "metrics"
}
2026-01-25T07:24:09.057Z info prometheusreceiver@v0.141.0/metrics_receiver.go:157 Starting discovery manager
{
  "resource": {
    "service.instance.id": "dbefa28f-de53-4e1c-a9fa-c91722e395c9",
    "service.name": "aws-otel-collector",
    "service.version": "v0.46.0"
  },
  "otelcol.component.id": "prometheus",
  "otelcol.component.kind": "receiver",
  "otelcol.signal": "metrics"
}
2026-01-25T07:24:09.058Z info targetallocator/manager.go:224 Scrape job added
{
  "resource": {
    "service.instance.id": "dbefa28f-de53-4e1c-a9fa-c91722e395c9",
    "service.name": "aws-otel-collector",
    "service.version": "v0.46.0"
  },
  "otelcol.component.id": "prometheus",
  "otelcol.component.kind": "receiver",
  "otelcol.signal": "metrics",
  "jobName": "fluent-bit-metrics"
}
2026-01-25T07:24:09.065Z info service@v0.141.0/service.go:247 Everything is ready. Begin running and processing data.
{
  "resource": {
    "service.instance.id": "dbefa28f-de53-4e1c-a9fa-c91722e395c9",
    "service.name": "aws-otel-collector",
    "service.version": "v0.46.0"
  }
}
2026-01-25T07:24:09.066Z info prometheusreceiver@v0.141.0/metrics_receiver.go:217 Starting scrape manager
{
  "resource": {
    "service.instance.id": "dbefa28f-de53-4e1c-a9fa-c91722e395c9",
    "service.name": "aws-otel-collector",
    "service.version": "v0.46.0"
  },
  "otelcol.component.id": "prometheus",
  "otelcol.component.kind": "receiver",
  "otelcol.signal": "metrics"
}

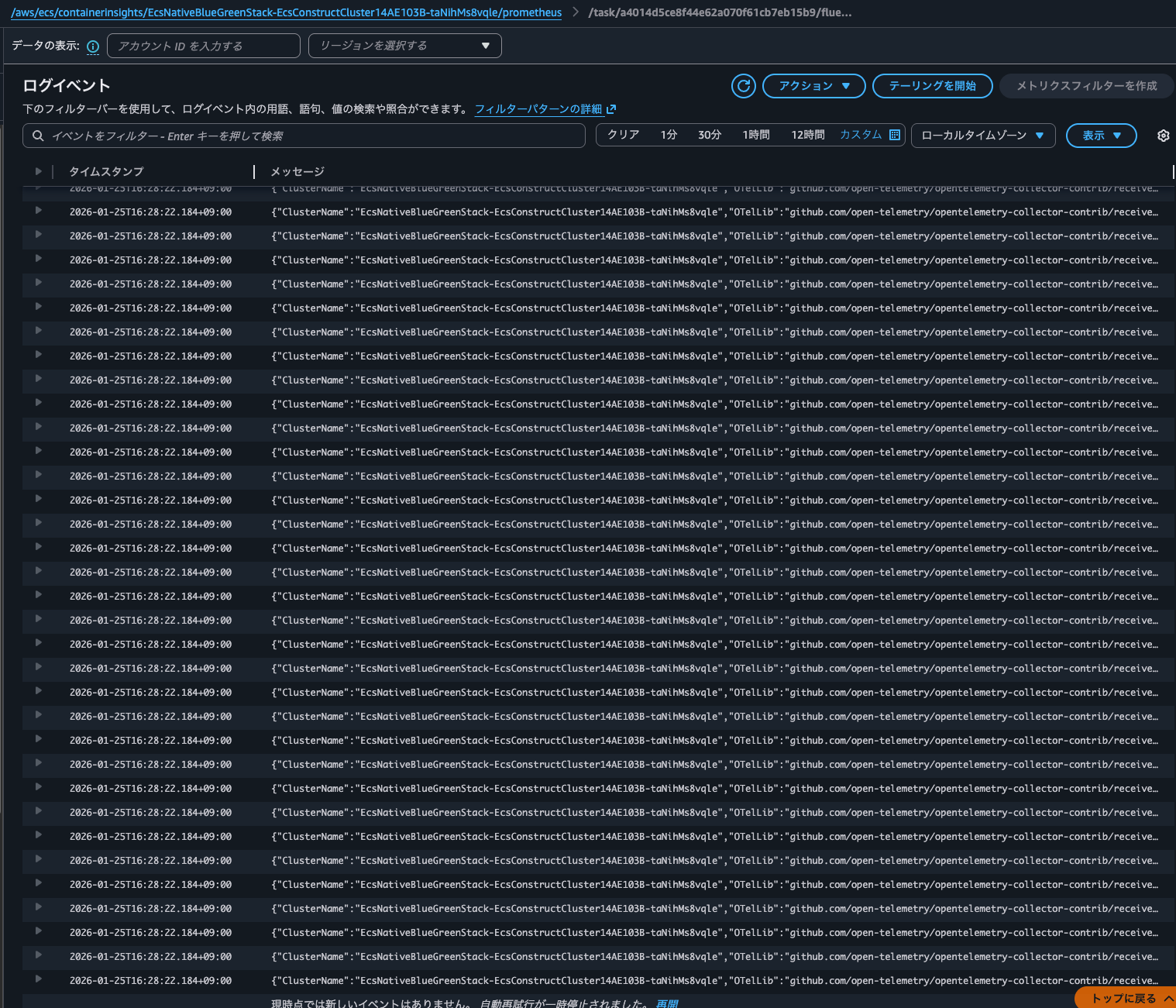

The screenshot above shows the log group that stores the Fluent Bit scrape results. Each ECS task gets its own log stream.
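The per-task log streams come from the `{TaskId}` placeholder in `log_stream_name`, and `{ClusterName}` in `log_group_name` works the same way. The awsemf exporter fills these placeholders from resource attributes, which is why the `resource` processor creates the `ClusterName` and `TaskId` attributes. The Python sketch below simplifies that substitution by assuming that a placeholder is replaced with the attribute of the same name. The exporter's real lookup rules and its handling of missing attributes may differ (see its README), and the `render_name` helper is hypothetical.

```python
import re

PLACEHOLDER_RE = re.compile(r"\{(\w+)\}")


def render_name(template: str, resource_attrs: dict) -> str:
    """Replace {Key} with resource_attrs["Key"]; unknown keys are left as-is here."""
    def repl(m):
        key = m.group(1)
        return str(resource_attrs.get(key, m.group(0)))

    return PLACEHOLDER_RE.sub(repl, template)


attrs = {
    "ClusterName": "EcsNativeBlueGreenStack-EcsConstructCluster14AE103B-taNihMs8vqle",
    "TaskId": "a4014d5ce8f44e62a070f61cb7eb15b9",
}
print(render_name("/aws/ecs/containerinsights/{ClusterName}/prometheus", attrs))
print(render_name("/task/{TaskId}/fluent-bit", attrs))
```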

In this test, 34 log events were written per minute. Narrowing down the scrape targets should reduce that number.

Here is an actual log event. It is written in EMF format.

2026-01-25T16:28:22.184+09:00
{
  "ClusterName": "EcsNativeBlueGreenStack-EcsConstructCluster14AE103B-taNihMs8vqle",
  "OTelLib": "github.com/open-telemetry/opentelemetry-collector-contrib/receiver/prometheusreceiver",
  "TaskDefinitionFamily": "EcsNativeBlueGreenStackEcsConstructTaskDefinitionF683F4B2",
  "TaskId": "a4014d5ce8f44e62a070f61cb7eb15b9",
  "Version": "1",
  "_aws": {
    "CloudWatchMetrics": [
      {
        "Namespace": "ECS/FluentBit",
        "Dimensions": [
          ["ClusterName", "TaskDefinitionFamily", "TaskId", "name"]
        ],
        "Metrics": [
          {"Name": "fluentbit_output_proc_bytes", "Unit": "", "StorageResolution": 60},
          {"Name": "fluentbit_output_retried_records", "Unit": "", "StorageResolution": 60},
          {"Name": "fluentbit_output_retries_failed", "Unit": "", "StorageResolution": 60},
          {"Name": "fluentbit_output_proc_records", "Unit": "", "StorageResolution": 60},
          {"Name": "fluentbit_output_retries", "Unit": "", "StorageResolution": 60},
          {"Name": "fluentbit_output_errors", "Unit": "", "StorageResolution": 60},
          {"Name": "fluentbit_output_dropped_records", "Unit": "", "StorageResolution": 60}
        ]
      }
    ],
    "Timestamp": 1769326102184
  },
  "aws.ecs.cluster.arn": "arn:aws:ecs:us-east-1:<AWS account ID>:cluster/EcsNativeBlueGreenStack-EcsConstructCluster14AE103B-taNihMs8vqle",
  "aws.ecs.launchtype": "fargate",
  "aws.ecs.task.family": "EcsNativeBlueGreenStackEcsConstructTaskDefinitionF683F4B2",
  "aws.ecs.task.id": "a4014d5ce8f44e62a070f61cb7eb15b9",
  "aws.ecs.task.revision": "59",
  "aws.log.group.names": "[\"EcsNativeBlueGreenStack-EcsConstructTaskDefinitionlogRouterLogGroup27EC9B3C-urWYrfdCIgIx\"]",
  "aws.log.stream.names": "[\"firelens/EcsNativeBlueGreenStackEcsConstructTaskDefinitionF683F4B2/logRouter/a4014d5ce8f44e62a070f61cb7eb15b9\"]",
  "cloud.account.id": "<AWS account ID>",
  "cloud.availability_zone": "us-east-1b",
  "cloud.platform": "aws_ecs",
  "cloud.provider": "aws",
  "cloud.region": "us-east-1",
  "fluentbit_output_dropped_records": 0,
  "fluentbit_output_errors": 0,
  "fluentbit_output_proc_bytes": 33565,
  "fluentbit_output_proc_records": 41,
  "fluentbit_output_retried_records": 0,
  "fluentbit_output_retries": 0,
  "fluentbit_output_retries_failed": 0,
  "name": "kinesis_firehose.1",
  "server.port": "2020",
  "service.instance.id": "127.0.0.1:2020",
  "service.name": "fluent-bit-metrics",
  "url.scheme": "http"
}

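Data points that do not match any metric_declarations entry are still written to the log group, but without the _aws metadata, so no EMF metrics are extracted from them: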

{
  "ClusterName": "EcsNativeBlueGreenStack-EcsConstructCluster14AE103B-taNihMs8vqle",
  "OTelLib": "github.com/open-telemetry/opentelemetry-collector-contrib/receiver/prometheusreceiver",
  "TaskDefinitionFamily": "EcsNativeBlueGreenStackEcsConstructTaskDefinitionF683F4B2",
  "TaskId": "a4014d5ce8f44e62a070f61cb7eb15b9",
  "aws.ecs.cluster.arn": "arn:aws:ecs:us-east-1:<AWS account ID>:cluster/EcsNativeBlueGreenStack-EcsConstructCluster14AE103B-taNihMs8vqle",
  "aws.ecs.launchtype": "fargate",
  "aws.ecs.task.family": "EcsNativeBlueGreenStackEcsConstructTaskDefinitionF683F4B2",
  "aws.ecs.task.id": "a4014d5ce8f44e62a070f61cb7eb15b9",
  "aws.ecs.task.revision": "59",
  "aws.log.group.names": "[\"EcsNativeBlueGreenStack-EcsConstructTaskDefinitionlogRouterLogGroup27EC9B3C-urWYrfdCIgIx\"]",
  "aws.log.stream.names": "[\"firelens/EcsNativeBlueGreenStackEcsConstructTaskDefinitionF683F4B2/logRouter/a4014d5ce8f44e62a070f61cb7eb15b9\"]",
  "cloud.account.id": "<AWS account ID>",
  "cloud.availability_zone": "us-east-1b",
  "cloud.platform": "aws_ecs",
  "cloud.provider": "aws",
  "cloud.region": "us-east-1",
  "fluentbit_output_latency_seconds": {
    "Max": 0,
    "Min": 0,
    "Count": 18,
    "Sum": 9.941164255142212
  },
  "input": "re_emitted",
  "output": "kinesis_firehose.1",
  "server.port": "2020",
  "service.instance.id": "127.0.0.1:2020",
  "service.name": "fluent-bit-metrics",
  "url.scheme": "http"
}
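The output above hints at how metric_declarations is applied. `fluentbit_output_latency_seconds` matches the `^fluentbit_output_.*` selector, but its labels are `input` and `output` rather than `name`. The `[ClusterName, TaskId, TaskDefinitionFamily, name]` dimension set therefore cannot be formed, and no `_aws` metadata is emitted. My reading of the awsemf exporter README is that a dimension set is used only when every dimension in it is present on the data point. The Python sketch below implements that selection rule under this assumption. `METRIC_DECLARATIONS` mirrors the config above, and `dimension_sets_for` is a hypothetical helper.

```python
import re

# Mirrors the awsemf metric_declarations in the config above
METRIC_DECLARATIONS = [
    {
        "dimensions": [["ClusterName", "TaskId", "TaskDefinitionFamily", "name"]],
        "selectors": [r"^fluentbit_input_.*", r"^fluentbit_output_.*"],
    },
    {
        "dimensions": [["ClusterName", "TaskId", "TaskDefinitionFamily"]],
        "selectors": [r"^fluentbit_storage_.*"],
    },
]


def dimension_sets_for(metric_name: str, labels: dict):
    """Return the dimension sets that would be emitted for this data point.

    An empty list means the data point is logged without EMF metadata.
    """
    result = []
    for decl in METRIC_DECLARATIONS:
        if not any(re.search(s, metric_name) for s in decl["selectors"]):
            continue
        for dims in decl["dimensions"]:
            # Assumption: a set is kept only if every dimension is present
            if all(d in labels for d in dims):
                result.append(dims)
    return result


base = {"ClusterName": "c", "TaskId": "t", "TaskDefinitionFamily": "f"}
print(dimension_sets_for("fluentbit_output_proc_records", {**base, "name": "kinesis_firehose.1"}))
print(dimension_sets_for("fluentbit_output_latency_seconds", {**base, "input": "re_emitted", "output": "kinesis_firehose.1"}))
```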

For reference, these are the Prometheus-format metrics that the Fluent Bit endpoint actually returns when queried with curl:

sh-5.2# curl localhost:2020/api/v2/metrics/prometheus
# HELP fluentbit_uptime Number of seconds that Fluent Bit has been running.
# TYPE fluentbit_uptime counter
fluentbit_uptime{hostname="ip-10-10-10-100.ec2.internal"} 2282
# HELP fluentbit_logger_logs_total Total number of logs
# TYPE fluentbit_logger_logs_total counter
fluentbit_logger_logs_total{message_type="error"} 0
fluentbit_logger_logs_total{message_type="warn"} 0
fluentbit_logger_logs_total{message_type="info"} 34
fluentbit_logger_logs_total{message_type="debug"} 0
fluentbit_logger_logs_total{message_type="trace"} 0
# HELP fluentbit_routing_logs_records_total Total log records routed from input to output
# TYPE fluentbit_routing_logs_records_total counter
fluentbit_routing_logs_records_total{input="emitter_for_multiline.2",output="kinesis_firehose.1"} 32
fluentbit_routing_logs_records_total{input="forward.1",output="kinesis_firehose.1"} 41
fluentbit_routing_logs_records_total{input="re_emitted",output="kinesis_firehose.1"} 60
fluentbit_routing_logs_records_total{input="re_emitted",output="cloudwatch_logs.2"} 60
# HELP fluentbit_routing_logs_bytes_total Total bytes routed from input to output (logs)
# TYPE fluentbit_routing_logs_bytes_total counter
fluentbit_routing_logs_bytes_total{input="emitter_for_multiline.2",output="kinesis_firehose.1"} 49649
fluentbit_routing_logs_bytes_total{input="forward.1",output="kinesis_firehose.1"} 22672
fluentbit_routing_logs_bytes_total{input="re_emitted",output="kinesis_firehose.1"} 78471
fluentbit_routing_logs_bytes_total{input="re_emitted",output="cloudwatch_logs.2"} 78471
# HELP fluentbit_input_bytes_total Number of input bytes.
# TYPE fluentbit_input_bytes_total counter
fluentbit_input_bytes_total{name="tcp.0"} 0
fluentbit_input_bytes_total{name="forward.1"} 283026
fluentbit_input_bytes_total{name="forward.2"} 0
fluentbit_input_bytes_total{name="emitter_for_multiline.1"} 0
fluentbit_input_bytes_total{name="emitter_for_multiline.2"} 74288
fluentbit_input_bytes_total{name="re_emitted"} 59533
# HELP fluentbit_input_records_total Number of input records.
# TYPE fluentbit_input_records_total counter
fluentbit_input_records_total{name="tcp.0"} 0
fluentbit_input_records_total{name="forward.1"} 1043
fluentbit_input_records_total{name="forward.2"} 0
fluentbit_input_records_total{name="emitter_for_multiline.1"} 0
fluentbit_input_records_total{name="emitter_for_multiline.2"} 52
fluentbit_input_records_total{name="re_emitted"} 60
# HELP fluentbit_input_ring_buffer_writes_total Number of ring buffer writes.
# TYPE fluentbit_input_ring_buffer_writes_total counter
fluentbit_input_ring_buffer_writes_total{name="tcp.0"} 0
fluentbit_input_ring_buffer_writes_total{name="forward.1"} 0
fluentbit_input_ring_buffer_writes_total{name="forward.2"} 0
fluentbit_input_ring_buffer_writes_total{name="emitter_for_multiline.1"} 0
fluentbit_input_ring_buffer_writes_total{name="emitter_for_multiline.2"} 0
fluentbit_input_ring_buffer_writes_total{name="re_emitted"} 0
# HELP fluentbit_input_ring_buffer_retries_total Number of ring buffer retries.
# TYPE fluentbit_input_ring_buffer_retries_total counter
fluentbit_input_ring_buffer_retries_total{name="tcp.0"} 0
fluentbit_input_ring_buffer_retries_total{name="forward.1"} 0
fluentbit_input_ring_buffer_retries_total{name="forward.2"} 0
fluentbit_input_ring_buffer_retries_total{name="emitter_for_multiline.1"} 0
fluentbit_input_ring_buffer_retries_total{name="emitter_for_multiline.2"} 0
fluentbit_input_ring_buffer_retries_total{name="re_emitted"} 0
# HELP fluentbit_input_ring_buffer_retry_failures_total Number of ring buffer retry failures.
# TYPE fluentbit_input_ring_buffer_retry_failures_total counter
fluentbit_input_ring_buffer_retry_failures_total{name="tcp.0"} 0
fluentbit_input_ring_buffer_retry_failures_total{name="forward.1"} 0
fluentbit_input_ring_buffer_retry_failures_total{name="forward.2"} 0
fluentbit_input_ring_buffer_retry_failures_total{name="emitter_for_multiline.1"} 0
fluentbit_input_ring_buffer_retry_failures_total{name="emitter_for_multiline.2"} 0
fluentbit_input_ring_buffer_retry_failures_total{name="re_emitted"} 0
# HELP fluentbit_filter_records_total Total number of new records processed.
# TYPE fluentbit_filter_records_total counter
fluentbit_filter_records_total{name="record_modifier.0"} 1155
fluentbit_filter_records_total{name="multiline.1"} 1155
fluentbit_filter_records_total{name="multiline.2"} 106
fluentbit_filter_records_total{name="parser.3"} 52
fluentbit_filter_records_total{name="parser.4"} 989
fluentbit_filter_records_total{name="grep.5"} 989
fluentbit_filter_records_total{name="rewrite_tag.6"} 133
# HELP fluentbit_filter_bytes_total Total number of new bytes processed.
# TYPE fluentbit_filter_bytes_total counter
fluentbit_filter_bytes_total{name="record_modifier.0"} 777425
fluentbit_filter_bytes_total{name="multiline.1"} 0
fluentbit_filter_bytes_total{name="multiline.2"} 0
fluentbit_filter_bytes_total{name="parser.3"} 84327
fluentbit_filter_bytes_total{name="parser.4"} 528767
fluentbit_filter_bytes_total{name="grep.5"} 0
fluentbit_filter_bytes_total{name="rewrite_tag.6"} 31363
# HELP fluentbit_filter_add_records_total Total number of new added records.
# TYPE fluentbit_filter_add_records_total counter
fluentbit_filter_add_records_total{name="record_modifier.0"} 0
fluentbit_filter_add_records_total{name="multiline.1"} 0
fluentbit_filter_add_records_total{name="multiline.2"} 0
fluentbit_filter_add_records_total{name="parser.3"} 0
fluentbit_filter_add_records_total{name="parser.4"} 0
fluentbit_filter_add_records_total{name="grep.5"} 0
fluentbit_filter_add_records_total{name="rewrite_tag.6"} 0
# HELP fluentbit_filter_drop_records_total Total number of dropped records.
# TYPE fluentbit_filter_drop_records_total counter
fluentbit_filter_drop_records_total{name="record_modifier.0"} 0
fluentbit_filter_drop_records_total{name="multiline.1"} 0
fluentbit_filter_drop_records_total{name="multiline.2"} 54
fluentbit_filter_drop_records_total{name="parser.3"} 0
fluentbit_filter_drop_records_total{name="parser.4"} 0
fluentbit_filter_drop_records_total{name="grep.5"} 908
fluentbit_filter_drop_records_total{name="rewrite_tag.6"} 60
# HELP fluentbit_filter_drop_bytes_total Total number of dropped bytes.
# TYPE fluentbit_filter_drop_bytes_total counter
fluentbit_filter_drop_bytes_total{name="record_modifier.0"} 0
fluentbit_filter_drop_bytes_total{name="multiline.1"} 0
fluentbit_filter_drop_bytes_total{name="multiline.2"} 58308
fluentbit_filter_drop_bytes_total{name="parser.3"} 0
fluentbit_filter_drop_bytes_total{name="parser.4"} 0
fluentbit_filter_drop_bytes_total{name="grep.5"} 201576
fluentbit_filter_drop_bytes_total{name="rewrite_tag.6"} 40233
# HELP fluentbit_filter_emit_records_total Total number of emitted records
# TYPE fluentbit_filter_emit_records_total counter
fluentbit_filter_emit_records_total{name="multiline.2"} 54
fluentbit_filter_emit_records_total{name="rewrite_tag.6"} 60
# HELP fluentbit_output_proc_records_total Number of processed output records.
# TYPE fluentbit_output_proc_records_total counter
fluentbit_output_proc_records_total{name="null.0"} 0
fluentbit_output_proc_records_total{name="kinesis_firehose.1"} 133
fluentbit_output_proc_records_total{name="cloudwatch_logs.2"} 60
# HELP fluentbit_output_proc_bytes_total Number of processed output bytes.
# TYPE fluentbit_output_proc_bytes_total counter
fluentbit_output_proc_bytes_total{name="null.0"} 0
fluentbit_output_proc_bytes_total{name="kinesis_firehose.1"} 150792
fluentbit_output_proc_bytes_total{name="cloudwatch_logs.2"} 78471
# HELP fluentbit_output_errors_total Number of output errors.
# TYPE fluentbit_output_errors_total counter
fluentbit_output_errors_total{name="null.0"} 0
fluentbit_output_errors_total{name="kinesis_firehose.1"} 0
fluentbit_output_errors_total{name="cloudwatch_logs.2"} 0
# HELP fluentbit_output_retries_total Number of output retries.
# TYPE fluentbit_output_retries_total counter
fluentbit_output_retries_total{name="null.0"} 0
fluentbit_output_retries_total{name="kinesis_firehose.1"} 0
fluentbit_output_retries_total{name="cloudwatch_logs.2"} 0
# HELP fluentbit_output_retries_failed_total Number of abandoned batches because the maximum number of re-tries was reached.
# TYPE fluentbit_output_retries_failed_total counter
fluentbit_output_retries_failed_total{name="null.0"} 0
fluentbit_output_retries_failed_total{name="kinesis_firehose.1"} 0
fluentbit_output_retries_failed_total{name="cloudwatch_logs.2"} 0
# HELP fluentbit_output_dropped_records_total Number of dropped records.
# TYPE fluentbit_output_dropped_records_total counter
fluentbit_output_dropped_records_total{name="null.0"} 0
fluentbit_output_dropped_records_total{name="kinesis_firehose.1"} 0
fluentbit_output_dropped_records_total{name="cloudwatch_logs.2"} 0
# HELP fluentbit_output_retried_records_total Number of retried records.
# TYPE fluentbit_output_retried_records_total counter
fluentbit_output_retried_records_total{name="null.0"} 0
fluentbit_output_retried_records_total{name="kinesis_firehose.1"} 0
fluentbit_output_retried_records_total{name="cloudwatch_logs.2"} 0
# HELP fluentbit_process_start_time_seconds Start time of the process since unix epoch in seconds.
# TYPE fluentbit_process_start_time_seconds gauge
fluentbit_process_start_time_seconds{hostname="ip-10-10-10-100.ec2.internal"} 1769325840
# HELP fluentbit_build_info Build version information.
# TYPE fluentbit_build_info gauge
fluentbit_build_info{hostname="ip-10-10-10-100.ec2.internal",version="4.2.2",os="linux"} 1769325840
# HELP fluentbit_hot_reloaded_times Collect the count of hot reloaded times.
# TYPE fluentbit_hot_reloaded_times gauge
fluentbit_hot_reloaded_times{hostname="ip-10-10-10-100.ec2.internal"} 0
# HELP fluentbit_storage_chunks Total number of chunks in the storage layer.
# TYPE fluentbit_storage_chunks gauge
fluentbit_storage_chunks 0
# HELP fluentbit_storage_mem_chunks Total number of memory chunks.
# TYPE fluentbit_storage_mem_chunks gauge
fluentbit_storage_mem_chunks 0
# HELP fluentbit_storage_fs_chunks Total number of filesystem chunks.
# TYPE fluentbit_storage_fs_chunks gauge
fluentbit_storage_fs_chunks 0
# HELP fluentbit_storage_fs_chunks_up Total number of filesystem chunks up in memory.
# TYPE fluentbit_storage_fs_chunks_up gauge
fluentbit_storage_fs_chunks_up 0
# HELP fluentbit_storage_fs_chunks_down Total number of filesystem chunks down.
# TYPE fluentbit_storage_fs_chunks_down gauge
fluentbit_storage_fs_chunks_down 0
# HELP fluentbit_input_ingestion_paused Is the input paused or not?
# TYPE fluentbit_input_ingestion_paused gauge
fluentbit_input_ingestion_paused{name="tcp.0"} 0
fluentbit_input_ingestion_paused{name="forward.1"} 0
fluentbit_input_ingestion_paused{name="forward.2"} 0
fluentbit_input_ingestion_paused{name="emitter_for_multiline.1"} 0
fluentbit_input_ingestion_paused{name="emitter_for_multiline.2"} 0
fluentbit_input_ingestion_paused{name="re_emitted"} 0
# HELP fluentbit_input_storage_overlimit Is the input memory usage overlimit ?.
# TYPE fluentbit_input_storage_overlimit gauge
fluentbit_input_storage_overlimit{name="tcp.0"} 0
fluentbit_input_storage_overlimit{name="forward.1"} 0
fluentbit_input_storage_overlimit{name="forward.2"} 0
fluentbit_input_storage_overlimit{name="emitter_for_multiline.1"} 0
fluentbit_input_storage_overlimit{name="emitter_for_multiline.2"} 0
fluentbit_input_storage_overlimit{name="re_emitted"} 0
# HELP fluentbit_input_storage_memory_bytes Memory bytes used by the chunks.
# TYPE fluentbit_input_storage_memory_bytes gauge
fluentbit_input_storage_memory_bytes{name="tcp.0"} 0
fluentbit_input_storage_memory_bytes{name="forward.1"} 0
fluentbit_input_storage_memory_bytes{name="forward.2"} 0
fluentbit_input_storage_memory_bytes{name="emitter_for_multiline.1"} 0
fluentbit_input_storage_memory_bytes{name="emitter_for_multiline.2"} 0
fluentbit_input_storage_memory_bytes{name="re_emitted"} 0
# HELP fluentbit_input_storage_chunks Total number of chunks.
# TYPE fluentbit_input_storage_chunks gauge
fluentbit_input_storage_chunks{name="tcp.0"} 0
fluentbit_input_storage_chunks{name="forward.1"} 0
fluentbit_input_storage_chunks{name="forward.2"} 0
fluentbit_input_storage_chunks{name="emitter_for_multiline.1"} 0
fluentbit_input_storage_chunks{name="emitter_for_multiline.2"} 0
fluentbit_input_storage_chunks{name="re_emitted"} 0
# HELP fluentbit_input_storage_chunks_up Total number of chunks up in memory.
# TYPE fluentbit_input_storage_chunks_up gauge
fluentbit_input_storage_chunks_up{name="tcp.0"} 0
fluentbit_input_storage_chunks_up{name="forward.1"} 0
fluentbit_input_storage_chunks_up{name="forward.2"} 0
fluentbit_input_storage_chunks_up{name="emitter_for_multiline.1"} 0
fluentbit_input_storage_chunks_up{name="emitter_for_multiline.2"} 0
fluentbit_input_storage_chunks_up{name="re_emitted"} 0
# HELP fluentbit_input_storage_chunks_down Total number of chunks down.
# TYPE fluentbit_input_storage_chunks_down gauge
fluentbit_input_storage_chunks_down{name="tcp.0"} 0
fluentbit_input_storage_chunks_down{name="forward.1"} 0
fluentbit_input_storage_chunks_down{name="forward.2"} 0
fluentbit_input_storage_chunks_down{name="emitter_for_multiline.1"} 0
fluentbit_input_storage_chunks_down{name="emitter_for_multiline.2"} 0
fluentbit_input_storage_chunks_down{name="re_emitted"} 0
# HELP fluentbit_input_storage_chunks_busy Total number of chunks in a busy state.
# TYPE fluentbit_input_storage_chunks_busy gauge
fluentbit_input_storage_chunks_busy{name="tcp.0"} 0
fluentbit_input_storage_chunks_busy{name="forward.1"} 0
fluentbit_input_storage_chunks_busy{name="forward.2"} 0
fluentbit_input_storage_chunks_busy{name="emitter_for_multiline.1"} 0
fluentbit_input_storage_chunks_busy{name="emitter_for_multiline.2"} 0
fluentbit_input_storage_chunks_busy{name="re_emitted"} 0
# HELP fluentbit_input_storage_chunks_busy_bytes Total number of bytes used by chunks in a busy state.
# TYPE fluentbit_input_storage_chunks_busy_bytes gauge
fluentbit_input_storage_chunks_busy_bytes{name="tcp.0"} 0
fluentbit_input_storage_chunks_busy_bytes{name="forward.1"} 0
fluentbit_input_storage_chunks_busy_bytes{name="forward.2"} 0
fluentbit_input_storage_chunks_busy_bytes{name="emitter_for_multiline.1"} 0
fluentbit_input_storage_chunks_busy_bytes{name="emitter_for_multiline.2"} 0
fluentbit_input_storage_chunks_busy_bytes{name="re_emitted"} 0
# HELP fluentbit_output_upstream_total_connections Total Connection count.
# TYPE fluentbit_output_upstream_total_connections gauge
fluentbit_output_upstream_total_connections{name="null.0"} 0
fluentbit_output_upstream_total_connections{name="kinesis_firehose.1"} 0
fluentbit_output_upstream_total_connections{name="cloudwatch_logs.2"} 0
# HELP fluentbit_output_upstream_busy_connections Busy Connection count.
# TYPE fluentbit_output_upstream_busy_connections gauge
fluentbit_output_upstream_busy_connections{name="null.0"} 0
fluentbit_output_upstream_busy_connections{name="kinesis_firehose.1"} 0
fluentbit_output_upstream_busy_connections{name="cloudwatch_logs.2"} 0
# HELP fluentbit_output_chunk_available_capacity_percent Available chunk capacity (percent)
# TYPE fluentbit_output_chunk_available_capacity_percent gauge
fluentbit_output_chunk_available_capacity_percent{name="null.0"} 100
fluentbit_output_chunk_available_capacity_percent{name="kinesis_firehose.1"} 100
fluentbit_output_chunk_available_capacity_percent{name="cloudwatch_logs.2"} 100
# HELP fluentbit_output_latency_seconds End-to-end latency in seconds
# TYPE fluentbit_output_latency_seconds histogram
fluentbit_output_latency_seconds_bucket{le="0.5",input="emitter_for_multiline.2",output="kinesis_firehose.1"} 7
fluentbit_output_latency_seconds_bucket{le="1.0",input="emitter_for_multiline.2",output="kinesis_firehose.1"} 12
fluentbit_output_latency_seconds_bucket{le="1.5",input="emitter_for_multiline.2",output="kinesis_firehose.1"} 21
fluentbit_output_latency_seconds_bucket{le="2.5",input="emitter_for_multiline.2",output="kinesis_firehose.1"} 21
fluentbit_output_latency_seconds_bucket{le="5.0",input="emitter_for_multiline.2",output="kinesis_firehose.1"} 21
fluentbit_output_latency_seconds_bucket{le="10.0",input="emitter_for_multiline.2",output="kinesis_firehose.1"} 21
fluentbit_output_latency_seconds_bucket{le="20.0",input="emitter_for_multiline.2",output="kinesis_firehose.1"} 21
fluentbit_output_latency_seconds_bucket{le="30.0",input="emitter_for_multiline.2",output="kinesis_firehose.1"} 21

fluentbit_output_latency_seconds_bucket{le="+Inf",input="emitter_for_multiline.2",output="kinesis_firehose.1"} 21

fluentbit_output_latency_seconds_sum{input="emitter_for_multiline.2",output="kinesis_firehose.1"} 14.462409257888794

fluentbit_output_latency_seconds_count{input="emitter_for_multiline.2",output="kinesis_firehose.1"} 21

fluentbit_output_latency_seconds_bucket{le="0.5",input="forward.1",output="kinesis_firehose.1"} 15

fluentbit_output_latency_seconds_bucket{le="1.0",input="forward.1",output="kinesis_firehose.1"} 31

fluentbit_output_latency_seconds_bucket{le="1.5",input="forward.1",output="kinesis_firehose.1"} 32

fluentbit_output_latency_seconds_bucket{le="2.5",input="forward.1",output="kinesis_firehose.1"} 32

fluentbit_output_latency_seconds_bucket{le="5.0",input="forward.1",output="kinesis_firehose.1"} 32

fluentbit_output_latency_seconds_bucket{le="10.0",input="forward.1",output="kinesis_firehose.1"} 32

fluentbit_output_latency_seconds_bucket{le="20.0",input="forward.1",output="kinesis_firehose.1"} 32

fluentbit_output_latency_seconds_bucket{le="30.0",input="forward.1",output="kinesis_firehose.1"} 32

fluentbit_output_latency_seconds_bucket{le="+Inf",input="forward.1",output="kinesis_firehose.1"} 32

fluentbit_output_latency_seconds_sum{input="forward.1",output="kinesis_firehose.1"} 17.994272232055664

fluentbit_output_latency_seconds_count{input="forward.1",output="kinesis_firehose.1"} 32

fluentbit_output_latency_seconds_bucket{le="0.5",input="re_emitted",output="kinesis_firehose.1"} 19

fluentbit_output_latency_seconds_bucket{le="1.0",input="re_emitted",output="kinesis_firehose.1"} 38

fluentbit_output_latency_seconds_bucket{le="1.5",input="re_emitted",output="kinesis_firehose.1"} 43

fluentbit_output_latency_seconds_bucket{le="2.5",input="re_emitted",output="kinesis_firehose.1"} 43

fluentbit_output_latency_seconds_bucket{le="5.0",input="re_emitted",output="kinesis_firehose.1"} 43

fluentbit_output_latency_seconds_bucket{le="10.0",input="re_emitted",output="kinesis_firehose.1"} 43

fluentbit_output_latency_seconds_bucket{le="20.0",input="re_emitted",output="kinesis_firehose.1"} 43

fluentbit_output_latency_seconds_bucket{le="30.0",input="re_emitted",output="kinesis_firehose.1"} 43

fluentbit_output_latency_seconds_bucket{le="+Inf",input="re_emitted",output="kinesis_firehose.1"} 43

fluentbit_output_latency_seconds_sum{input="re_emitted",output="kinesis_firehose.1"} 23.2548668384552

fluentbit_output_latency_seconds_count{input="re_emitted",output="kinesis_firehose.1"} 43

fluentbit_output_latency_seconds_bucket{le="0.5",input="re_emitted",output="cloudwatch_logs.2"} 20

fluentbit_output_latency_seconds_bucket{le="1.0",input="re_emitted",output="cloudwatch_logs.2"} 38

fluentbit_output_latency_seconds_bucket{le="1.5",input="re_emitted",output="cloudwatch_logs.2"} 43

fluentbit_output_latency_seconds_bucket{le="2.5",input="re_emitted",output="cloudwatch_logs.2"} 43

fluentbit_output_latency_seconds_bucket{le="5.0",input="re_emitted",output="cloudwatch_logs.2"} 43

fluentbit_output_latency_seconds_bucket{le="10.0",input="re_emitted",output="cloudwatch_logs.2"} 43

fluentbit_output_latency_seconds_bucket{le="20.0",input="re_emitted",output="cloudwatch_logs.2"} 43

fluentbit_output_latency_seconds_bucket{le="30.0",input="re_emitted",output="cloudwatch_logs.2"} 43

fluentbit_output_latency_seconds_bucket{le="+Inf",input="re_emitted",output="cloudwatch_logs.2"} 43

fluentbit_output_latency_seconds_sum{input="re_emitted",output="cloudwatch_logs.2"} 23.37363862991333

fluentbit_output_latency_seconds_count{input="re_emitted",output="cloudwatch_logs.2"} 43

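The trailing number in name values such as kinesis_firehose.1, cloudwatch_logs.2 and forward.1 is a sequence number assigned separately within the [INPUT], [FILTER] and [OUTPUT] sections, in the order the plugins are defined.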

kinesis_firehose.1 is the second [OUTPUT] plugin, so it gets the suffix 1. cloudwatch_logs.2 is the third [OUTPUT] plugin, so it gets the suffix 2 (numbering starts at 0).

So where is the first one? It is null.0, which is defined in the default configuration file /fluent-bit/etc/fluent-bit.conf.

sh-5.2# cat /fluent-bit/etc/fluent-bit.conf

[INPUT]
    Name tcp
    Listen 127.0.0.1
    Port 8877
    Tag firelens-healthcheck

[INPUT]
    Name forward
    Mem_Buf_Limit 25MB
    unix_path /var/run/fluent.sock

[INPUT]
    Name forward
    Listen 127.0.0.1
    Port 24224

[FILTER]
    Name record_modifier
    Match *
    Record ecs_cluster EcsNativeBlueGreenStack-EcsConstructCluster14AE103B-taNihMs8vqle
    Record ecs_task_arn arn:aws:ecs:us-east-1:<AWS account ID>:task/EcsNativeBlueGreenStack-EcsConstructCluster14AE103B-taNihMs8vqle/a4014d5ce8f44e62a070f61cb7eb15b9
    Record ecs_task_definition EcsNativeBlueGreenStackEcsConstructTaskDefinitionF683F4B2:59

[OUTPUT]
    Name null
    Match firelens-healthcheck

In other words, the following name values exist by default:

- tcp.0

- forward.1

- forward.2

- record_modifier.0

- null.0
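The numbering rule can be checked mechanically. The Python sketch below is a simplified, hypothetical parser (instance_names is not part of Fluent Bit). It assumes one 0-based counter per section type in definition order, and that Alias, covered below, replaces the generated name while still consuming a sequence number. Applied to a trimmed copy of the default configuration above plus two user-defined outputs, it reproduces the names seen in the metrics.

```python
import re

# Matches a section header such as "[INPUT]" or "[SERVICE]".
SECTION_RE = re.compile(r"^\[([A-Za-z_]+)\]$")
PIPELINE_SECTIONS = ("INPUT", "FILTER", "OUTPUT")


def instance_names(config_text: str) -> dict[str, list[str]]:
    """Derive "<plugin>.<seq>" names from a classic-format config.

    Assumptions (this is not Fluent Bit's real parser): one 0-based counter
    per section type in definition order; Alias replaces the generated name
    but still consumes a sequence number.
    """
    counters = {s: 0 for s in PIPELINE_SECTIONS}
    names: dict[str, list[str]] = {s: [] for s in PIPELINE_SECTIONS}
    current = None  # (section type, properties) of the section being read

    def close_current() -> None:
        if current is None:
            return
        section, props = current
        seq = counters[section]
        counters[section] += 1
        names[section].append(props.get("alias") or f"{props['name']}.{seq}")

    for raw in config_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        header = SECTION_RE.match(line)
        if header:
            close_current()
            section = header.group(1).upper()
            # Non-pipeline sections such as [SERVICE] are ignored
            current = (section, {}) if section in PIPELINE_SECTIONS else None
        elif current is not None:
            parts = line.split(None, 1)
            current[1][parts[0].lower()] = parts[1] if len(parts) > 1 else ""
    close_current()
    return names


DEFAULT_CONF = """
[INPUT]
    Name tcp
    Tag firelens-healthcheck
[INPUT]
    Name forward
    unix_path /var/run/fluent.sock
[INPUT]
    Name forward
    Port 24224
[FILTER]
    Name record_modifier
    Match *
[OUTPUT]
    Name null
    Match firelens-healthcheck
"""

# Hypothetical user outputs appended after the defaults
USER_OUTPUTS = """
[OUTPUT]
    Name kinesis_firehose
    Match *-firelens-*
[OUTPUT]
    Name cloudwatch_logs
    Match *-firelens-*
"""

print(instance_names(DEFAULT_CONF))
# {'INPUT': ['tcp.0', 'forward.1', 'forward.2'], 'FILTER': ['record_modifier.0'], 'OUTPUT': ['null.0']}
print(instance_names(DEFAULT_CONF + USER_OUTPUTS)["OUTPUT"])
# ['null.0', 'kinesis_firehose.1', 'cloudwatch_logs.2']
```

Because the counter is kept per section type rather than per plugin, the two forward inputs become forward.1 and forward.2 instead of forward.0 and forward.1.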

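If you collect logs only from stdout/stderr, you can exclude the forward.2 metrics: container stdout/stderr arrives through forward.1 on the Unix socket /var/run/fluent.sock, while forward.2 listens on TCP port 24224. If you don't use the FireLens health check, you can also exclude the tcp.0 metrics. null.0 and record_modifier.0 are probably safe to exclude as well.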
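In the collector itself, this kind of exclusion would normally happen at scrape time, for example with a Prometheus metric_relabel_configs rule using action: drop on the name label (the ADOT Collector's prometheus receiver accepts standard Prometheus scrape configs). The Python sketch below only illustrates the filtering logic on the exposition text. The exclusion list and drop_excluded are hypothetical and should be adjusted to your own pipeline.

```python
import re

# Hypothetical exclusion list based on the FireLens defaults discussed above.
EXCLUDED_NAMES = frozenset({"tcp.0", "forward.2", "null.0", "record_modifier.0"})

# Captures the value of a name="..." label. Series without one, such as the
# fluentbit_output_latency_seconds histogram (labelled by input/output), are kept.
NAME_LABEL_RE = re.compile(r'[{,]name="([^"]*)"')


def drop_excluded(exposition: str, excluded=EXCLUDED_NAMES) -> list[str]:
    """Return the non-blank exposition lines whose name label is not excluded."""
    kept = []
    for line in exposition.splitlines():
        if not line.strip():
            continue  # skip blank lines
        match = NAME_LABEL_RE.search(line)
        if match and match.group(1) in excluded:
            continue  # drop series belonging to an excluded plugin instance
        kept.append(line)
    return kept


sample = """\
# TYPE fluentbit_input_storage_chunks_up gauge
fluentbit_input_storage_chunks_up{name="tcp.0"} 0
fluentbit_input_storage_chunks_up{name="forward.1"} 0
fluentbit_input_storage_chunks_up{name="forward.2"} 0
fluentbit_output_latency_seconds_count{input="forward.1",output="kinesis_firehose.1"} 32
"""

for line in drop_excluded(sample):
    print(line)
```

Matching on a preceding "{" or "," keeps labels such as hostname="..." from being mistaken for name="...".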

If the trailing numbers on names such as kinesis_firehose.1 and cloudwatch_logs.2 bother you, set Alias:

[OUTPUT]
    Name kinesis_firehose
    Alias all_logs
    Match *-firelens-*
    delivery_stream ${FIREHOSE_DELIVERY_STREAM_NAME}
    region ${AWS_REGION}
    time_key datetime
    time_key_format %Y-%m-%dT%H:%M:%S.%3NZ

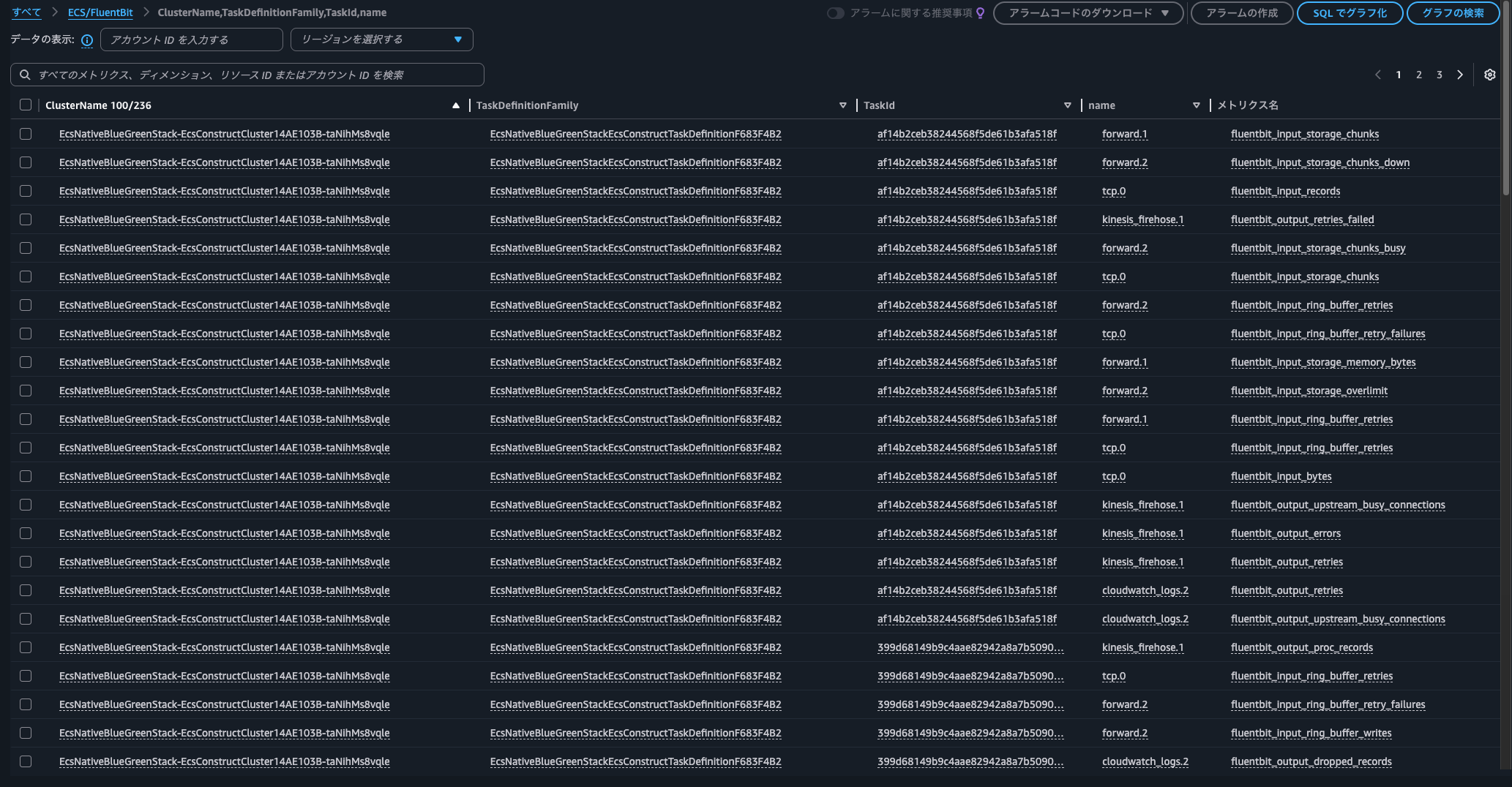

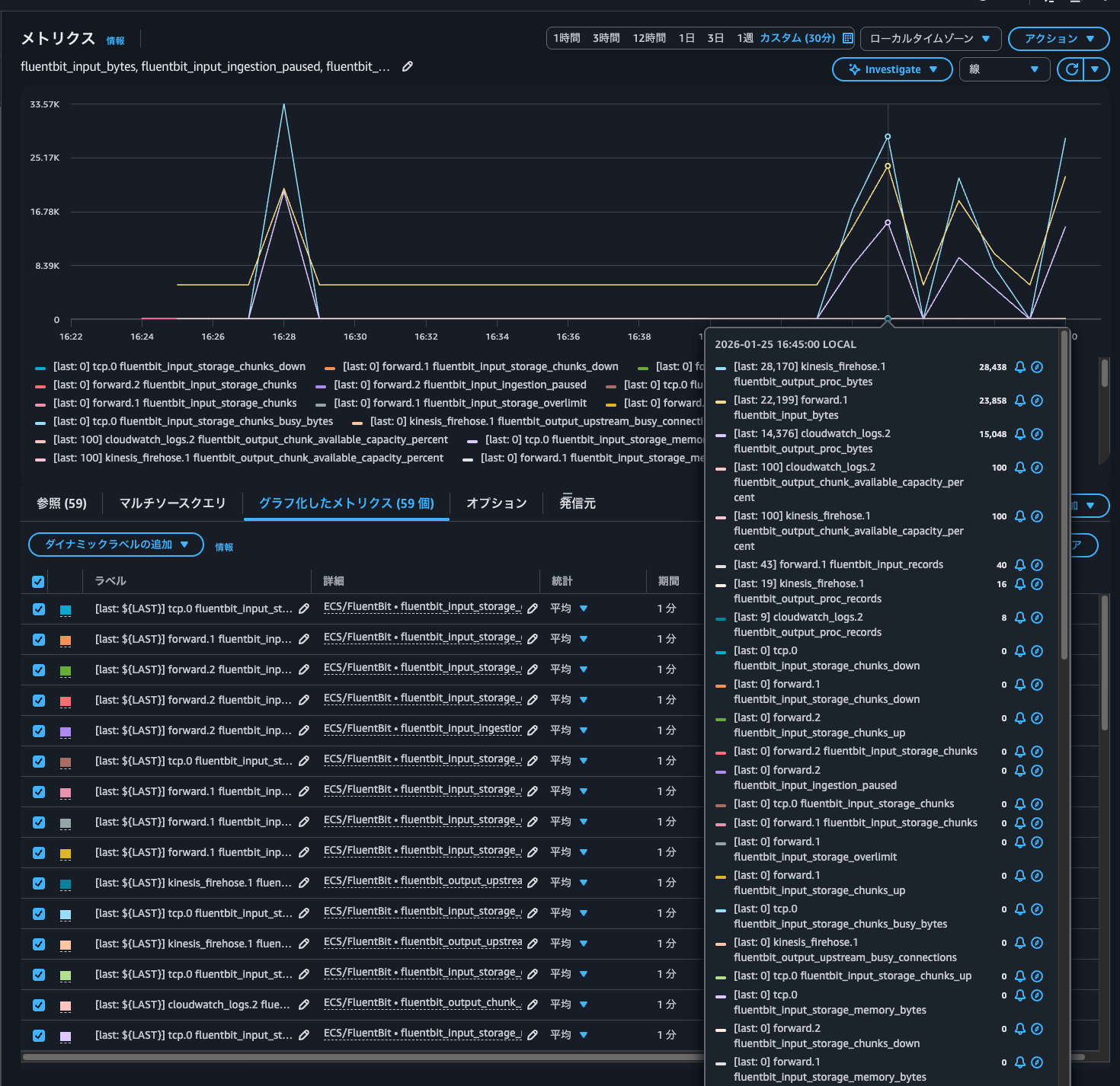

Let's check the CloudWatch metrics in the AWS Management Console.

A custom namespace named ECS/FluentBit has been created.

Looking at the ClusterName,TaskDefinitionFamily,TaskId,name dimension set, we can see that the various metrics have been PUT.

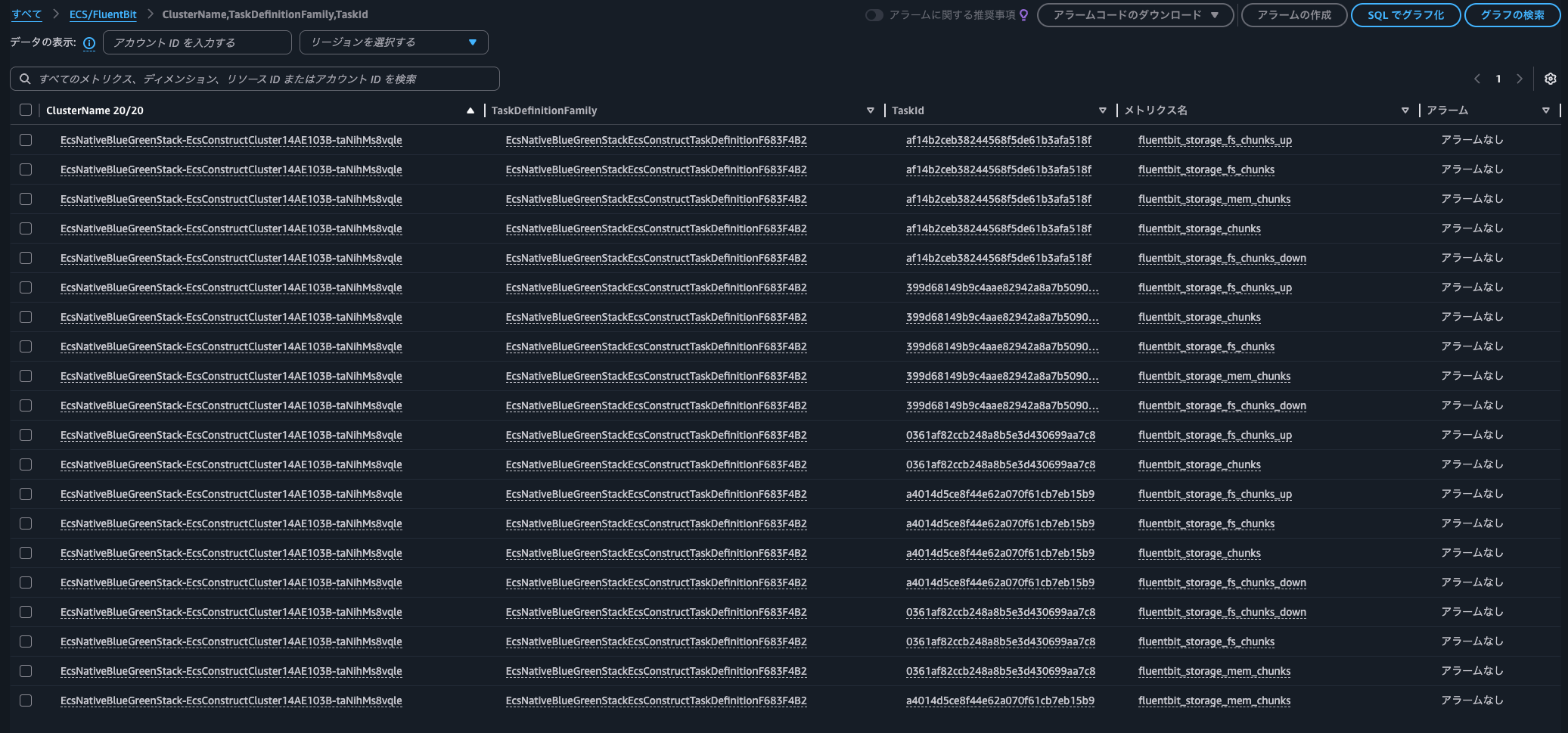

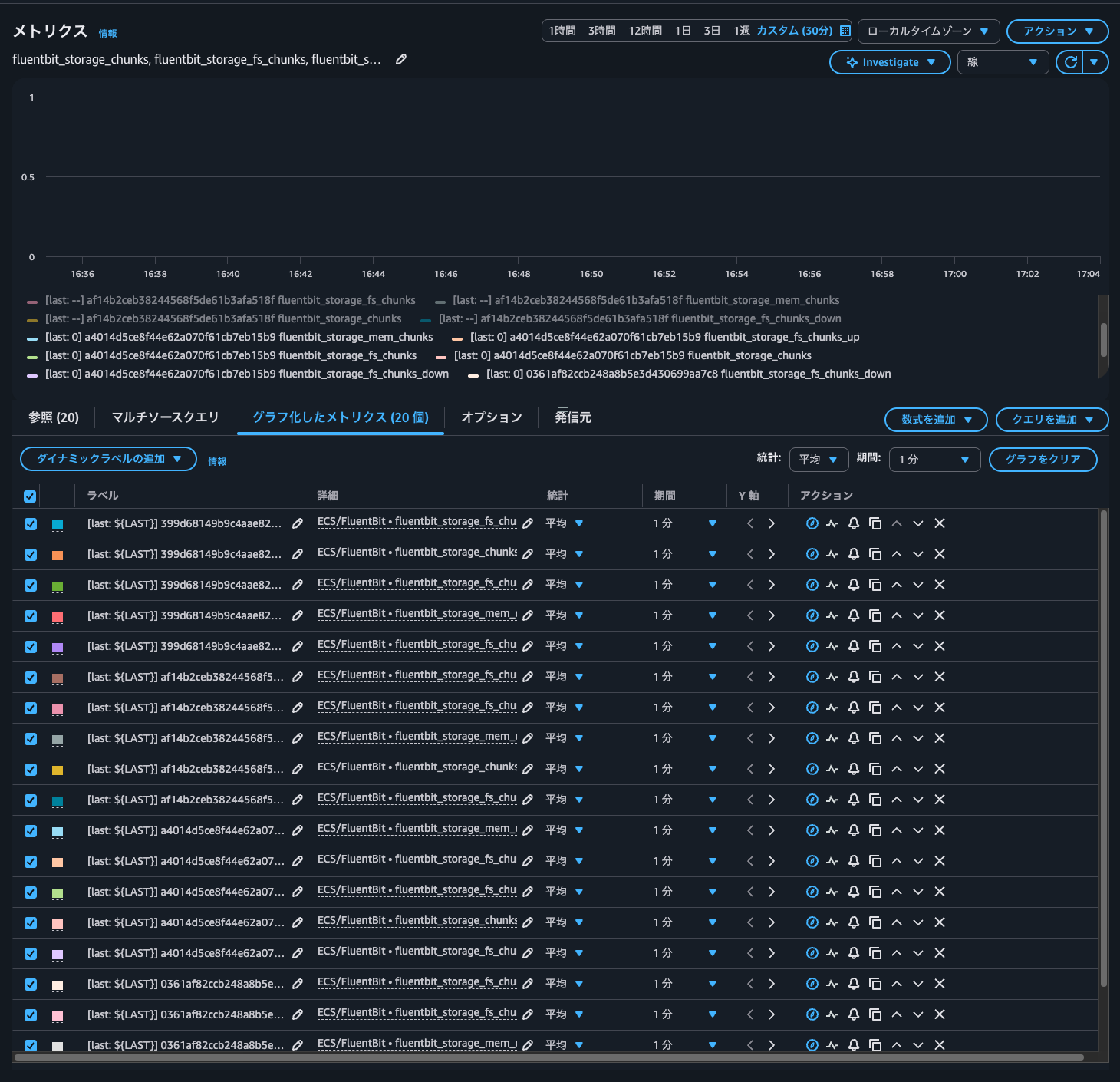

The same is true for the ClusterName,TaskDefinitionFamily,TaskId dimension set.
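To make the two dimension sets concrete, here is a hedged sketch of what one CloudWatch Embedded Metric Format (EMF) event carrying a Fluent Bit metric with these dimensions might look like. It assumes ClusterName, TaskDefinitionFamily and TaskId have already been attached as labels by the collector pipeline. emf_event and task_id_from_arn are hypothetical helpers, the metric value is a sample, and the exact fields written by the ADOT Collector's EMF exporter may differ.

```python
import json

# Illustrative EMF event builder, not the exporter's actual code.
# Assumes the three base labels were attached upstream.
BASE_DIMS = ["ClusterName", "TaskDefinitionFamily", "TaskId"]


def task_id_from_arn(task_arn: str) -> str:
    # ECS task ARNs end with ".../task/<cluster name>/<task ID>"
    return task_arn.rsplit("/", 1)[-1]


def emf_event(namespace: str, metric: str, value: float,
              labels: dict[str, str], timestamp_ms: int) -> dict:
    dimension_sets = [BASE_DIMS]
    if "name" in labels:
        # Every key in a dimension set must be present in the event,
        # so only series with a name label can use the 4-key set.
        dimension_sets = [BASE_DIMS + ["name"], BASE_DIMS]
    return {
        "_aws": {
            "Timestamp": timestamp_ms,
            "CloudWatchMetrics": [{
                "Namespace": namespace,
                "Dimensions": dimension_sets,
                "Metrics": [{"Name": metric}],
            }],
        },
        **labels,          # dimension values as top-level members
        metric: value,     # the metric value itself
    }


# The account ID below is a placeholder.
arn = ("arn:aws:ecs:us-east-1:123456789012:task/"
       "EcsNativeBlueGreenStack-EcsConstructCluster14AE103B-taNihMs8vqle/"
       "a4014d5ce8f44e62a070f61cb7eb15b9")
labels = {
    "ClusterName": "EcsNativeBlueGreenStack-EcsConstructCluster14AE103B-taNihMs8vqle",
    "TaskDefinitionFamily": "EcsNativeBlueGreenStackEcsConstructTaskDefinitionF683F4B2",
    "TaskId": task_id_from_arn(arn),
    "name": "cloudwatch_logs.2",
}
# Sample value and timestamp, for illustration only
print(json.dumps(emf_event("ECS/FluentBit", "fluentbit_output_proc_records_total",
                           43, labels, 1769069293739), indent=2))
```

Because EMF requires every key in a dimension set to be present in the event, series that have no name label can only be published under the three-key set.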

Next, I accessed the ALB several times to generate log output and then checked the metrics again.

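The metrics fluctuate whenever logs are output. On the other hand, gauge-type metrics such as fluentbit_input_storage_memory_bytes and fluentbit_storage_chunks stayed at 0. I suspect this is because Flush 1 flushes the buffer every second, so the chunks have already been released by the time the metrics are scraped.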

Possibly for the same reason, the metrics under the ClusterName,TaskDefinitionFamily,TaskId dimension set did not change either.

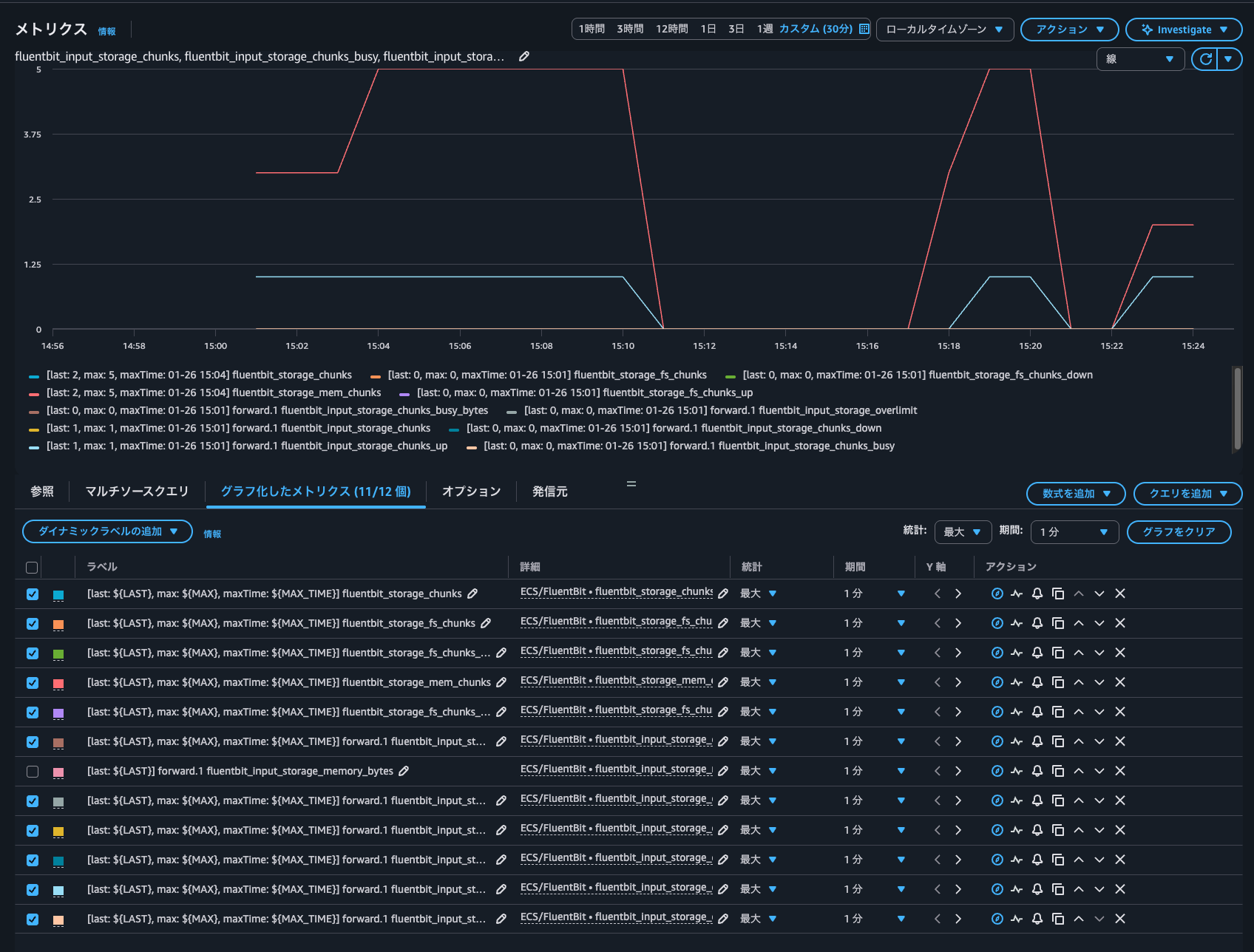

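As a test, I changed the setting to Flush 600, and the metrics then fluctuated.

Because Flush 600 flushes every 600 seconds (10 minutes), the values drop back to 0 every 10 minutes and then climb again as requests come in.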
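The reasoning about the gauges reading 0 can be illustrated with a toy model. The sketch assumes a 60-second scrape interval and that a record stays buffered until the next flush tick. scrape_series and the arrival times are hypothetical, and real Fluent Bit gauges track chunks and bytes rather than records (and chunks can be flushed early), so this only shows the sampling effect.

```python
import math

# Toy model (assumptions): a record is held until the next multiple of
# flush_interval after it arrives, and a scraper samples the number of
# buffered records every scrape_interval seconds.


def flush_time(arrival: float, flush_interval: float) -> float:
    # Next flush tick strictly after the arrival time
    return (math.floor(arrival / flush_interval) + 1) * flush_interval


def buffered_at(t: float, arrivals: list[float], flush_interval: float) -> int:
    # Records that have arrived but have not been flushed yet at time t
    return sum(1 for a in arrivals if a <= t < flush_time(a, flush_interval))


def scrape_series(arrivals: list[float], flush_interval: float,
                  scrape_interval: float = 60.0, horizon: float = 1200.0) -> list[int]:
    scrapes = int(horizon // scrape_interval)
    return [buffered_at(k * scrape_interval, arrivals, flush_interval)
            for k in range(1, scrapes + 1)]


# Hypothetical arrival times (seconds) of log records triggered by ALB requests
arrivals = [100.2, 100.5, 130.7, 250.1, 250.3, 700.9]

print("Flush 1:  ", scrape_series(arrivals, 1.0))
# Flush 1:   [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
print("Flush 600:", scrape_series(arrivals, 600.0))
# Flush 600: [0, 2, 3, 3, 5, 5, 5, 5, 5, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 0]
```

With Flush 1 every record is gone within a second, so a once-a-minute scrape almost never sees a non-zero value. With Flush 600 the gauge accumulates between flushes and falls back to 0 at each 10-minute flush tick.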

The ADOT Collector is handy when you need flexible processing

I scraped the Prometheus-format metrics of AWS FireLens (AWS for Fluent Bit) with the AWS Distro for OpenTelemetry (ADOT) Collector and PUT them to CloudWatch metrics.

When you need flexible processing, such as adding a TaskId dimension to each metric, the ADOT Collector is convenient.

I hope this article helps someone.

That's all from のんピ (@non____97) of the Consulting Department, Cloud Business Division!