Slurm 25.05 with automatic job requeuing is now available on AWS PCS

Introduction

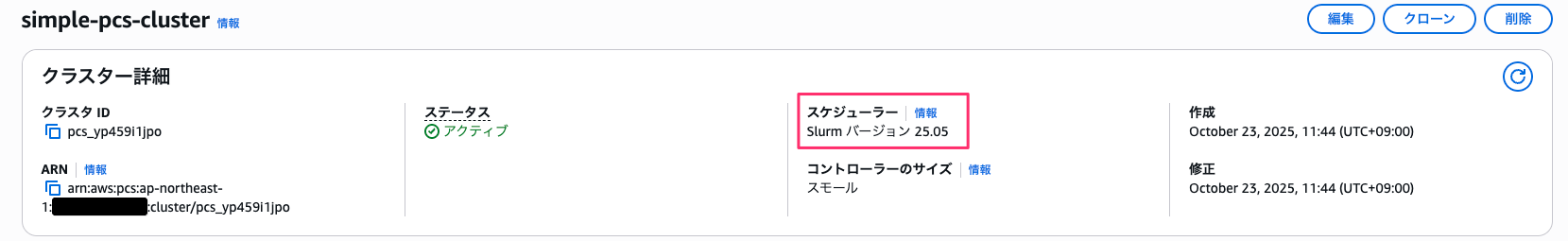

AWS Parallel Computing Service (PCS) now supports Slurm 25.05. I built an AWS PCS cluster to check the new features and changes in Slurm 25.05, and this post shares what I found.

Key new features in Slurm 25.05

Slurm 25.05 brings three main new features to AWS PCS:

- Multi-cluster sackd configuration support

- requeue_on_resume_failure enabled by default

- LogTimeFormat changes

Each one is covered in detail below.

Multi-cluster sackd configuration support

Overview

One login node can now connect to several AWS PCS clusters. You need fewer login nodes, which reduces both management overhead and operating costs.

What changed

Slurm 25.05 lets several sackd (Slurm Auth and Cred Kiosk Daemon) daemons run side by side on the same login node, so that one login node can access multiple clusters.

To run several sackd daemons together, use systemd's RuntimeDirectory option. Give each cluster its own directory, such as slurm-<clustername>, and each cluster gets a dedicated socket path, for example /run/slurm-cluster1/sack.socket and /run/slurm-cluster2/sack.socket. This is what allows connections to multiple clusters.

Reference: https://slurm.schedmd.com/sackd.html
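Here is a rough sketch of the RuntimeDirectory approach: a small shell helper that writes one systemd unit per cluster. It is based on my own assumptions and is not the procedure from the AWS or SchedMD documentation. The following are all hypothetical: the function name write_sackd_unit, the unit name pattern sackd-<cluster>.service, the sackd path /opt/slurm/sbin/sackd, and the conf-server addresses. Check man sackd on your login node before you rely on the -D and --conf-server options.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write one sackd unit per cluster so that several
# sackd instances can coexist on a single login node.
# Assumptions: the sackd path and the sackd-<cluster>.service naming.
SACKD_BIN="${SACKD_BIN:-/opt/slurm/sbin/sackd}"   # assumed install path

write_sackd_unit() {
  local cluster="$1" conf_server="$2" unit_dir="${3:-/etc/systemd/system}"
  cat > "${unit_dir}/sackd-${cluster}.service" <<EOF
[Unit]
Description=Slurm auth and cred kiosk daemon for ${cluster}
After=network-online.target
Wants=network-online.target

[Service]
Type=simple
# systemd creates /run/slurm-${cluster} and exports RUNTIME_DIRECTORY,
# which sackd 25.05 uses for its socket and cached config
RuntimeDirectory=slurm-${cluster}
ExecStart=${SACKD_BIN} -D --conf-server ${conf_server}
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}

# Usage (placeholders, one call per cluster):
#   write_sackd_unit cluster1 <cluster1-slurmctld-ip>:6817
#   write_sackd_unit cluster2 <cluster2-slurmctld-ip>:6817
```

After writing the units, run systemctl daemon-reload and start each one, for example with systemctl enable --now sackd-cluster1. Each sackd then owns its own directory under /run/slurm-<cluster>/.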

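Concretely, the release includes these changes:

- Clients now look for /run/slurm-<cluster>/sack.socket first

- The SLURM_CONF environment variable can specify the configuration source and the authentication socket separately

- sackd recognizes the RUNTIME_DIRECTORY environment variable, so each cluster can have its own independent runtime environment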

Reference: https://github.com/SchedMD/slurm/blob/slurm-25.05/CHANGELOG/slurm-25.05.md
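On the client side, switching between clusters should then come down to SLURM_CONF. The helper below is a hypothetical sketch. It assumes sackd caches the configless files under <RuntimeDirectory>/conf, giving /run/slurm-<cluster>/conf/slurm.conf. I have not verified that layout on AWS PCS, and use_pcs_cluster is a name I made up.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point Slurm client commands at one cluster.
# Assumption: sackd for <cluster> runs with RuntimeDirectory=slurm-<cluster>
# and caches its configless files under that directory's conf/ subdir.
use_pcs_cluster() {
  local cluster="$1" run_root="${2:-/run}"
  local dir="${run_root}/slurm-${cluster}"
  export SLURM_CONF="${dir}/conf/slurm.conf"
  # Warn (and return non-zero) if that cluster's sackd socket is missing
  if [[ ! -S "${dir}/sack.socket" ]]; then
    echo "warning: ${dir}/sack.socket not found; is sackd-${cluster} running?" >&2
    return 1
  fi
}

# Usage:
#   use_pcs_cluster cluster1 && sinfo
#   use_pcs_cluster cluster2 && squeue
```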

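Configuration

I wanted to try this hands-on, but it turned out to be fairly involved, so I will verify it when I have more time. For the setup procedure, see the following documentation.

requeue_on_resume_failure enabled by default

Overview

Slurm's requeue_on_resume_failure SchedulerParameter is now enabled by default. When an instance fails to launch, the affected job is requeued automatically.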
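To check whether a specific job was actually requeued, one option is the Restarts counter in scontrol show job output. Below is a sketch of a hypothetical helper; job_restarts is my own name. Restarts counts requeues for any reason, not only resume failures, so a non-zero value is a hint rather than proof.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the Restarts=N value from
# `scontrol show job <id>` output read on stdin.
job_restarts() {
  grep -o 'Restarts=[0-9]*' | head -n 1 | cut -d= -f2
}

# Usage (job ID is a placeholder):
#   scontrol show job 123 | job_restarts
```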

Hands-on check

I ran scontrol show config on an AWS PCS cluster (Slurm 25.05) and confirmed that requeue_on_resume_failure is set in SchedulerParameters.

```
SchedulerParameters = requeue_on_resume_failure
ResumeTimeout = 1800 sec
SLURM_VERSION = 25.05.3
```
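You can script the same check, for example as a post-deployment sanity test. The sketch below parses scontrol show config output from stdin. The function name has_sched_param is hypothetical, and the parsing assumes the "Key = value" layout shown above, with comma-separated options.

```shell
#!/usr/bin/env bash
# Hypothetical helper: exit 0 if the given option appears in
# SchedulerParameters, reading `scontrol show config` output on stdin.
# Matches whole comma-separated tokens, or "option=value" forms.
has_sched_param() {
  awk -F' = ' -v want="$1" '
    $1 ~ /^SchedulerParameters[ \t]*$/ {
      sub(/[ \t\r]+$/, "", $2)          # drop trailing whitespace
      n = split($2, opts, ",")
      for (i = 1; i <= n; i++)
        if (opts[i] == want || index(opts[i], want "=") == 1) found = 1
    }
    END { exit (found ? 0 : 1) }'
}

# Usage on a login node (scontrol must be on PATH):
#   scontrol show config | has_sched_param requeue_on_resume_failure && echo enabled
```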

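ResumeTimeout sets the maximum time Slurm waits, after ResumeProgram starts a node, for that node to respond to Slurm. AWS PCS sets it to 1800 seconds (30 minutes). If a node does not come up within that time, it is automatically marked DOWN and the job is requeued.

Reference: Slurm Workload Manager - slurm.conf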
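You can read the timeout from scontrol show config in the same way and convert it to minutes. config_value and resume_timeout_minutes are hypothetical helper names. The parsing assumes the "Key = value" layout shown above, with the unit as a trailing word (for example "1800 sec").

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value for KEY from `scontrol show config`
# output on stdin (keys may be padded with spaces before " = ").
config_value() {
  awk -F' = ' -v key="$1" '{ k = $1; sub(/[ \t]+$/, "", k) } k == key { print $2; exit }'
}

# ResumeTimeout is printed as "<seconds> sec"; convert it to minutes.
resume_timeout_minutes() {
  config_value ResumeTimeout | awk '{ print $1 / 60 }'
}

# Usage:
#   scontrol show config | resume_timeout_minutes
```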

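About the timeout setting

At first glance, 1800 seconds (30 minutes) looks generous. In my experience, compute nodes usually start in about 10 minutes. I suspect the extra headroom was chosen for these reasons:

- The time needed to initialize compute nodes

- Allowance for some launch delay when EC2 instance capacity is short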

LogTimeFormat changes

What changed

The LogTimeFormat=format_stderr option has been removed. Log files and standard error (stderr) now always use the same timestamp format.

Available formats

Slurm 25.05 offers the following formats:

- iso8601
- iso8601_ms (default)
- rfc5424
- rfc5424_ms
- rfc3339
- clock
- short
- thread_id

Reference: Slurm Workload Manager - slurm.conf

Hands-on check

The AWS PCS cluster (Slurm 25.05) uses the rfc5424_ms format.

```
LogTimeFormat = rfc5424_ms
```
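To see which format a given log actually uses, a rough classifier for the leading timestamp can help. The two regular expressions encode my assumptions about each format's shape, not anything taken from Slurm source. I assume iso8601_ms looks like 2025-10-23T06:22:02.123, and rfc5424_ms is the same followed by a +hh:mm or -hh:mm offset. Any other format falls through to "unknown". log_time_format is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: guess the LogTimeFormat of a log line's timestamp.
# Only rfc5424_ms and iso8601_ms are distinguished; the shapes are assumed.
log_time_format() {
  local rfc='^\[?[0-9]{4}-[0-9]{2}-[0-9]{2}T[0-9]{2}:[0-9]{2}:[0-9]{2}\.[0-9]{3}[+-][0-9]{2}:[0-9]{2}'
  local iso='^\[?[0-9]{4}-[0-9]{2}-[0-9]{2}T[0-9]{2}:[0-9]{2}:[0-9]{2}\.[0-9]{3}'
  if [[ $1 =~ $rfc ]]; then      # check the longer (offset) form first
    echo rfc5424_ms
  elif [[ $1 =~ $iso ]]; then
    echo iso8601_ms
  else
    echo unknown
  fi
}

# Usage on a compute node (SlurmdLogFile is /var/log/slurmd.log on PCS):
#   log_time_format "$(head -n 1 /var/log/slurmd.log)"
```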

Test environment

For this article, I built an AWS PCS cluster with the following configuration.

| Item | Value |
|---|---|
| Region | Tokyo (ap-northeast-1) |
| Slurm version | 25.05.3 |
| Login node | t3.micro × 1 |
| Compute nodes | m7i.large × up to 4 |
| Shared storage | Amazon EFS |

Here is the CloudFormation template I used to build it.

CloudFormation template

```yaml
AWSTemplateFormatVersion: '2010-09-09'
Description: 'Simple AWS PCS cluster for verification - 1 login node (t3.micro) and up to 4 compute nodes (m7i.large) with EFS storage'

Parameters:
  ClusterName:
    Type: String
    Default: 'simple-pcs-cluster'
    Description: 'Name of the PCS cluster'
  AMIID:
    Type: AWS::EC2::Image::Id
    Default: 'ami-056a2dabd78ff3886'
  SlurmVersion:
    Type: String
    Default: '25.05'
    Description: 'Slurm version for the PCS cluster'
    AllowedValues:
      - '25.05'
      - '24.11'

Resources:
  # VPC
  VPC:
    Type: AWS::EC2::VPC
    Properties:
      CidrBlock: '10.0.0.0/16'
      EnableDnsHostnames: true
      EnableDnsSupport: true
      Tags:
        - Key: Name
          Value: !Sub '${ClusterName}-vpc'

  # Internet Gateway
  InternetGateway:
    Type: AWS::EC2::InternetGateway
    Properties:
      Tags:
        - Key: Name
          Value: !Sub '${ClusterName}-igw'

  InternetGatewayAttachment:
    Type: AWS::EC2::VPCGatewayAttachment
    Properties:
      InternetGatewayId: !Ref InternetGateway
      VpcId: !Ref VPC

  # Public Subnet
  PublicSubnet1:
    Type: AWS::EC2::Subnet
    Properties:
      VpcId: !Ref VPC
      AvailabilityZone: !Select [0, !GetAZs '']
      CidrBlock: '10.0.1.0/24'
      MapPublicIpOnLaunch: true
      Tags:
        - Key: Name
          Value: !Sub '${ClusterName}-public-subnet-1'

  PublicSubnet2:
    Type: AWS::EC2::Subnet
    Properties:
      VpcId: !Ref VPC
      AvailabilityZone: !Select [1, !GetAZs '']
      CidrBlock: '10.0.2.0/24'
      MapPublicIpOnLaunch: true
      Tags:
        - Key: Name
          Value: !Sub '${ClusterName}-public-subnet-2'

  PublicSubnet3:
    Type: AWS::EC2::Subnet
    Properties:
      VpcId: !Ref VPC
      AvailabilityZone: !Select [2, !GetAZs '']
      CidrBlock: '10.0.3.0/24'
      MapPublicIpOnLaunch: true
      Tags:
        - Key: Name
          Value: !Sub '${ClusterName}-public-subnet-3'

  # Private Subnet
  PrivateSubnet1:
    Type: AWS::EC2::Subnet
    Properties:
      VpcId: !Ref VPC
      AvailabilityZone: !Select [0, !GetAZs '']
      CidrBlock: '10.0.11.0/24'
      Tags:
        - Key: Name
          Value: !Sub '${ClusterName}-private-subnet-1'

  PrivateSubnet2:
    Type: AWS::EC2::Subnet
    Properties:
      VpcId: !Ref VPC
      AvailabilityZone: !Select [1, !GetAZs '']
      CidrBlock: '10.0.12.0/24'
      Tags:
        - Key: Name
          Value: !Sub '${ClusterName}-private-subnet-2'

  PrivateSubnet3:
    Type: AWS::EC2::Subnet
    Properties:
      VpcId: !Ref VPC
      AvailabilityZone: !Select [2, !GetAZs '']
      CidrBlock: '10.0.13.0/24'
      Tags:
        - Key: Name
          Value: !Sub '${ClusterName}-private-subnet-3'

  # Isolated Subnet
  IsolatedSubnet1:
    Type: AWS::EC2::Subnet
    Properties:
      VpcId: !Ref VPC
      AvailabilityZone: !Select [0, !GetAZs '']
      CidrBlock: '10.0.21.0/24'
      Tags:
        - Key: Name
          Value: !Sub '${ClusterName}-isolated-subnet-1'

  IsolatedSubnet2:
    Type: AWS::EC2::Subnet
    Properties:
      VpcId: !Ref VPC
      AvailabilityZone: !Select [1, !GetAZs '']
      CidrBlock: '10.0.22.0/24'
      Tags:
        - Key: Name
          Value: !Sub '${ClusterName}-isolated-subnet-2'

  IsolatedSubnet3:
    Type: AWS::EC2::Subnet
    Properties:
      VpcId: !Ref VPC
      AvailabilityZone: !Select [2, !GetAZs '']
      CidrBlock: '10.0.23.0/24'
      Tags:
        - Key: Name
          Value: !Sub '${ClusterName}-isolated-subnet-3'

  # NAT Gateway
  NatGateway1EIP:
    Type: AWS::EC2::EIP
    DependsOn: InternetGatewayAttachment
    Properties:
      Domain: vpc

  NatGateway1:
    Type: AWS::EC2::NatGateway
    Properties:
      AllocationId: !GetAtt NatGateway1EIP.AllocationId
      SubnetId: !Ref PublicSubnet1

  # Route Tables
  PublicRouteTable:
    Type: AWS::EC2::RouteTable
    Properties:
      VpcId: !Ref VPC
      Tags:
        - Key: Name
          Value: !Sub '${ClusterName}-public-routes'

  DefaultPublicRoute:
    Type: AWS::EC2::Route
    DependsOn: InternetGatewayAttachment
    Properties:
      RouteTableId: !Ref PublicRouteTable
      DestinationCidrBlock: '0.0.0.0/0'
      GatewayId: !Ref InternetGateway

  PublicSubnet1RouteTableAssociation:
    Type: AWS::EC2::SubnetRouteTableAssociation
    Properties:
      RouteTableId: !Ref PublicRouteTable
      SubnetId: !Ref PublicSubnet1

  PublicSubnet2RouteTableAssociation:
    Type: AWS::EC2::SubnetRouteTableAssociation
    Properties:
      RouteTableId: !Ref PublicRouteTable
      SubnetId: !Ref PublicSubnet2

  PublicSubnet3RouteTableAssociation:
    Type: AWS::EC2::SubnetRouteTableAssociation
    Properties:
      RouteTableId: !Ref PublicRouteTable
      SubnetId: !Ref PublicSubnet3

  PrivateRouteTable1:
    Type: AWS::EC2::RouteTable
    Properties:
      VpcId: !Ref VPC
      Tags:
        - Key: Name
          Value: !Sub '${ClusterName}-private-routes-1'

  DefaultPrivateRoute1:
    Type: AWS::EC2::Route
    Properties:
      RouteTableId: !Ref PrivateRouteTable1
      DestinationCidrBlock: '0.0.0.0/0'
      NatGatewayId: !Ref NatGateway1

  PrivateSubnet1RouteTableAssociation:
    Type: AWS::EC2::SubnetRouteTableAssociation
    Properties:
      RouteTableId: !Ref PrivateRouteTable1
      SubnetId: !Ref PrivateSubnet1

  PrivateSubnet2RouteTableAssociation:
    Type: AWS::EC2::SubnetRouteTableAssociation
    Properties:
      RouteTableId: !Ref PrivateRouteTable1
      SubnetId: !Ref PrivateSubnet2

  PrivateSubnet3RouteTableAssociation:
    Type: AWS::EC2::SubnetRouteTableAssociation
    Properties:
      RouteTableId: !Ref PrivateRouteTable1
      SubnetId: !Ref PrivateSubnet3

  # Security Groups
  LoginSecurityGroup:
    Type: AWS::EC2::SecurityGroup
    Properties:
      GroupName: !Sub '${ClusterName}-login-sg'
      GroupDescription: 'Security group for PCS login nodes'
      VpcId: !Ref VPC
      SecurityGroupEgress:
        - IpProtocol: -1
          CidrIp: 0.0.0.0/0
          Description: 'Allow all outbound traffic'
      Tags:
        - Key: Name
          Value: !Sub '${ClusterName}-login-sg'

  ComputeSecurityGroup:
    Type: AWS::EC2::SecurityGroup
    Properties:
      GroupName: !Sub '${ClusterName}-compute-sg'
      GroupDescription: 'Security group for PCS compute nodes'
      VpcId: !Ref VPC
      SecurityGroupIngress:
        - IpProtocol: tcp
          FromPort: 22
          ToPort: 22
          CidrIp: 10.0.0.0/16
          Description: 'SSH access from VPC for PCS requirement'
      SecurityGroupEgress:
        - IpProtocol: -1
          CidrIp: 0.0.0.0/0
          Description: 'Allow all outbound traffic'
      Tags:
        - Key: Name
          Value: !Sub '${ClusterName}-compute-sg'

  # Allow all traffic from login to compute
  ComputeSecurityGroupIngressFromLogin:
    Type: AWS::EC2::SecurityGroupIngress
    Properties:
      GroupId: !Ref ComputeSecurityGroup
      IpProtocol: -1
      SourceSecurityGroupId: !Ref LoginSecurityGroup
      Description: 'Allow all traffic from login nodes'

  # Allow all traffic from compute to login
  LoginSecurityGroupIngressFromCompute:
    Type: AWS::EC2::SecurityGroupIngress
    Properties:
      GroupId: !Ref LoginSecurityGroup
      IpProtocol: -1
      SourceSecurityGroupId: !Ref ComputeSecurityGroup
      Description: 'Allow all traffic from compute nodes'

  # Self-referencing rules for login nodes
  LoginSecurityGroupIngressSelfRef:
    Type: AWS::EC2::SecurityGroupIngress
    Properties:
      GroupId: !Ref LoginSecurityGroup
      IpProtocol: -1
      SourceSecurityGroupId: !Ref LoginSecurityGroup
      Description: 'Allow all traffic within login nodes'

  # Self-referencing rules for compute nodes
  ComputeSecurityGroupIngressSelfRef:
    Type: AWS::EC2::SecurityGroupIngress
    Properties:
      GroupId: !Ref ComputeSecurityGroup
      IpProtocol: -1
      SourceSecurityGroupId: !Ref ComputeSecurityGroup
      Description: 'Allow all traffic within compute nodes'

  EFSSecurityGroup:
    Type: AWS::EC2::SecurityGroup
    Properties:
      GroupName: !Sub '${ClusterName}-efs-sg'
      GroupDescription: 'Security group for EFS'
      VpcId: !Ref VPC
      SecurityGroupIngress:
        - IpProtocol: tcp
          FromPort: 2049
          ToPort: 2049
          SourceSecurityGroupId: !Ref LoginSecurityGroup
        - IpProtocol: tcp
          FromPort: 2049
          ToPort: 2049
          SourceSecurityGroupId: !Ref ComputeSecurityGroup
      Tags:
        - Key: Name
          Value: !Sub '${ClusterName}-efs-sg'

  # EFS
  EFSFileSystem:
    Type: AWS::EFS::FileSystem
    Properties:
      PerformanceMode: generalPurpose
      ThroughputMode: bursting
      Encrypted: true
      FileSystemTags:
        - Key: Name
          Value: !Sub '${ClusterName}-efs'

  EFSMountTarget1:
    Type: AWS::EFS::MountTarget
    Properties:
      FileSystemId: !Ref EFSFileSystem
      SubnetId: !Ref PrivateSubnet1
      SecurityGroups:
        - !Ref EFSSecurityGroup

  EFSMountTarget2:
    Type: AWS::EFS::MountTarget
    Properties:
      FileSystemId: !Ref EFSFileSystem
      SubnetId: !Ref PrivateSubnet2
      SecurityGroups:
        - !Ref EFSSecurityGroup

  EFSMountTarget3:
    Type: AWS::EFS::MountTarget
    Properties:
      FileSystemId: !Ref EFSFileSystem
      SubnetId: !Ref PrivateSubnet3
      SecurityGroups:
        - !Ref EFSSecurityGroup

  # IAM Role for PCS Instance
  PCSInstanceRole:
    Type: AWS::IAM::Role
    Properties:
      RoleName: !Sub 'AWSPCS-${AWS::StackName}-${AWS::Region}'
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Principal:
              Service: ec2.amazonaws.com
            Action: sts:AssumeRole
      ManagedPolicyArns:
        - arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore
      Policies:
        - PolicyName: PCSComputeNodePolicy
          PolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Effect: Allow
                Action:
                  - pcs:RegisterComputeNodeGroupInstance
                Resource: '*'

  PCSInstanceProfile:
    Type: AWS::IAM::InstanceProfile
    Properties:
      Roles:
        - !Ref PCSInstanceRole

  # Launch Template for Login Nodes
  LoginLaunchTemplate:
    Type: AWS::EC2::LaunchTemplate
    Properties:
      LaunchTemplateName: !Sub '${ClusterName}-login-launch-template'
      LaunchTemplateData:
        ImageId: !Ref AMIID
        SecurityGroupIds:
          - !Ref LoginSecurityGroup
        IamInstanceProfile:
          Arn: !GetAtt PCSInstanceProfile.Arn
        UserData:
          Fn::Base64: !Sub |
            MIME-Version: 1.0
            Content-Type: multipart/mixed; boundary="==BOUNDARY=="

            --==BOUNDARY==
            Content-Type: text/x-shellscript; charset="us-ascii"

            #!/bin/bash
            yum update -y
            yum install -y amazon-efs-utils
            mkdir -p /shared
            echo "${EFSFileSystem}.efs.${AWS::Region}.amazonaws.com:/ /shared efs defaults,_netdev" >> /etc/fstab
            mount -a
            chown ec2-user:ec2-user /shared
            chmod 755 /shared
            # Add Slurm commands to PATH for all users
            echo 'export PATH=/opt/slurm/bin:$PATH' >> /etc/profile.d/slurm.sh
            chmod +x /etc/profile.d/slurm.sh
            # Add to ec2-user's bashrc for immediate availability
            echo 'export PATH=/opt/slurm/bin:$PATH' >> /home/ec2-user/.bashrc
            chown ec2-user:ec2-user /home/ec2-user/.bashrc

            --==BOUNDARY==--
        MetadataOptions:
          HttpTokens: required
          HttpPutResponseHopLimit: 2
          HttpEndpoint: enabled

  # Launch Template for Compute Nodes
  ComputeLaunchTemplate:
    Type: AWS::EC2::LaunchTemplate
    Properties:
      LaunchTemplateName: !Sub '${ClusterName}-compute-launch-template'
      LaunchTemplateData:
        ImageId: !Ref AMIID
        SecurityGroupIds:
          - !Ref ComputeSecurityGroup
        IamInstanceProfile:
          Arn: !GetAtt PCSInstanceProfile.Arn
        UserData:
          Fn::Base64: !Sub |
            MIME-Version: 1.0
            Content-Type: multipart/mixed; boundary="==BOUNDARY=="

            --==BOUNDARY==
            Content-Type: text/x-shellscript; charset="us-ascii"

            #!/bin/bash
            yum update -y
            yum install -y amazon-efs-utils
            mkdir -p /shared
            echo "${EFSFileSystem}.efs.${AWS::Region}.amazonaws.com:/ /shared efs defaults,_netdev" >> /etc/fstab
            mount -a
            chown ec2-user:ec2-user /shared
            chmod 755 /shared
            # Add Slurm commands to PATH for all users
            echo 'export PATH=/opt/slurm/bin:$PATH' >> /etc/profile.d/slurm.sh
            chmod +x /etc/profile.d/slurm.sh
            # Add to ec2-user's bashrc for immediate availability
            echo 'export PATH=/opt/slurm/bin:$PATH' >> /home/ec2-user/.bashrc
            chown ec2-user:ec2-user /home/ec2-user/.bashrc

            --==BOUNDARY==--
        MetadataOptions:
          HttpTokens: required
          HttpPutResponseHopLimit: 2
          HttpEndpoint: enabled

  # PCS Cluster
  PCSCluster:
    Type: AWS::PCS::Cluster
    Properties:
      Name: !Ref ClusterName
      Size: SMALL
      Scheduler:
        Type: SLURM
        Version: !Ref SlurmVersion
      Networking:
        SubnetIds:
          - !Ref PrivateSubnet1
        SecurityGroupIds:
          - !Ref LoginSecurityGroup
          - !Ref ComputeSecurityGroup

  # Login Node Group
  LoginNodeGroup:
    Type: AWS::PCS::ComputeNodeGroup
    Properties:
      ClusterId: !GetAtt PCSCluster.Id
      Name: login
      ScalingConfiguration:
        MinInstanceCount: 1
        MaxInstanceCount: 1
      IamInstanceProfileArn: !GetAtt PCSInstanceProfile.Arn
      CustomLaunchTemplate:
        TemplateId: !Ref LoginLaunchTemplate
        Version: 1
      SubnetIds:
        - !Ref PublicSubnet1
      AmiId: !Ref AMIID
      InstanceConfigs:
        - InstanceType: 't3.micro'

  # Compute Node Group
  ComputeNodeGroup:
    Type: AWS::PCS::ComputeNodeGroup
    Properties:
      ClusterId: !GetAtt PCSCluster.Id
      Name: compute
      ScalingConfiguration:
        MinInstanceCount: 0
        MaxInstanceCount: 4
      IamInstanceProfileArn: !GetAtt PCSInstanceProfile.Arn
      CustomLaunchTemplate:
        TemplateId: !Ref ComputeLaunchTemplate
        Version: 1
      SubnetIds:
        - !Ref PrivateSubnet1
      AmiId: !Ref AMIID
      InstanceConfigs:
        - InstanceType: 'm7i.large'

  # Queue
  ComputeQueue:
    Type: AWS::PCS::Queue
    Properties:
      ClusterId: !GetAtt PCSCluster.Id
      Name: compute-queue
      ComputeNodeGroupConfigurations:
        - ComputeNodeGroupId: !GetAtt ComputeNodeGroup.Id

Outputs:
  ClusterId:
    Description: 'The ID of the PCS cluster'
    Value: !GetAtt PCSCluster.Id
    Export:
      Name: !Sub '${AWS::StackName}-ClusterId'

  VPCId:
    Description: 'The ID of the VPC'
    Value: !Ref VPC
    Export:
      Name: !Sub '${AWS::StackName}-VPCId'

  EFSFileSystemId:
    Description: 'The ID of the EFS file system'
    Value: !Ref EFSFileSystem
    Export:
      Name: !Sub '${AWS::StackName}-EFSFileSystemId'

  PCSConsoleUrl:
    Description: 'URL to access the cluster in the PCS console'
    Value: !Sub 'https://${AWS::Region}.console.aws.amazon.com/pcs/home?region=${AWS::Region}#/clusters/${PCSCluster.Id}'
    Export:
      Name: !Sub '${AWS::StackName}-PCSConsoleUrl'

  EC2ConsoleUrl:
    Description: 'URL to access login node instances via Session Manager'
    Value: !Sub 'https://${AWS::Region}.console.aws.amazon.com/ec2/home?region=${AWS::Region}#Instances:instanceState=running;tag:aws:pcs:compute-node-group-id=${LoginNodeGroup.Id}'
    Export:
      Name: !Sub '${AWS::StackName}-EC2ConsoleUrl'

  PCSInstanceRoleArn:
    Description: 'ARN of the PCS Instance Role with vended log delivery permissions'
    Value: !GetAtt PCSInstanceRole.Arn
    Export:
      Name: !Sub '${AWS::StackName}-PCSInstanceRoleArn'
```
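For reference, here is a sketch of one way to deploy the template above with the AWS CLI. The file name pcs-cluster.yaml and the function name deploy_pcs_stack are hypothetical. CAPABILITY_NAMED_IAM is required because the template gives the IAM role an explicit RoleName. Setting DRY_RUN=1 prints the command instead of running it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: deploy the template with `aws cloudformation deploy`.
# Assumptions: template file name and stack name (defaults below).
deploy_pcs_stack() {
  local template="${1:-pcs-cluster.yaml}" stack="${2:-simple-pcs-cluster}"
  local -a cmd=(aws cloudformation deploy
    --region ap-northeast-1
    --template-file "$template"
    --stack-name "$stack"
    --capabilities CAPABILITY_NAMED_IAM      # template sets RoleName
    --parameter-overrides SlurmVersion=25.05)
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%s\n' "${cmd[*]}"                # show the command only
  else
    "${cmd[@]}"
  fi
}

# Usage:
#   DRY_RUN=1 deploy_pcs_stack     # inspect the command first
#   deploy_pcs_stack               # then deploy for real
```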

Summary

AWS Parallel Computing Service now supports Slurm 25.05.

This article covered three main new features:

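- Multi-cluster sackd configuration support
  - A single login node can access multiple clusters
- requeue_on_resume_failure enabled by default
  - Automatic retries when an instance fails to launch make the cluster more reliable
- LogTimeFormat changes
  - More consistent log output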

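Making requeue_on_resume_failure the default is especially useful. In dynamically scaling environments like AWS, it helps deal with capacity shortages.

Closing thoughts

This turned out to be less about an AWS PCS update and more about digging into what changed in Slurm 25.05. The research took some effort, but I learned a lot. AWS ParallelCluster will likely get similar changes once it supports Slurm 25.x.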

Appendix

Below is the full Slurm configuration from the AWS PCS cluster (Slurm 25.05.3).

Full output of scontrol show config

```
Configuration data as of 2025-10-23T06:22:02
AccountingStorageBackupHost = (null)
AccountingStorageEnforce = none
AccountingStorageHost = localhost
AccountingStorageExternalHost = (null)
AccountingStorageParameters = (null)
AccountingStoragePort = 0
AccountingStorageTRES = cpu,mem,energy,node,billing,fs/disk,vmem,pages
AccountingStorageType = (null)
AccountingStorageUser = root
AccountingStoreFlags = (null)
AcctGatherEnergyType = (null)
AcctGatherFilesystemType = (null)
AcctGatherInterconnectType = (null)
AcctGatherNodeFreq = 0 sec
AcctGatherProfileType = (null)
AllowSpecResourcesUsage = no
AuthAltTypes = (null)
AuthAltParameters = (null)
AuthInfo = use_client_ids,cred_expire=70
AuthType = auth/slurm
BatchStartTimeout = 10 sec
BcastExclude = /lib,/usr/lib,/lib64,/usr/lib64
BcastParameters = (null)
BOOT_TIME = 2025-10-23T02:53:27
BurstBufferType = (null)
CertgenParameters = (null)
CertgenType = (null)
CertmgrParameters = (null)
CertmgrType = (null)
CliFilterPlugins = (null)
ClusterName = simple-pcs-cluster
CommunicationParameters = NoCtldInAddrAny
CompleteWait = 0 sec
CpuFreqDef = Unknown
CpuFreqGovernors = OnDemand,Performance,UserSpace
CredType = auth/slurm
DataParserParameters = (null)
DebugFlags = AuditRPCs,GLOB_SILENCE,Power
DefMemPerNode = UNLIMITED
DependencyParameters = (null)
DisableRootJobs = no
EioTimeout = 60
EnforcePartLimits = ALL
EpilogMsgTime = 2000 usec
FairShareDampeningFactor = 1
FederationParameters = (null)
FirstJobId = 1
GresTypes = gpu
GpuFreqDef = (null)
GroupUpdateForce = 1
GroupUpdateTime = 600 sec
HASH_VAL = Match
HashPlugin = hash/sha3
HealthCheckInterval = 0 sec
HealthCheckNodeState = ANY
HealthCheckProgram = (null)
InactiveLimit = 0 sec
InteractiveStepOptions = --interactive --preserve-env --pty $SHELL
JobAcctGatherFrequency = 30
JobAcctGatherType = (null)
JobAcctGatherParams = (null)
JobCompHost = localhost
JobCompLoc = /var/log/container/controller/daemon/slurm-25.05-jobcomp.log
JobCompParams = (null)
JobCompPort = 0
JobCompType = jobcomp/pcs
JobCompUser = root
JobContainerType = (null)
JobDefaults = (null)
JobFileAppend = 0
JobRequeue = 1
JobSubmitPlugins = (null)
KillOnBadExit = 0
KillWait = 30 sec
LaunchParameters = (null)
Licenses = (null)
LogTimeFormat = rfc5424_ms
MailDomain = (null)
MailProg = /bin/mail
MaxArraySize = 257
MaxBatchRequeue = 5
MaxDBDMsgs = 0
MaxJobCount = 257
MaxJobId = 67043328
MaxMemPerNode = UNLIMITED
MaxNodeCount = 5
MaxStepCount = 40000
MaxTasksPerNode = 512
MCSPlugin = (null)
MCSParameters = (null)
MessageTimeout = 210 sec
MinJobAge = 300 sec
MpiDefault = pmix
MpiParams = (null)
NEXT_JOB_ID = 1
NodeFeaturesPlugins = (null)
OverTimeLimit = 0 min
PluginDir = /opt/slurm/lib/slurm
PlugStackConfig = (null)
PreemptMode = OFF
PreemptParameters = (null)
PreemptType = (null)
PreemptExemptTime = 00:00:00
PrEpParameters = (null)
PrEpPlugins = prep/script
PriorityParameters = (null)
PrioritySiteFactorParameters = (null)
PrioritySiteFactorPlugin = (null)
PriorityDecayHalfLife = 7-00:00:00
PriorityCalcPeriod = 00:05:00
PriorityFavorSmall = no
PriorityFlags =
PriorityMaxAge = 7-00:00:00
PriorityType = priority/multifactor
PriorityUsageResetPeriod = NONE
PriorityWeightAge = 0
PriorityWeightAssoc = 0
PriorityWeightFairShare = 0
PriorityWeightJobSize = 0
PriorityWeightPartition = 0
PriorityWeightQOS = 0
PriorityWeightTRES = (null)
PrivateData = none
ProctrackType = proctrack/cgroup
PrologEpilogTimeout = 65534
PrologFlags = Alloc,Contain
PropagatePrioProcess = 0
PropagateResourceLimits = ALL
PropagateResourceLimitsExcept = (null)
RebootProgram = /sbin/reboot
ReconfigFlags = KeepPartState
RequeueExit = (null)
RequeueExitHold = (null)
ResumeFailProgram = (null)
ResumeProgram = /etc/slurm/scripts/resume_program.sh
ResumeRate = 0 nodes/min
ResumeTimeout = 1800 sec
ResvEpilog = (null)
ResvOverRun = 0 min
ResvProlog = (null)
ReturnToService = 1
SchedulerParameters = requeue_on_resume_failure
SchedulerTimeSlice = 30 sec
SchedulerType = sched/backfill
ScronParameters = (null)
SelectType = select/cons_tres
SelectTypeParameters = CR_CPU
SlurmUser = slurm(401)
SlurmctldAddr = (null)
SlurmctldDebug = verbose
SlurmctldHost[0] = slurmctld-primary(10.0.11.198)
SlurmctldLogFile = /dev/null
SlurmctldPort = 6817
SlurmctldSyslogDebug = (null)
SlurmctldPrimaryOffProg = (null)
SlurmctldPrimaryOnProg = (null)
SlurmctldTimeout = 300 sec
SlurmctldParameters = idle_on_node_suspend,power_save_min_interval=0,node_reg_mem_percent=75,enable_configless,disable_triggers,max_powered_nodes=32
SlurmdDebug = info
SlurmdLogFile = /var/log/slurmd.log
SlurmdParameters = (null)
SlurmdPidFile = /var/run/slurm/slurmd.pid
SlurmdPort = 6818
SlurmdSpoolDir = /var/spool/slurmd
SlurmdSyslogDebug = (null)
SlurmdTimeout = 180 sec
SlurmdUser = root(0)
SlurmSchedLogFile = (null)
SlurmSchedLogLevel = 0
SlurmctldPidFile = /var/run/slurm/slurmctld.pid
SLURM_CONF = /etc/slurm/slurm.conf
SLURM_VERSION = 25.05.3
SrunEpilog = (null)
SrunPortRange = 0-0
SrunProlog = (null)
StateSaveLocation = /mnt/efs/slurm/statesave
SuspendExcNodes = login-[1-1],
SuspendExcParts = (null)
SuspendExcStates = DYNAMIC_FUTURE,DYNAMIC_NORM
SuspendProgram = /etc/slurm/scripts/suspend_program.sh
SuspendRate = 0 nodes/min
SuspendTime = 600 sec
SuspendTimeout = 60 sec
SwitchParameters = (null)
SwitchType = (null)
TaskEpilog = (null)
TaskPlugin = task/cgroup,task/affinity
TaskPluginParam = (null type)
TaskProlog = (null)
TCPTimeout = 2 sec
TLSParameters = (null)
TLSType = tls/none
TmpFS = /tmp
TopologyParam = RoutePart
TopologyPlugin = topology/flat
TrackWCKey = no
TreeWidth = 16
UsePam = no
UnkillableStepProgram = (null)
UnkillableStepTimeout = 1050 sec
VSizeFactor = 0 percent
WaitTime = 0 sec
X11Parameters = (null)

Cgroup Support Configuration:
AllowedRAMSpace = 100.0%
AllowedSwapSpace = 0.0%
CgroupMountpoint = /sys/fs/cgroup
CgroupPlugin = autodetect
ConstrainCores = yes
ConstrainDevices = no
ConstrainRAMSpace = yes
ConstrainSwapSpace = no
EnableControllers = no
EnableExtraControllers = (null)
IgnoreSystemd = no
IgnoreSystemdOnFailure = no
MaxRAMPercent = 100.0%
MaxSwapPercent = 100.0%
MemorySwappiness = (null)
MinRAMSpace = 30MB
SystemdTimeout = 1000 ms

MPI Plugins Configuration:
PMIxCliTmpDirBase = (null)
PMIxCollFence = (null)
PMIxDebug = 0
PMIxDirectConn = yes
PMIxDirectConnEarly = no
PMIxDirectConnUCX = no
PMIxDirectSameArch = no
PMIxEnv = (null)
PMIxFenceBarrier = no
PMIxNetDevicesUCX = (null)
PMIxTimeout = 300
PMIxTlsUCX = (null)

Slurmctld(primary) at slurmctld-primary is UP
```