My Journey in the Data Analytics Department as a Trainee, Part 1

This article was published more than a year ago, so some of the information may be outdated.

This beautiful journey started in March 2021. The experience I gained in the Data Analytics department was tremendous. I was fortunate enough to learn many new services, tools and other important things, and I was glad to be trained by excellent mentors who had diverse knowledge of data analytics and were always helpful in times of difficulty. This blog gives my perspective on the Data Analytics department and what I learned in the various phases of the training. Like the training itself, the blog is divided into weeks and then into tasks.

Week 1

Week 1 mostly concentrated on the basics: what data analytics is, the various functions of the Data Analytics department, and the setup of the resources used during the training.

Task 1

The Data Analytics Business Unit concentrates on data analysis platform construction, data flow implementation and analysis tools. The division is divided into several departments, each with its own mission. From a business perspective, its work is a combination of data accumulation first, then data analysis, and lastly data utilization.

A data analytics platform exists to support data analysis: finding, in internal and external data, actions that are likely to produce useful results in corporate activities. The platform itself is a system with the functions of collecting, processing, accumulating and providing data; associated AWS services include Amazon Redshift, AWS Glue and many others. The first step is collecting data for analysis. That data lives in many locations, such as in-house databases and customer data on Salesforce. Acquisition methods differ by source: ODBC/SQL for an in-house database, a dedicated API for a SaaS service, and so on. Data structures differ too: an in-house database table is exported as CSV or TSV, while a SaaS service may provide CSV, JSON, Excel and so on. The collected data should then be stored in a location that can easily be accessed with SQL.
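To make the collect-and-store step concrete, here is a minimal sketch in Python (the language used in this training). It assumes two hypothetical exports, a CSV table dump standing in for an in-house database and a JSON payload standing in for a SaaS API response, and uses an in-memory SQLite database as a stand-in for an SQL-accessible store such as Amazon Redshift. The table and column names are illustrative only.

```python
import csv
import io
import json
import sqlite3

# Hypothetical sample exports: a CSV dump of an in-house database table
# and a JSON payload such as a SaaS API might return.
CSV_EXPORT = """customer_id,name,region
1,Alice,Tokyo
2,Bob,Osaka
"""

JSON_EXPORT = '[{"customer_id": 3, "name": "Carol", "region": "Tokyo"}]'


def load_customers(conn: sqlite3.Connection) -> None:
    """Collect rows from both sources and store them in one SQL table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(customer_id INTEGER PRIMARY KEY, name TEXT, region TEXT, source TEXT)"
    )
    # CSV values arrive as strings, so convert the key explicitly.
    csv_rows = [
        (int(r["customer_id"]), r["name"], r["region"], "in_house_csv")
        for r in csv.DictReader(io.StringIO(CSV_EXPORT))
    ]
    # JSON already carries typed values.
    json_rows = [
        (r["customer_id"], r["name"], r["region"], "saas_json")
        for r in json.loads(JSON_EXPORT)
    ]
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?, ?)", csv_rows + json_rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for an SQL-accessible store
load_customers(conn)
# Once stored, data from both sources can be queried with plain SQL.
print(conn.execute(
    "SELECT region, COUNT(*) FROM customers GROUP BY region ORDER BY region"
).fetchall())
```

The point of the design is that, whatever shape the source data arrives in, everything ends up in one table that analysts can query with ordinary SQL.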

A short briefing on the data lake, data warehouse and data mart:

- Data Lake: initially a place for storing raw data; later, data storage close to the raw data that can be searched immediately with SQL and similar tools.
- Data Warehouse (DWH): as a structure, a collection of analytical tables, typically stored in a relational database; as a system, it manages that set of tables.
- Data Mart (DM): an aggregated form of the DWH, most useful when built for a specific analysis.
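The three layers can be illustrated with a small sketch. Assuming hypothetical order data, and using SQLite in place of a real lake or DWH platform, raw text records form the lake layer, a cleaned and typed table forms the DWH layer, and a per-day, per-store aggregate forms the data mart. All table names are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Data lake layer (simplified): raw records kept close to their original form,
# stored as untyped text but already searchable with SQL.
conn.execute("CREATE TABLE lake_orders (raw_date TEXT, raw_store TEXT, raw_amount TEXT)")
conn.executemany(
    "INSERT INTO lake_orders VALUES (?, ?, ?)",
    [
        ("2021-03-01", " tokyo", "1200"),
        ("2021-03-01", "Tokyo ", "300"),
        ("2021-03-01", "Osaka", "800"),
        ("2021-03-02", "Tokyo", "500"),
    ],
)

# Data warehouse layer: a cleaned, typed analytical table built from the lake
# (store names trimmed and upper-cased, amounts cast to integers).
conn.execute("""
    CREATE TABLE dwh_orders AS
    SELECT DATE(raw_date) AS order_date,
           UPPER(TRIM(raw_store)) AS store,
           CAST(raw_amount AS INTEGER) AS amount
    FROM lake_orders
""")

# Data mart layer: an aggregate of the DWH shaped for one specific analysis
# (daily sales per store).
conn.execute("""
    CREATE TABLE mart_daily_sales AS
    SELECT order_date, store, SUM(amount) AS total_amount
    FROM dwh_orders
    GROUP BY order_date, store
""")

for row in conn.execute("SELECT * FROM mart_daily_sales ORDER BY order_date, store"):
    print(row)
```

Cleaning happens once, between the lake and the DWH, so every mart built on top of the DWH starts from consistent data.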

It's always good to keep in mind that clean, fresh data is as important as a large volume of data.

BI (Business Intelligence) is software for quickly finding and executing actions that seem productive. BI serves several purposes. Reporting displays the latest data in an easy-to-understand form, allows easy reference from a browser or tablet, and offers notification functions, among other features. Multi-dimensional analysis makes it easy to combine the DWH and the data lake to obtain fruitful results.
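As a rough illustration of multi-dimensional analysis, the sketch below sums a measure over two dimensions (region and month), which is essentially what a BI pivot table does, and then rolls the month dimension up. The field names and figures are hypothetical; a real BI tool would run equivalent aggregations against the DWH rather than an in-memory list.

```python
from collections import defaultdict

# Hypothetical fact rows, e.g. pulled from a DWH table.
sales = [
    {"region": "Tokyo", "month": "2021-03", "amount": 1200},
    {"region": "Tokyo", "month": "2021-04", "amount": 900},
    {"region": "Osaka", "month": "2021-03", "amount": 800},
    {"region": "Osaka", "month": "2021-03", "amount": 200},
]


def pivot(rows, row_dim, col_dim, measure):
    """Sum `measure` for every (row_dim, col_dim) combination, like a pivot table."""
    cells = defaultdict(int)
    for r in rows:
        cells[(r[row_dim], r[col_dim])] += r[measure]
    return dict(cells)


by_region_month = pivot(sales, "region", "month", "amount")
print(by_region_month)

# Roll up the month dimension: total per region across all months.
by_region = defaultdict(int)
for (region, _month), total in by_region_month.items():
    by_region[region] += total
print(dict(by_region))
```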

Task 2

The focus here is setting up the resources required for the training. Below is a short description of each resource and how it is used in the training.

Resources required for the training course

AWS CLI

The AWS Command Line Interface (AWS CLI) is an open-source tool that enables you to interact with AWS services using commands in your command-line shell. For more information on the CLI, see Reference 1.

Python

The programming language we used with the Serverless Framework during the training. See Reference 2 for more information about Python.

Serverless Framework

The Serverless Framework lets you build applications composed of microservices that run in response to events, scale automatically, and only charge you when they run. See Reference 3 for more information about the Serverless Framework.
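For a feel of what a Serverless Framework function looks like in Python, here is a minimal AWS Lambda handler sketch. The file name `handler.py`, the function name `hello` and the HTTP route in the comment are hypothetical. Lambda calls a Python handler with `(event, context)`, and the return shape shown assumes an API Gateway HTTP API integration.

```python
import json


# Hypothetical handler module (handler.py). In serverless.yml it could be
# wired to an HTTP event roughly like this:
#   functions:
#     hello:
#       handler: handler.hello
#       events:
#         - httpApi: 'GET /hello'
def hello(event, context):
    """Lambda entry point: called with the triggering event and a context object."""
    # For an HTTP API event, query parameters arrive under
    # "queryStringParameters", which is absent or None when there are none.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local usage example: invoke the function directly with a fake event.
    print(hello({"queryStringParameters": {"name": "trainee"}}, None))
```

Because the handler is a plain function, it can be exercised locally with a fake event before deploying, and the framework only charges when the deployed function actually runs.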

Backlog Settings

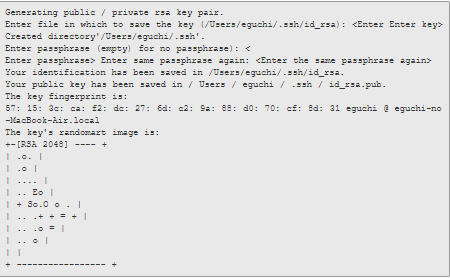

This is one of the important sections, because it establishes a connection between Backlog Git and our local system (our PC). Run the command below in your preferred console to generate a public and private key pair.

```shell
ssh-keygen -t rsa
```

Run the command below to display the public key.

```shell
cat ~/.ssh/id_rsa.pub
```

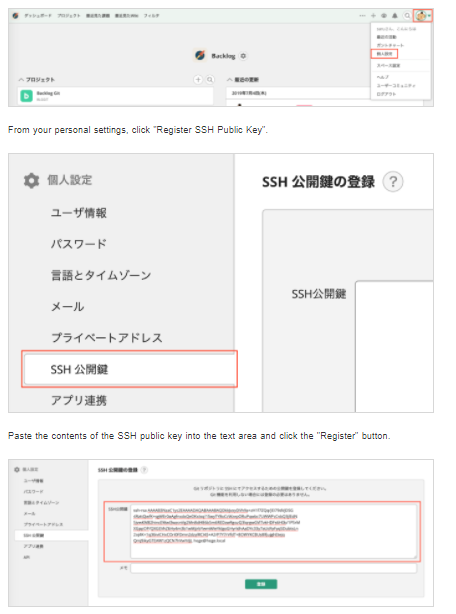

Copy the public key shown above, open Backlog, click your profile, then click Register SSH Public Key, paste the key and save it.

Git Flow

Git Flow is a branching model for Git. In layman's terms, we follow the steps below to push files from our local repository to the Backlog Git repository, where others can review them; in my case, our mentors reviewed our solutions. The steps are as follows:

```shell
# Clone the repository into the directory where you want to work
$ git clone cm1@cm1.git.backlog.jp:/CM_DA_NEW_GRADUATES/YOUR.NAME.git
# Change into the cloned repository
$ cd YOUR.NAME
# Initialize git-flow
$ git flow init
# Create a feature branch
$ git flow feature start PreferredBranchName
# Stage your changed files
$ git add .
# Commit
$ git commit -m "Any comment like added files, modified files or deleted files can be written here"
# Push the feature branch to the remote
$ git flow feature publish PreferredBranchName
```
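To see what the git-flow feature commands do under the hood, the sketch below maps them to roughly equivalent plain Git commands. It assumes the defaults that `git flow init -d` offers (a `develop` branch and the `feature/` prefix); the branch name `login-form` is hypothetical. The real `git flow feature publish` also fetches and configures tracking itself, so `git push -u` is only an approximation.

```python
import shlex

# Assumed git-flow defaults (as offered by `git flow init -d`);
# your repository may be configured differently.
DEVELOP_BRANCH = "develop"
FEATURE_PREFIX = "feature/"


def git_flow_equivalents(name, remote="origin"):
    """Return roughly equivalent plain-git commands for two git-flow feature steps."""
    branch = FEATURE_PREFIX + name
    return {
        # git flow feature start NAME: branch off develop and switch to it.
        "feature start": [["git", "checkout", "-b", branch, DEVELOP_BRANCH]],
        # git flow feature publish NAME: push the branch and track it remotely.
        "feature publish": [["git", "push", "-u", remote, branch]],
    }


for step, commands in git_flow_equivalents("login-form").items():
    for argv in commands:
        print(f"{step:16} -> {shlex.join(argv)}")
```

Seeing the plain commands makes it clearer why the feature branch shows up on Backlog as `feature/<name>` and why it is based on `develop` rather than the main branch.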

Summary

This blog described my Week 1 in the DA department, which gave me an introduction to what data analytics actually is, its various departments, and a set of tools: the CLI for connecting to our AWS account, the Backlog settings, and Git Flow, which is used to push files from the local repository (local PC) to the remote repository (Backlog Git). The upcoming blog will describe how the resources mentioned above are used, along with other new resources.