My Journey In Data Analytics Department as Trainee 第3話(最終部)

この記事は公開されてから1年以上経過しています。情報が古い可能性がありますので、ご注意ください。

My journey in Data Analytics department came to end at last week of march. My time in the Data Analytics Department gave me an urge to continue learning new things. Week3 was introduced with a new service Amazon Redshift which is explained below. how exactly redshift is used and related things. Also how to connect redshift with few AWS services. If you haven't gone through My Journey In Data Analytics Department as trainee 第1話と第2話 it is highly recommended for better understanding of Data Analytics department, tools and services used which maybe useful in analysis and other departments. Also to continue with the below blog you need to follow some steps that are present in the ep1 and ep2. The link for the same is posted below.

3週目

The final week focuses mostly on redshift, what exactly is redshift, initial setup that is required for the redshift inorder to start making use of it. Then we move on to creating a database in it, creating a table in it. why exactly the creation of the external table is necessary and lastly to execute query that gives out output without duplicate records.

Advance Preparation

Redshift, AWS Redshift enables you to have a real time lake house approach which combines traditional data sources in our data warehouse with event data stored in S3 as well as real time data stored in Amazon Aurora or RDS. For more information on AWS Redshift please check 参照 1.

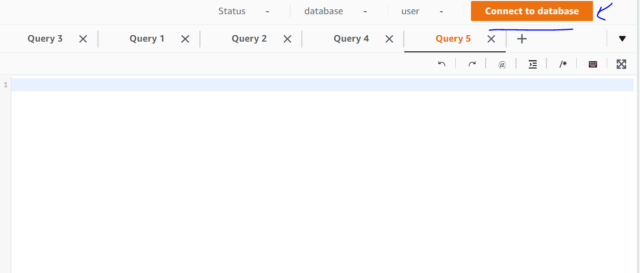

Next we move onto cluster creation. The cluster created here is with DC2. Check 参照 2 for more information about how to create cluster. This common cluster is used by all the members in trainee. Again a common database created used by all trainee in the start. Next we move on to query editor, there is an inbuilt query editor of Redshift which is recommended check 参照 3 for more information or other option is making using of client tool.

For Connecting to database in Query editor follow the below steps.

After clicking connect database follow the below setup.

- Connection:

- Create a new connection

- Authentication:

- Temporary Credential

- Cluster:

- da-india-shared

- Database name:

- devio

- Database user:

- india_shared

タスク1

In this task we create our personal database, after following the above steps for connecting database in advance preparation section, we type the below command in query editor to create the database.

create database hemanth #you can give any name for database

Next is creation of pull request, click here for information related to it.

タスク2

Creation of table in database created. Click on connect database in query editor. Follow the same procedure as in Advance preparation just replace devio with your database name, here the database name created above is hemanth type it down.

create table hemanthweek3 (title VARCHAR, url VARCHAR, publish_date TIMESTAMP, author VARCHAR, tags VARCHAR);

Next is creation of pull request, click here for information related to it.

タスク3

Create an external table so that you can read the JSON file saved in the S3 bucket in Week2. Here it is good to keep in mind that the file to be processed is in in JSONL format. For this first we have to create IAM role with Amazon S3 access, other perquisites and associated with cluster. In the training it was created well in advance and this IAM role was commonly used by all the trainees.

Next step is creation of external schema as shown below.

create external schema hemanthspectrum from data catalog database 'hemanth' iam_role 'arn:aws:iam::123456789012:role/da-exercise-202103-redshift-s3' create external database if not exists;

The IAM role used here is the created one above.

Next Creation of the external table

CREATE EXTERNAL table hemanthspectrum.data ( title varchar(4000), link varchar(4000), author varchar(4000), published timestamp, category array<struct<term:varchar,scheme:varchar,label:varchar>> ) ROW FORMAT SERDE 'org.openx.data.jsonserde.JsonSerDe' STORED AS INPUTFORMAT 'org.apache.hadoop.mapred.TextInputFormat' OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat' LOCATION 's3://da-exercise-202103/hemanth_kumar_r/json-data/';

Location is place where your data is store in S3, here its the JSONL file. category has sub tags namely term, scheme and label hence we have put them in an array struct form.

Finally to check if data is present in the external table execute the below command and if you get an output it should be good.

SELECT f.title, f.link, f.author, f.published FROM hemanthspectrum.data AS f

For more information on Amazon Redshift spectrum check 参照 4 and more information on related to nested query check 参照 5.

タスク4

In the final task, execute an query which stores no duplicate records from the external table to the analysis table i.e that the table created in タスク2。

TRUNCATE TABLE hemanthweek3; #Inserting data to the hemanthweek3 analysis table insert into hemanthweek3(title,url,publish_date,author,tags) SELECT DISTINCT t.title , t.link , t.published , t.author , c.term FROM hemanthspectrum.data AS t LEFT JOIN t.category AS c ON TRUE #to verify SELECT title, url, publish_date, author, tags FROM hemanthweek3

First truncate to delete if any data exists inside the analysis table, next insert command followed by select distinct so that data without any duplication is stored in the analysis table. Next verifying the data with select command.

まとめ

Week 3 focused on Amazon Redshift, querying in it, how the data from s3 can be queried in redshift using external data, how to transfer the data from the external table to normal table (Analysis table). The training in the Data Analytics department comes to close in this week. Hence the series my journey in data analytics series comes to close. At the very beginning of this journey there might be certain problems to overcome but as you overcome the results are presented.

参照

- https://dev.classmethod.jp/articles/aws-redshift-101/

- Cluster Creation in Redshift

- https://docs.aws.amazon.com/redshift/latest/mgmt/query-editor.html

- Creation of IAM role, it's association, creation of external schema and external table and also query in S3

- https://docs.aws.amazon.com/redshift/latest/dg/tutorial-query-nested-data.html